Exam Code: E20-598

Exam Name: Backup Recovery - Avamar Specialist for Storage Administrators

Certification Provider: EMC

Corresponding Certification: EMCSA Avamar

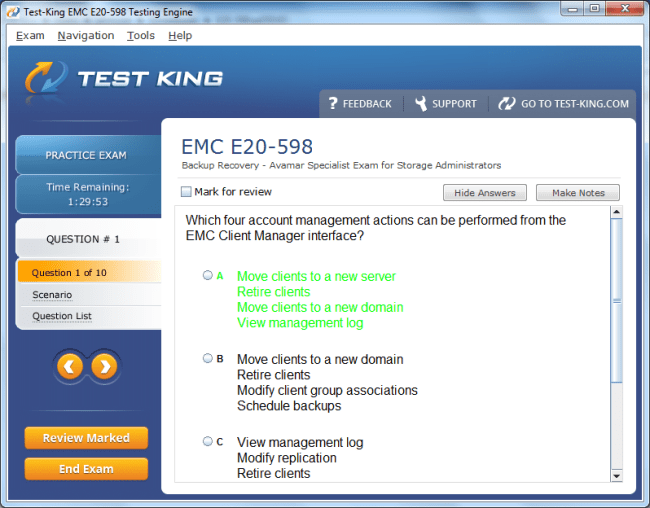

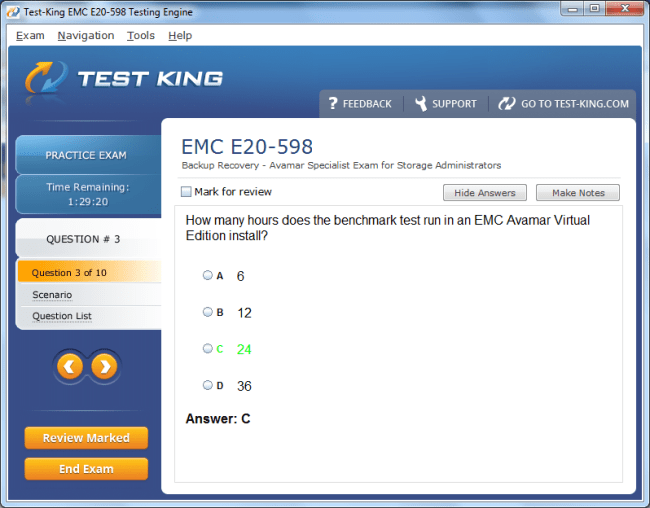

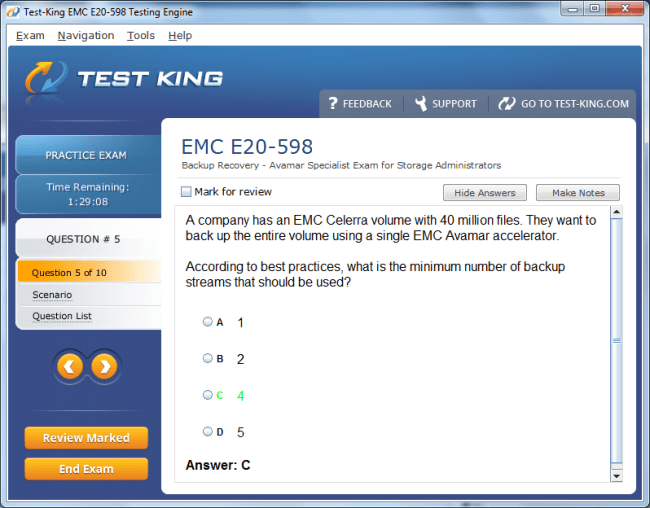

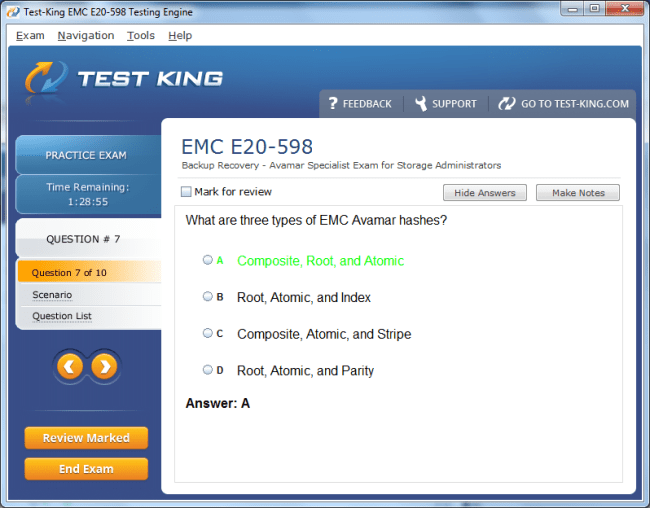

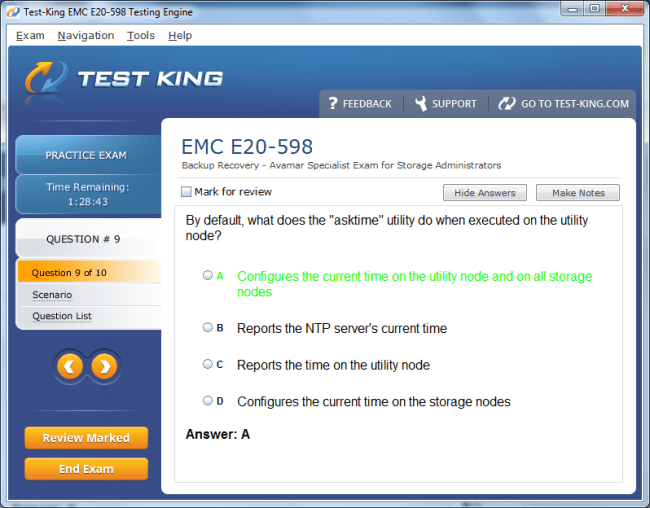

E20-598 Exam Product Screenshots

Product Reviews

Passed EMCSA Avamar E20-598 Exam

"Passed EMCSA Avamar E20-598 Exam with a score of 987/1000 because all the questions provided for the EMCSA Avamar E20-598 Exam by Testking were awesome. They cleared up my EMCSA Avamar E20-598 Exam concepts... Thanks for the enormous job... loved my Testking experience :-))) yay Ora Craft"

Passed my EMCSA Avamar E20-598

"Passed my EMCSA Avamar E20-598 exam and I am very happy today. Thanks to Testking for giving me help in preparing and eventually passing EMCSA Avamar E20-598 exam. PEYTON"

11gTest was Apple pie

"Your test Products were just like real Exam Products. Testking E20-598 Testing Engine was simulation of Real Exam. I am definitely going to use Testking for future. I trust you I trust Testking always. ROWLEY"

Truly Delighted

"Am happy to inform that I passed my EMCSA Avamar E20-598 exam last week with excellent scores. I am extremely happy and delighted and can't thank you guys enough. Your customer care was always there to help me understand any difficulty I faced whilst preparing for EMCSA Avamar E20-598 exam. I did not understand a few points in the EMCSA Avamar E20-598 study guide due to lack of IT background but they helped me through the concepts via providing me with the facilitating tutorials. Thanks a lot guys. I owe you a lot! Germaine Antonio, Los Angeles"

Top Secret of Toppers... TestKing

"I wanted to reach a top position in my academic career and could not find the secret to it. Then I searched for the Testking test engine and found the secret. All the exam material was based on Testking study packages. Now I will keep using it and will score high in the future. Ashley"

Frequently Asked Questions

How can I get the products after purchase?

All products are available for download immediately from your Member's Area. Once you have made the payment, you will be transferred to the Member's Area, where you can log in and download the products you have purchased to your computer.

How long can I use my product? Will it be valid forever?

Test-King products are valid for 90 days from the date of purchase. During those 90 days, any updates to the products, including but not limited to new questions and changes made by our editing team, are automatically downloaded to your computer so that you always have the latest exam prep materials.

Can I renew my product when it has expired?

Yes, when the 90 days of your product validity are over, you have the option of renewing your expired products with a 30% discount. This can be done in your Member's Area.

Please note that you will not be able to use the product after it has expired if you don't renew it.

How often are the questions updated?

We always try to provide the latest pool of questions. Updates to the questions depend on changes in the actual question pools of the different vendors. As soon as we learn of a change in an exam question pool, we do our best to update the products as quickly as possible.

How many computers can I download Test-King software on?

You can download Test-King products on a maximum of 2 (two) computers or devices. If you need to use the software on more than two machines, you can purchase this option separately. Please email support@test-king.com if you need to use more than 5 (five) computers.

What is a PDF Version?

The PDF Version is a PDF document of the Questions & Answers product. The file uses the standard .pdf format, which can be read by any PDF reader application, such as Adobe Acrobat Reader, Foxit Reader, OpenOffice, Google Docs, and many others.

Can I purchase PDF Version without the Testing Engine?

The PDF Version cannot be purchased separately. It is only available as an add-on to the main Question & Answer Testing Engine product.

What operating systems are supported by your Testing Engine software?

Our testing engine is supported on Windows. Android and iOS versions are currently under development.

Mastering EMC Avamar: Key Concepts You Must Know for the E20-598 Exam

In the intricate universe of enterprise data protection, EMC Avamar stands as a paragon of efficiency, designed to deliver unparalleled backup and recovery capabilities across diverse IT environments. The modern data landscape is characterized by exponential information growth, intricate compliance regulations, and the perpetual need for instantaneous recovery. Within this demanding ecosystem, the E20-598 certification validates a professional’s prowess in managing, maintaining, and optimizing Avamar-based infrastructures, which have become indispensable for organizations that prioritize resilience and continuity.

Introduction to EMC Avamar and the E20-598 Exam

Understanding Avamar begins with grasping its conceptual foundation. EMC Avamar is a sophisticated deduplication backup and recovery solution that operates on the principle of minimizing redundant data before it ever traverses the network. This unique capability transforms the economics and logistics of backup operations. By storing only unique data segments, Avamar reduces bandwidth consumption and storage requirements, creating a more streamlined and energy-efficient infrastructure. The E20-598 exam evaluates one’s ability to harness this technology proficiently, ensuring mastery of Avamar’s architecture, client integration, operational workflow, and management methodologies.

The examination itself is not merely a theoretical assessment; it is an evaluation of one’s ability to apply Avamar principles in practical, real-world situations. Candidates who aspire to achieve this certification must cultivate a deep comprehension of Avamar’s internal components, from its client-server relationship to its grid architecture. This understanding extends to how Avamar integrates with broader EMC ecosystems, such as Data Domain and NetWorker, to deliver unified backup solutions.

EMC Avamar’s evolution has mirrored the transformation of enterprise IT. Initially conceptualized to address the inefficiencies of traditional tape-based backups, it has matured into a fully virtualized and cloud-ready platform that supports both physical and virtual environments. Avamar’s intelligent data deduplication and robust recovery processes make it a cornerstone for storage administrators seeking to optimize performance and reliability. To excel in the E20-598 examination, one must appreciate not just the functional mechanics of Avamar, but also its philosophical approach to data protection—one that favors precision, scalability, and sustainability.

At its core, Avamar is built around a multi-tiered grid architecture that divides functions across specialized nodes. Each node type—whether it be storage, utility, management, or access—plays a unique role in ensuring the harmony of the entire system. The grid concept allows for linear scalability and redundancy, ensuring that even as data volumes escalate, system performance remains steady. Avamar’s architecture inherently supports fault tolerance through distributed data placement, which means that even if a single node encounters an anomaly, the system maintains continuity.

When examining the interplay between Avamar and its clients, one observes a seamless interaction facilitated through Avamar agents installed on host systems. These agents identify and transmit only new or changed data blocks to the Avamar server, dramatically reducing network load. The mechanism involves segmenting files into variable-length chunks, computing unique hashes for each segment, and cross-referencing these hashes with the Avamar server’s global index. Only unrecognized chunks are transmitted and stored, which eliminates redundancy.
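
The hash-and-compare step described above can be sketched in a few lines of Python. This is a minimal illustration rather than Avamar's actual implementation: the `backup` function, the in-memory `server_store` dictionary, and the choice of SHA-1 via `hashlib` are all assumptions made for the example, and the chunks are given directly instead of being produced by a real segmentation step.

```python
import hashlib

def backup(chunks, server_store):
    """Send only chunks the server has not stored yet; return bytes transmitted."""
    bytes_sent = 0
    for chunk in chunks:
        digest = hashlib.sha1(chunk).hexdigest()   # fingerprint of this chunk
        if digest not in server_store:             # unknown hash: transmit and store
            server_store[digest] = chunk
            bytes_sent += len(chunk)
    return bytes_sent

server_store = {}                                  # hypothetical hash -> chunk index
monday = [b"alpha", b"bravo", b"charlie"]
tuesday = [b"alpha", b"bravo", b"delta"]           # only the last chunk changed
sent_monday = backup(monday, server_store)
sent_tuesday = backup(tuesday, server_store)
print(sent_monday, sent_tuesday)                   # 17 5
```

The second run transmits only the one chunk whose hash the server has not seen, which is the effect the paragraph attributes to client-side deduplication.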

The E20-598 certification assesses knowledge of these intricate mechanisms in depth. Candidates must understand how data deduplication enhances backup performance, how the hash algorithm ensures data integrity, and how Avamar achieves efficient compression and encryption without compromising speed. Furthermore, the examination measures one’s ability to configure, schedule, and monitor backup jobs across heterogeneous environments, ensuring that recovery objectives align with organizational policies.

Beyond its technical structure, Avamar exemplifies operational elegance through its management interface, the Avamar Administrator. This console allows administrators to oversee all backup and restore activities, monitor capacity utilization, configure retention policies, and generate diagnostic reports. The Administrator serves as a nexus for both strategic and tactical operations, offering granular visibility into every aspect of the backup infrastructure. To master Avamar at the level expected by the E20-598 examination, one must not only navigate this interface intuitively but also interpret its data to make informed maintenance and optimization decisions.

An often-underestimated component of Avamar is its ability to integrate seamlessly with Data Domain systems. This synergy enhances scalability, allowing organizations to leverage Data Domain as a repository for long-term retention while maintaining Avamar’s superior deduplication for client-side efficiency. The integration ensures that backups are both rapid and resilient, with the capacity to manage vast datasets across dispersed geographical regions. Understanding this integration is vital for candidates preparing for the E20-598 certification, as it reflects real-world deployment scenarios where Avamar operates within a hybrid data protection framework.

From an operational perspective, Avamar’s backup and recovery processes are governed by policies that dictate retention periods, backup windows, and client priorities. Administrators define these parameters based on service-level agreements and data criticality. The Avamar server enforces these policies with remarkable precision, executing scheduled backups, verifying data integrity, and maintaining checkpoint records that safeguard against corruption. In the event of anomalies, Avamar’s built-in recovery mechanisms allow administrators to revert to previous stable states, preserving the continuity of data availability.

Another pivotal concept in Avamar’s operational framework is the use of checkpoints and rollbacks. These mechanisms function as temporal markers that capture the system’s state at specific intervals. If an error or corruption occurs, administrators can invoke a rollback to restore the system to its last verified checkpoint. This strategy fortifies Avamar’s reliability, making it particularly valuable in environments where downtime equates to significant financial or reputational loss. The E20-598 exam often probes a candidate’s understanding of how these checkpoints interact with backup policies and garbage collection routines.
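
As a rough mental model of checkpoints and rollbacks, the following Python sketch keeps snapshots of a toy server state and rolls back to the most recent one that has been validated. The `ToyServer` class, its method names, and the checkpoint tags are hypothetical and exist only for illustration; they do not mirror Avamar's real commands or data structures.

```python
import copy

class ToyServer:
    """Hypothetical stand-in for server state; not Avamar's real data model."""

    def __init__(self):
        self.state = {"backups": []}
        self.checkpoints = []   # each entry: {"tag", "snapshot", "validated"}

    def take_checkpoint(self, tag):
        snapshot = copy.deepcopy(self.state)       # freeze the state as of now
        self.checkpoints.append({"tag": tag, "snapshot": snapshot, "validated": False})

    def validate(self, tag):
        for cp in self.checkpoints:
            if cp["tag"] == tag:
                cp["validated"] = True

    def rollback(self):
        """Restore the most recent validated checkpoint and return its tag."""
        for cp in reversed(self.checkpoints):
            if cp["validated"]:
                self.state = copy.deepcopy(cp["snapshot"])
                return cp["tag"]
        raise RuntimeError("no validated checkpoint available")

server = ToyServer()
server.state["backups"].append("mon")
server.take_checkpoint("cp.1")
server.validate("cp.1")
server.state["backups"].append("tue")
server.take_checkpoint("cp.2")                     # taken but never validated
server.state["backups"].append("corrupt")
restored = server.rollback()
print(restored, server.state)                      # cp.1 {'backups': ['mon']}
```

The unvalidated checkpoint is skipped, which reflects the idea that a rollback targets the last verified state rather than simply the newest one.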

Security remains an integral component of Avamar’s design philosophy. Every transaction between clients and servers is encrypted, and user authentication is strictly enforced through the Avamar Administrator and Management Console. The deduplication database itself is protected by layered encryption algorithms, ensuring that even if storage media were compromised, the data would remain indecipherable. The exam evaluates how well candidates grasp these protective layers and how they can be configured to align with enterprise security standards.

EMC Avamar’s efficiency is further amplified through its intelligent scheduling and bandwidth optimization capabilities. Administrators can configure Avamar to perform backups during low-traffic periods, balancing workload distribution and preventing network congestion. The system’s throttling features dynamically adjust data transmission rates based on available bandwidth, ensuring that other critical applications remain unaffected. The E20-598 examination assesses not only one’s ability to configure these features but also one’s understanding of their underlying logic and impact on system performance.
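
One simple way to reason about throttling is to compute how long a sender must pause so that its average rate stays under a cap. The Python sketch below shows that arithmetic only; the function name `pause_needed` and the byte-per-second figures are assumptions for illustration, and the source does not describe how Avamar's own throttling is implemented.

```python
def pause_needed(bytes_sent, elapsed_s, max_bytes_per_s):
    """Seconds to wait so the average rate stays at or below the cap."""
    min_elapsed = bytes_sent / max_bytes_per_s     # time the transfer should have taken
    return max(0.0, min_elapsed - elapsed_s)

fast = pause_needed(10_000_000, 2.0, 2_500_000)    # sent too quickly: must wait
slow = pause_needed(1_000_000, 2.0, 2_500_000)     # already under the cap
print(fast, slow)                                  # 2.0 0.0
```

Lowering `max_bytes_per_s` when other traffic needs the link lengthens the pauses, which is the general trade-off a throttle setting controls.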

Maintenance is another domain where Avamar demonstrates its finesse. Routine tasks such as garbage collection, checkpoint validation, and data integrity verification are automated yet configurable. The administrator can initiate on-demand integrity checks to ensure that the backup data remains pristine and recoverable. These procedures are essential for long-term stability and are often included in exam scenarios that test troubleshooting and preventive maintenance skills.

Avamar’s adaptability extends into virtualized environments, particularly VMware and Hyper-V platforms. The Avamar Virtual Edition (AVE) allows deployment as a virtual appliance, delivering the same capabilities as physical servers but with greater flexibility. This variant supports backup of virtual machines at both the image and file level, with deduplication occurring across the entire environment. For candidates pursuing the E20-598 certification, understanding how Avamar operates in these virtual ecosystems is paramount, as enterprise adoption of virtualization continues to grow.

A comprehensive grasp of Avamar also entails familiarity with its replication and disaster recovery functions. The system supports bidirectional replication between Avamar grids, enabling data redundancy across geographically distinct locations. This feature ensures business continuity even in catastrophic events, as replicated data can be activated swiftly from a secondary site. The exam evaluates one’s proficiency in configuring, monitoring, and troubleshooting replication workflows, which are vital for achieving stringent recovery time and recovery point objectives.

From a strategic viewpoint, EMC Avamar epitomizes the convergence of performance optimization and environmental consciousness. Its deduplication engine not only conserves storage but also reduces energy consumption by minimizing disk usage. This ecological efficiency contributes to the broader corporate sustainability goals of many organizations. Candidates who internalize this perspective demonstrate not only technical competence but also an appreciation for the holistic impact of technology on enterprise ecosystems.

While many backup solutions rely on post-processing deduplication, Avamar performs deduplication at the source. This preemptive approach ensures that only unique data is transmitted, which dramatically accelerates backup cycles. It also minimizes wear on network infrastructure and reduces the strain on storage arrays. The E20-598 examination emphasizes comprehension of this client-side deduplication process, including how Avamar differentiates between variable-length and fixed-length segmentation and how its hashing algorithms maintain precision without redundancy.

To navigate Avamar effectively, administrators must develop an intuitive sense of its performance metrics. Metrics such as capacity utilization, deduplication ratio, and backup duration offer valuable insights into the health and efficiency of the system. By interpreting these indicators, one can identify potential bottlenecks or misconfigurations before they escalate into operational issues. The exam often incorporates scenarios requiring interpretation of system reports, logs, and alerts generated by Avamar Administrator.
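
The deduplication ratio mentioned above is easy to work out by hand. The sketch below assumes the common definition, logical data protected divided by unique data actually stored; the function name and the sample figures are illustrative, and the way Avamar's own reports compute or label the metric may differ.

```python
def dedup_stats(logical_bytes, stored_bytes):
    """Return (ratio, fractional savings) for protected vs. stored data."""
    ratio = logical_bytes / stored_bytes
    savings = 1 - stored_bytes / logical_bytes
    return ratio, savings

ratio, savings = dedup_stats(1000, 50)             # e.g. 1000 GB protected, 50 GB stored
print(f"{ratio:.0f}:1, {savings:.0%} saved")       # 20:1, 95% saved
```

A ratio that drops sharply between reports can point to a newly added client with poorly deduplicating data, such as pre-compressed or encrypted files.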

A nuanced understanding of Avamar also involves comprehending its lifecycle management. Over time, backup datasets accumulate, consuming capacity and potentially degrading performance. Avamar addresses this through a sophisticated retention policy framework that automatically expires outdated backups and reclaims storage. Administrators can tailor these policies based on data sensitivity, compliance requirements, and organizational needs. The ability to craft efficient retention strategies is a key competency evaluated in the E20-598 exam.
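
To make the retention idea concrete, here is a small Python sketch that decides which backups have outlived their retention period. The policy names, retention lengths, and backup labels are invented for the example and are not Avamar defaults.

```python
from datetime import date, timedelta

def expired(backups, retention_days, today):
    """Return labels of backups older than the retention period of their policy."""
    return [
        label
        for label, taken, policy in backups
        if today - taken > timedelta(days=retention_days[policy])
    ]

retention_days = {"daily": 30, "monthly": 365}     # hypothetical policies
backups = [
    ("db-jan01", date(2024, 1, 1), "daily"),
    ("db-mar01", date(2024, 3, 1), "daily"),
    ("fin-jan01", date(2024, 1, 1), "monthly"),
]
to_expire = expired(backups, retention_days, today=date(2024, 3, 15))
print(to_expire)                                   # ['db-jan01']
```

The same January backup is kept or expired depending purely on the policy attached to it, which is why retention settings should follow data sensitivity and compliance needs.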

From an administrative standpoint, Avamar promotes precision and foresight. The Avamar Management Console provides a comprehensive overview of all operational parameters, allowing administrators to anticipate challenges rather than react to them. It offers reporting tools that highlight backup success rates, client performance, and system resource utilization. Effective interpretation of these reports enables predictive maintenance, ensuring optimal system reliability.

As enterprises transition toward hybrid and multi-cloud architectures, Avamar has evolved to support integration with public cloud platforms. Its ability to extend backup and recovery capabilities into cloud storage repositories broadens its applicability. Whether data resides in on-premises servers, virtual machines, or cloud environments, Avamar ensures cohesive management through its unified interface. Candidates preparing for the E20-598 exam must be conversant with these hybrid capabilities, as they represent the direction in which enterprise data protection is moving.

The journey toward mastering EMC Avamar for the E20-598 certification demands more than rote memorization; it requires conceptual clarity and applied intelligence. Each functional layer—from deduplication to replication—embodies principles that collectively form a robust data protection ecosystem. Mastery lies in perceiving how these layers interlock to create a seamless continuum of reliability, efficiency, and adaptability.

In the realm of enterprise storage administration, those who attain the E20-598 certification distinguish themselves not merely by technical knowledge but by their ability to translate that knowledge into operational excellence. EMC Avamar, with its fusion of innovation and precision, remains a testament to how technology can transform complexity into coherence, and chaos into control. Through diligent study, practical engagement, and analytical understanding, candidates can elevate themselves from mere users of Avamar to true custodians of data integrity and continuity.

Avamar Architecture and Components

The intricate design of EMC Avamar architecture serves as the backbone of its backup and recovery prowess, enabling enterprises to safeguard massive amounts of critical data with precision and consistency. Understanding this architecture is essential for anyone preparing for the E20-598 exam, as it reveals the inner workings of Avamar’s operational ecosystem. At its essence, Avamar operates on a grid-based structure, an intelligent network of nodes that collaborate to perform data deduplication, storage, recovery, and system management tasks. This distributed design ensures that workloads are balanced, performance is optimized, and no single point of failure can jeopardize the system’s integrity.

Avamar’s architectural philosophy is built upon modularity and scalability. Unlike traditional monolithic backup systems that rely on a single central repository, Avamar’s grid configuration distributes its responsibilities across multiple specialized nodes. Each node type serves a unique function, yet all operate harmoniously under a unified framework. The primary node categories include the utility node, storage node, management node, and access node. Together, they form a symbiotic relationship that supports both performance efficiency and operational resilience.

The utility node is often described as the brain of the Avamar grid. It orchestrates numerous administrative tasks, such as scheduling, checkpoint creation, and garbage collection. It also coordinates backup and restore operations by communicating with client systems and managing job distribution. The utility node is responsible for ensuring the system remains synchronized and healthy, executing periodic system checks, and maintaining catalog consistency. In environments with large data volumes or multiple Avamar grids, the utility node becomes indispensable in maintaining equilibrium and coherence across all operational fronts.

Storage nodes represent the heart of Avamar’s capacity and performance. These nodes are where the deduplicated data chunks are stored. Each storage node contains a combination of disk drives optimized for high throughput and data protection. The data stored here is not organized as traditional files but as hash-based objects, each corresponding to a unique data segment identified during the deduplication process. When clients initiate a backup, only unique data segments are transmitted to the Avamar grid and stored on these nodes. This architecture minimizes storage consumption and accelerates data retrieval.

The management node serves as the interface between the Avamar grid and the administrators who govern it. Through this node, users access the Avamar Management Console and Avamar Administrator application, where they can monitor system health, schedule tasks, analyze logs, and configure policies. The management node consolidates real-time metrics from all other nodes and presents them in an organized and intelligible manner. It allows administrators to identify potential bottlenecks, failed backups, or nodes requiring maintenance. The E20-598 exam often tests candidates on their understanding of how this management node integrates with the rest of the grid and how it can be leveraged for troubleshooting and optimization.

Access nodes play a subtler but equally crucial role in enabling communication between the Avamar clients and the grid infrastructure. They act as gateways, processing client requests for backup or recovery and routing them to the appropriate storage resources. These nodes enhance scalability by distributing client connections evenly across the grid, ensuring that no single node becomes overloaded. The access nodes also handle encryption and authentication procedures during client-server communication, thereby maintaining data confidentiality.

Beyond its nodes, Avamar’s architecture is characterized by its unique data deduplication mechanism. Deduplication in Avamar occurs at the source, which means that redundant data is eliminated before it is transmitted to the storage grid. This technique is facilitated through the Avamar client agent, a lightweight software component installed on the systems that need protection. When a backup is initiated, the agent analyzes the data at the file system level, dividing it into variable-length segments. Each segment is then processed through a hash algorithm that generates a unique identifier. The client compares these identifiers to the global hash index maintained by the Avamar server. If a segment’s hash already exists, the client omits transmission; if not, the new data is sent for storage. This process ensures that the network is used judiciously, conserving bandwidth and accelerating backup operations.

The Avamar deduplication index is one of the most intricate components of its architecture. It acts as a catalog that maps every unique data segment stored within the grid. The index allows the system to quickly reconstruct files during recovery operations by referencing which segments are required to rebuild the original data. This process ensures that even though data is broken down into countless deduplicated fragments, the system can reassemble them flawlessly upon request. The E20-598 certification assesses candidates’ understanding of how this index functions, its impact on system performance, and the importance of maintaining its integrity.
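
The reassembly step can be illustrated with a toy chunk store in Python. In this sketch a backup is recorded as an ordered list of hashes (called a `recipe` here), and a restore looks each hash up in the store. The names `store_chunks`, `restore`, and `recipe`, and the use of SHA-1, are assumptions for illustration rather than Avamar's internal format.

```python
import hashlib

def store_chunks(chunks, chunk_store):
    """Store each unique chunk once and return the ordered list of hashes."""
    recipe = []
    for chunk in chunks:
        digest = hashlib.sha1(chunk).hexdigest()
        chunk_store.setdefault(digest, chunk)      # duplicates are stored only once
        recipe.append(digest)
    return recipe

def restore(recipe, chunk_store):
    """Rebuild the original bytes by looking up every hash in order."""
    return b"".join(chunk_store[digest] for digest in recipe)

chunk_store = {}
recipe = store_chunks([b"header", b"body", b"body", b"footer"], chunk_store)
rebuilt = restore(recipe, chunk_store)
print(len(recipe), len(chunk_store), rebuilt)      # 4 3 b'headerbodybodyfooter'
```

Four chunk references resolve against only three stored chunks, yet the restored data is byte-for-byte identical. A missing or corrupted index entry would make `restore` fail, which is why index integrity matters.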

Equally important to Avamar’s architecture is its internal database structure. The Avamar server uses proprietary databases to track backup metadata, client configurations, and retention policies. These databases are essential for ensuring data consistency and enabling rapid queries during restore operations. They are tightly integrated with the grid architecture, and their synchronization is governed by the utility node. Administrators preparing for the E20-598 exam should understand how these databases interact and how corruption or misconfiguration can affect system performance.

Another architectural marvel within Avamar is its checkpoint system. At scheduled intervals, Avamar creates checkpoints that capture the system’s current state. These checkpoints function as restoration points that can be invoked if the grid experiences anomalies, such as data corruption or unexpected shutdowns. Each checkpoint consists of metadata snapshots, hash index references, and configuration data. The checkpoint mechanism ensures that the system can be restored to a stable state without data loss. This reliability feature is a cornerstone of Avamar’s design philosophy, and understanding its mechanics is crucial for certification success.

The concept of stripes within Avamar storage architecture also contributes to its performance optimization. Data within storage nodes is distributed across multiple stripes, each representing a logical division of disk resources. By dispersing data across stripes, Avamar achieves parallelism during read and write operations, thereby enhancing speed and reliability. In addition, striping improves fault tolerance since data loss in one stripe does not jeopardize the entire dataset. The E20-598 exam frequently assesses the candidate’s grasp of how striping works and its influence on backup and restore performance.
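
A simplified picture of striping is a rule that maps each chunk to one of several stripes so that reads and writes can proceed in parallel. The Python sketch below derives the stripe from the chunk's hash. This placement rule, the stripe count, and the function names are assumptions for illustration, since the source does not describe how Avamar actually assigns data to stripes.

```python
import hashlib

def stripe_for(chunk, stripe_count):
    """Pick a stripe from the first four bytes of the chunk's hash (assumed rule)."""
    digest = hashlib.sha1(chunk).digest()
    return int.from_bytes(digest[:4], "big") % stripe_count

def place(chunks, stripe_count):
    """Distribute chunks across stripes using stripe_for."""
    stripes = [[] for _ in range(stripe_count)]
    for chunk in chunks:
        stripes[stripe_for(chunk, stripe_count)].append(chunk)
    return stripes

chunks = [b"alpha", b"bravo", b"charlie", b"delta"]
stripes = place(chunks, 3)
for number, contents in enumerate(stripes):
    print(number, contents)
```

Because the stripe is computed from the hash, a later lookup can go straight to the right stripe without searching the others.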

Avamar’s architecture is designed with fault tolerance and self-healing mechanisms at its core. The system continuously monitors node health and data integrity. If a node fails, the grid’s redundancy ensures that operations continue with minimal interruption. The data chunks stored on failed nodes can be reconstructed from other nodes through the deduplication catalog. This capability epitomizes the resilience of Avamar and is a critical concept for storage administrators to master.

Replication is another architectural feature that reinforces Avamar’s reliability. It allows data to be duplicated across different Avamar grids or remote sites. Replication can be configured to occur automatically according to defined policies, ensuring that offsite copies are always synchronized. This feature supports disaster recovery strategies and complies with regulatory requirements for data protection. Understanding replication scheduling, retention synchronization, and bandwidth management is imperative for anyone aiming to excel in the E20-598 exam.

Within Avamar’s architecture lies an intricate communication framework that governs data exchange among nodes and clients. Communication is facilitated through secure network protocols, and traffic is optimized using compression and encryption. Avamar employs port-based communication channels to ensure smooth interaction between components. The Management Console coordinates these connections, maintaining system harmony and reducing latency.

From a performance standpoint, Avamar leverages multiple optimization techniques to ensure scalability. Data caching, load balancing, and resource pooling are intrinsic to its design. Load balancing distributes tasks evenly among nodes, preventing bottlenecks and maximizing throughput. Caching reduces disk I/O by temporarily storing frequently accessed data in memory. Resource pooling allows nodes to share workloads dynamically, thereby accommodating fluctuating demands without manual intervention. These architectural refinements contribute to Avamar’s reputation as a highly efficient and adaptable backup solution.
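
Load balancing of the kind described here can be approximated with a least-loaded assignment: each new job goes to whichever node currently has the smallest accumulated load. The following Python sketch uses the standard `heapq` module; the job names, costs, and node names are made up, and this is a generic technique rather than a description of Avamar's scheduler.

```python
import heapq

def assign(jobs, node_names):
    """Assign each (job, cost) pair to the node with the lowest load so far."""
    heap = [(0, name) for name in node_names]      # (current load, node name)
    heapq.heapify(heap)
    placement = {}
    for job, cost in jobs:
        load, name = heapq.heappop(heap)           # least-loaded node
        placement[job] = name
        heapq.heappush(heap, (load + cost, name))
    return placement

placement = assign([("a", 5), ("b", 3), ("c", 2), ("d", 4)], ["n1", "n2"])
print(placement)   # {'a': 'n1', 'b': 'n2', 'c': 'n2', 'd': 'n1'}
```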

Integration is a defining characteristic of Avamar’s architecture. The system is designed to coexist seamlessly with other EMC technologies such as Data Domain, NetWorker, and VMware vSphere. Integration with Data Domain enhances scalability by allowing Avamar to offload deduplicated data to a more expansive repository. Integration with VMware enables image-level backups of virtual machines, simplifying protection for virtualized environments. Understanding how Avamar communicates with these technologies is fundamental for the E20-598 exam, as candidates must demonstrate an ability to design and manage hybrid backup architectures.

Security within Avamar’s architectural framework is multilayered. Encryption is applied both during data transmission and at rest. Each backup operation is authenticated through certificates, ensuring that only authorized clients can communicate with the Avamar grid. Access control policies define user privileges, preventing unauthorized configuration changes or data access. These security features embody Avamar’s commitment to safeguarding sensitive information, and the E20-598 exam evaluates knowledge of how to implement and manage them effectively.

Monitoring and reporting functions are deeply embedded within Avamar’s architecture. The system continuously generates logs that capture every operational event, from client connections to backup completion statuses. These logs are vital for troubleshooting and auditing. Administrators can access performance reports that detail deduplication ratios, backup success rates, and system utilization metrics. Such visibility empowers proactive management and ensures compliance with corporate data protection policies.

Maintenance processes such as garbage collection, integrity checking, and checkpoint validation are intrinsic to Avamar’s sustainability. Garbage collection reclaims storage space by removing expired or redundant data segments. Integrity checking ensures that the stored data remains consistent and uncorrupted. Checkpoint validation confirms that recovery points are intact and usable. These processes run automatically at defined intervals, but administrators can also initiate them manually if anomalies arise. For the E20-598 exam, understanding the timing, purpose, and impact of these maintenance operations is critical.
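
Garbage collection in a deduplicated store has to respect sharing: a chunk can be deleted only when no remaining backup references it. The Python sketch below shows that rule with a toy store. The `collect_garbage` function, the hash labels, and the backup names are hypothetical and are not taken from Avamar.

```python
def collect_garbage(chunk_store, live_recipes):
    """Delete chunks that no live backup references; return how many were removed."""
    referenced = {digest for recipe in live_recipes for digest in recipe}
    dead = [digest for digest in chunk_store if digest not in referenced]
    for digest in dead:
        del chunk_store[digest]
    return len(dead)

chunk_store = {"h1": b"a", "h2": b"b", "h3": b"c"}
recipes = {"mon": ["h1", "h2"], "tue": ["h1", "h3"]}
del recipes["mon"]                                 # Monday's backup has expired
removed = collect_garbage(chunk_store, recipes.values())
print(removed, sorted(chunk_store))                # 1 ['h1', 'h3']
```

Chunk `h1` survives even though Monday's backup is gone, because Tuesday's backup still needs it. Only `h2`, which nothing references any more, is reclaimed.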

Another salient architectural component is the Avamar client plugin framework. Avamar supports a variety of plugins designed to protect specific applications and databases, such as Oracle, SQL Server, Exchange, and SharePoint. These plugins enable application-consistent backups by coordinating with the application’s native APIs. For instance, during an Oracle backup, the Avamar plugin communicates with RMAN to ensure transaction consistency. The architecture’s flexibility in accommodating diverse platforms makes it an indispensable solution for enterprises with heterogeneous environments.

The Avamar grid’s expandability ensures that organizations can scale as their data requirements grow. Adding new nodes to the grid is straightforward, involving minimal disruption to ongoing operations. The grid automatically redistributes data and workload among the newly added nodes. This elastic scalability aligns with modern enterprise demands for agility and cost efficiency. Candidates preparing for the E20-598 certification must understand the process of grid expansion, including node configuration, synchronization, and validation.

In virtualized and cloud-integrated infrastructures, Avamar’s architecture exhibits remarkable adaptability. The Avamar Virtual Edition allows deployment as a virtual appliance, eliminating the need for dedicated hardware. This virtual variant operates identically to its physical counterpart, supporting all backup and recovery functions within a software-defined environment. It integrates with cloud storage platforms, offering organizations flexibility in managing on-premises and offsite data simultaneously.

The elegance of Avamar’s architecture lies in its ability to blend complexity with simplicity. Beneath its intricate grid design and sophisticated algorithms lies a user-centric operational philosophy that empowers administrators to maintain control with ease. Every architectural component, from the smallest client plugin to the vast grid infrastructure, contributes to an ecosystem that values precision, reliability, and scalability. The E20-598 exam is structured to assess not just memorization of these architectural details but the candidate’s capacity to apply them effectively in dynamic, real-world situations.

A sound understanding of Avamar architecture provides more than technical knowledge; it shows how careful system design can balance speed, safety, and sustainability. Each node, hash, and checkpoint is part of a larger design in which data protection goes beyond storage and becomes a strategic instrument of business resilience. EMC Avamar stands as a testament to how meticulous engineering and forward-thinking design can redefine the possibilities of backup recovery technology.

Avamar Backup Processes and Deduplication Mechanism

The foundation of EMC Avamar’s innovation in data protection lies in its unique approach to backup processes and its highly efficient deduplication mechanism. For anyone preparing for the E20-598 exam, understanding these principles is essential because they form the operational nucleus of the Avamar platform. Traditional backup systems have long been plagued by inefficiency, redundancy, and the constant repetition of storing identical data across multiple sessions. Avamar resolves this dilemma through an ingenious system of client-side deduplication, variable-length data segmentation, and intelligent indexing, resulting in accelerated backups, optimized network usage, and significantly reduced storage costs.

The Avamar backup process begins at the client level, where an Avamar agent is installed on each system requiring protection. This agent is not merely a passive participant but an intelligent entity capable of analyzing data, identifying redundancies, and transmitting only unique data blocks to the Avamar server. When a backup operation is initiated, the agent examines the file system and divides files into small, variable-length chunks using an algorithm that identifies natural data boundaries. This segmentation process is critical, as it allows Avamar to capture changes with surgical precision, even if modifications occur within files rather than at their edges.
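The segmentation step can be illustrated with a toy content-defined chunker. This is a minimal sketch of the general technique, not Avamar’s actual algorithm, which the text does not specify; the window size, mask, size limits, and the function names `window_hash` and `chunk` are all assumptions chosen for illustration.

```python
WINDOW = 16          # bytes examined for each boundary decision (illustrative)
MASK = (1 << 6) - 1  # cut when the low 6 bits of the window hash are zero (illustrative)
MIN_SIZE = 16        # never cut a chunk shorter than this; must be >= WINDOW
MAX_SIZE = 256       # force a cut once a chunk reaches this length


def window_hash(window: bytes) -> int:
    """Simple polynomial hash of a small byte window (not cryptographic)."""
    h = 0
    for b in window:
        h = (h * 31 + b) & 0xFFFFFFFF
    return h


def chunk(data: bytes) -> list[bytes]:
    """Split data into variable-length chunks at content-defined boundaries."""
    chunks = []
    start = 0
    for i in range(len(data)):
        size = i - start + 1
        if size < MIN_SIZE:
            continue
        # The decision depends only on the last WINDOW bytes, not on file offsets.
        at_boundary = (window_hash(data[i - WINDOW + 1 : i + 1]) & MASK) == 0
        if at_boundary or size >= MAX_SIZE:
            chunks.append(data[start : i + 1])
            start = i + 1
    if start < len(data):
        chunks.append(data[start:])  # trailing remainder, possibly short
    return chunks
```

Because boundaries are derived from the bytes themselves, chunks that lie entirely before an edit are unchanged, and chunking after the edit tends to fall back into step with the original boundaries. Fixed-size blocks, by contrast, would all shift after an insertion.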

Each data chunk generated by the Avamar client is hashed with a cryptographic algorithm, producing a fingerprint that is, for practical purposes, unique to that chunk’s content. These hash values are compared against a centralized index maintained by the Avamar server. If a hash already exists in the index, the chunk has been stored previously and does not need to be transmitted again; only new or altered chunks are sent over the network. Because this deduplication happens on the client, bandwidth is spent only on unique data, which reduces network congestion and shortens backup completion times.
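The lookup-then-send decision can be sketched in a few lines. This is an illustration under stated assumptions: SHA-256 from Python’s `hashlib` stands in for the unnamed hash algorithm, a plain `set` stands in for the server index, and `fingerprint` and `plan_transfer` are hypothetical names.

```python
import hashlib


def fingerprint(chunk: bytes) -> str:
    """Content fingerprint; SHA-256 is a stand-in for the real algorithm."""
    return hashlib.sha256(chunk).hexdigest()


def plan_transfer(chunks: list[bytes], server_index: set[str]):
    """Return (chunks that must be sent, number of chunks skipped as duplicates)."""
    to_send = []
    skipped = 0
    for c in chunks:
        h = fingerprint(c)
        if h in server_index:
            skipped += 1              # already stored: send nothing
        else:
            to_send.append((h, c))    # new data: send hash and payload
            server_index.add(h)       # the index now knows this chunk
    return to_send, skipped
```

For example, with an empty index, `plan_transfer([b"a", b"b", b"a"], index)` sends two chunks and skips the repeated one; a later backup of `[b"a", b"c"]` against the same index sends only `b"c"`.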

Once the deduplicated data reaches the Avamar grid, it is distributed across the storage nodes according to the system’s grid architecture. The grid handles data placement intelligently, balancing loads among nodes to maintain performance consistency. Each data chunk is stored as an object within the Avamar storage system, accompanied by metadata that links it to its original file and client source. The system’s database maintains a catalog of these relationships, allowing for seamless data reconstruction during restoration. The efficiency of this model is one of the defining characteristics of Avamar and is a topic frequently emphasized in the E20-598 certification.

A pivotal element of Avamar’s backup process is the use of backup groups and policies. Administrators can organize clients into groups based on similar backup requirements or operational schedules. Each group is governed by a policy that dictates parameters such as backup frequency, retention duration, and priority level. For example, mission-critical servers may be configured for daily incremental backups with longer retention periods, while less vital data may be backed up weekly. These policies ensure systematic protection and optimal resource allocation across the Avamar environment.
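The relationship between groups and policies can be pictured as a small data structure. The sketch below is illustrative only: the class `GroupPolicy`, its field names, and the specific retention values are assumptions, not Avamar’s configuration schema.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class GroupPolicy:
    name: str
    interval: timedelta   # how often clients in the group are backed up
    retention: timedelta  # how long each backup is kept
    priority: int         # lower number runs first (a convention of this sketch)


def is_due(policy: GroupPolicy, last_backup: datetime, now: datetime) -> bool:
    """A group is due once a full interval has passed since its last backup."""
    return now - last_backup >= policy.interval


def expires_at(policy: GroupPolicy, backup_time: datetime) -> datetime:
    """Moment at which a backup taken at backup_time leaves retention."""
    return backup_time + policy.retention


# Hypothetical values mirroring the daily and weekly example in the text.
MISSION_CRITICAL = GroupPolicy("mission-critical", timedelta(days=1), timedelta(days=90), 1)
GENERAL = GroupPolicy("general", timedelta(days=7), timedelta(days=30), 2)
```

One day after the last backup, `is_due` is true for the mission-critical group and false for the general group, which is the behavior the paragraph describes.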

When backups are executed, Avamar performs multiple verification layers to ensure data integrity. The client verifies its local data before transmission, and the server revalidates received data chunks against their corresponding hash values to detect any corruption or inconsistency. The system also utilizes checkpoint mechanisms that capture the grid’s operational state at regular intervals. These checkpoints serve as recovery markers in case of system interruptions or hardware failures. Understanding how these checkpoints interact with backup operations is a vital part of mastering Avamar’s operational intricacies.

One of the hallmarks of Avamar’s design is its ability to perform incremental forever backups. Unlike traditional systems that perform periodic full backups, Avamar conducts an initial full backup followed by continuous incremental backups thereafter. Since deduplication ensures that only new data chunks are stored, each incremental backup effectively behaves as a full backup in terms of recoverability. This approach minimizes backup windows and eliminates the need to repeatedly capture unchanged data. The incremental forever strategy exemplifies Avamar’s efficiency and aligns with enterprise demands for shorter recovery point objectives.
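Why every incremental behaves like a full backup becomes clearer with a toy model. In this sketch, each backup is recorded as an ordered list of chunk hashes (a "recipe") over a shared chunk store. The class `ChunkStore` and its methods are hypothetical, and SHA-256 is a stand-in hash.

```python
import hashlib


class ChunkStore:
    """Toy deduplicated store: chunks are kept once, backups are lists of hashes."""

    def __init__(self):
        self.chunks = {}   # hash -> chunk bytes
        self.backups = []  # one recipe (ordered list of hashes) per backup

    def backup(self, chunks: list[bytes]) -> int:
        """Record a backup and return how many chunks were actually new."""
        new = 0
        recipe = []
        for c in chunks:
            h = hashlib.sha256(c).hexdigest()
            if h not in self.chunks:
                self.chunks[h] = c
                new += 1
            recipe.append(h)
        self.backups.append(recipe)
        return new

    def restore(self, backup_id: int) -> bytes:
        """Rebuild the complete data set of any backup from its recipe."""
        return b"".join(self.chunks[h] for h in self.backups[backup_id])
```

Backing up `[b"A", b"B", b"C"]` and then `[b"A", b"X", b"C"]` stores only one new chunk on the second run, yet both backups restore in full (`b"ABC"` and `b"AXC"`) without replaying a chain of incrementals.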

Bandwidth management during backups is another core competency of Avamar. The system allows administrators to set bandwidth throttling policies that control data transfer rates during specific time windows. This feature prevents backup operations from saturating network links and ensures that business-critical applications retain priority access to bandwidth. Avamar’s ability to dynamically adjust bandwidth usage based on network conditions is especially beneficial in distributed or remote office environments. The E20-598 exam often explores this concept through scenario-based questions that require an understanding of bandwidth optimization strategies.
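The effect of a time-based throttle on backup duration is simple arithmetic, sketched below. The window, the rates, and the names `limit_at` and `transfer_seconds` are hypothetical values for illustration, not Avamar settings.

```python
from datetime import time

# (window start, window end, limit in Mbit/s); hypothetical example values
THROTTLE_WINDOWS = [
    (time(8, 0), time(18, 0), 10.0),  # business hours: leave room for applications
]
DEFAULT_LIMIT_MBPS = 100.0            # limit applied outside any listed window


def limit_at(t: time) -> float:
    """Return the bandwidth limit in Mbit/s that applies at time of day t."""
    for start, end, limit in THROTTLE_WINDOWS:
        if start <= t < end:
            return limit
    return DEFAULT_LIMIT_MBPS


def transfer_seconds(megabytes: float, t: time) -> float:
    """Estimate transfer time for a payload sent entirely at the limit for t."""
    return megabytes * 8 / limit_at(t)
```

Under these assumed numbers, 500 MB of unique data takes 400 seconds at 09:00 and 40 seconds at 22:00, which is why reducing the payload through deduplication matters most on throttled or slow links.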

In environments with large data repositories, Avamar’s client-side caches play an instrumental role in maintaining high performance. The hash cache keeps recently seen hash values close at hand on the client, so duplicate chunks can be identified quickly without querying the server’s index for every chunk. This caching significantly reduces lookup traffic and disk I/O and improves throughput. The exam evaluates knowledge of how the hash cache operates, how it is maintained, and how it influences backup speed and efficiency.
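The caching idea can be sketched as a small least-recently-used cache of hash values. The eviction policy, the capacity, and the class name `HashCache` are assumptions made for illustration; the text does not describe how Avamar sizes the cache or evicts entries.

```python
from collections import OrderedDict


class HashCache:
    """Toy LRU cache of chunk hashes; a hit means no full index lookup is needed."""

    def __init__(self, capacity: int):
        self.capacity = capacity
        self._entries = OrderedDict()  # hash -> True, oldest entry first
        self.hits = 0
        self.misses = 0

    def seen(self, digest: str) -> bool:
        """Return True on a cache hit; on a miss, remember the digest."""
        if digest in self._entries:
            self._entries.move_to_end(digest)  # mark as most recently used
            self.hits += 1
            return True
        self.misses += 1
        self._entries[digest] = True
        if len(self._entries) > self.capacity:
            self._entries.popitem(last=False)  # evict the least recently used
        return False
```

A miss in this sketch represents the slower path, where the full index would have to be consulted; the hit and miss counters make it easy to see how cache size affects the share of fast lookups.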

Data integrity within Avamar is further safeguarded through the use of cyclic redundancy checks and checksum verification. Each data chunk is accompanied by a checksum value that is recalculated and validated during every backup and restore operation. This ensures that even if hardware anomalies or disk corruption occur, the system can detect inconsistencies and take corrective actions. Candidates preparing for the E20-598 exam must be able to explain how Avamar verifies data accuracy and preserves integrity throughout the backup lifecycle.
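A checksum round trip can be shown with CRC-32 from Python’s standard `zlib` module. This is a generic sketch of the verification idea; the helper names `seal` and `verify` are hypothetical, and the text does not state which checksum Avamar applies to chunks.

```python
import zlib


def seal(chunk: bytes) -> tuple[bytes, int]:
    """Pair a chunk with its CRC-32 checksum at write time."""
    return chunk, zlib.crc32(chunk)


def verify(chunk: bytes, checksum: int) -> bool:
    """Recompute the checksum at read time and compare with the stored value."""
    return zlib.crc32(chunk) == checksum
```

If a stored chunk is altered after sealing, for instance `b"payload"` becoming `b"pazload"`, `verify` returns `False` and the system knows it must obtain the chunk from another copy rather than return corrupt data.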

The deduplication process extends beyond the boundaries of individual clients. Avamar performs global deduplication, meaning that data shared across multiple clients is stored only once within the grid. For example, if several virtual machines contain identical operating system files, Avamar recognizes these redundancies and eliminates duplicate storage. This global deduplication capability delivers extraordinary storage savings and simplifies capacity planning for administrators managing large enterprise environments.
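The storage effect of global deduplication can be worked through with a small calculation. The sketch below is illustrative: `dedup_summary` is a hypothetical helper, SHA-256 is a stand-in hash, and the chunk sizes are invented for the example.

```python
import hashlib


def dedup_summary(clients: dict[str, list[bytes]]) -> tuple[int, int]:
    """Return (logical bytes protected, bytes stored after global deduplication)."""
    unique = {}   # hash -> chunk size, shared across all clients
    logical = 0
    for chunks in clients.values():
        for c in chunks:
            logical += len(c)
            unique.setdefault(hashlib.sha256(c).hexdigest(), len(c))
    return logical, sum(unique.values())


# Three virtual machines sharing the same operating system chunks.
os_chunks = [b"K" * 100, b"L" * 100]
vms = {
    "vm1": os_chunks + [b"1" * 50],
    "vm2": os_chunks + [b"2" * 50],
    "vm3": os_chunks + [b"3" * 50],
}
```

Here `dedup_summary(vms)` reports 750 logical bytes but only 350 stored bytes, because the 200 bytes of shared operating system data are kept once rather than three times.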

In addition to traditional file system backups, Avamar supports application-aware backups through specialized plugins. These plugins integrate with enterprise applications such as Microsoft Exchange, SQL Server, and Oracle databases. They enable application-consistent backups by interacting with native APIs, ensuring that transactional data is captured coherently without disrupting ongoing operations. During backups, these plugins coordinate with the application to quiesce data, perform the backup, and then resume normal activities. This feature is essential for achieving consistency in databases and email systems, and it is a recurring focus of the E20-598 exam.

Avamar’s deduplication not only reduces storage requirements but also enhances scalability. Because only unique data is transmitted and stored, system growth occurs at a predictable rate, allowing administrators to forecast capacity expansions more accurately. Furthermore, deduplication reduces the burden on backup windows, enabling enterprises to complete backups within constrained timeframes even as data volumes expand. This scalability is one of the main reasons why Avamar remains a preferred choice for enterprise data protection.

The recovery process in Avamar is closely tied to its backup methodology. Since all data is stored as deduplicated chunks, restoration involves reassembling these chunks into their original structure. The Avamar server identifies which unique segments belong to a requested file or dataset and reconstructs it using the metadata stored in the catalog. Because of the deduplication mechanism, restores are often faster than with traditional systems, as Avamar retrieves only the necessary segments without redundancy. Administrators can initiate restores at the file, directory, or system level, depending on the recovery objectives.

Another remarkable attribute of Avamar’s backup engine is its ability to perform client-side encryption before data transmission. This ensures that sensitive data remains secure throughout the backup process, both in motion and at rest. Encryption keys are managed centrally through Avamar’s security framework, maintaining strict control over access and decryption privileges. The E20-598 certification exam evaluates a candidate’s understanding of these encryption mechanisms, emphasizing their importance in maintaining data confidentiality in compliance with organizational and regulatory standards.

One of the subtle yet powerful features of Avamar’s architecture is the concept of backup retention and expiration. Administrators define how long specific backups should be retained before they are marked for deletion. The system automatically enforces these policies through garbage collection routines that reclaim storage space occupied by expired data. This process runs seamlessly in the background, ensuring that the grid remains optimized and that valuable storage resources are continually recycled. Candidates studying for the E20-598 exam should understand how retention policies influence storage utilization and how to manage them effectively.
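The interaction between expiration and garbage collection can be sketched as a mark-and-sweep pass over a deduplicated store. The data layout and the function name `collect_garbage` are assumptions for illustration; this is not a description of Avamar’s internal routine.

```python
from datetime import date


def collect_garbage(backups: dict, chunk_store: dict, today: date) -> int:
    """Drop expired backups, then delete chunks no remaining backup references.

    backups maps a backup id to (expiry date, list of chunk hashes);
    chunk_store maps a chunk hash to its bytes. Returns the number of chunks freed.
    """
    expired = [bid for bid, (expires, _) in backups.items() if expires <= today]
    for bid in expired:
        del backups[bid]
    # Mark: every hash still referenced by a retained backup is live.
    live = {h for _, recipe in backups.values() for h in recipe}
    # Sweep: anything else can be reclaimed.
    dead = [h for h in chunk_store if h not in live]
    for h in dead:
        del chunk_store[h]
    return len(dead)
```

The key point is that expiring a backup does not free all of its chunks. A chunk shared with a retained backup stays in the store, so the space reclaimed by a retention change is often smaller than the logical size of the expired backups.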

Avamar’s integration with EMC Data Domain enhances its backup process by combining Avamar’s client-side deduplication with Data Domain’s target-side deduplication and scalability. In this hybrid model, Avamar handles the deduplication and metadata management, while Data Domain serves as the repository for the deduplicated data. This integration allows enterprises to extend their backup capacities without sacrificing performance. It also enables replication between Avamar and Data Domain systems, providing an additional layer of resilience and redundancy.

The efficiency of Avamar’s deduplication also has environmental benefits. By reducing the amount of data transmitted and stored, organizations lower their energy consumption and carbon footprint. Fewer disk drives are required, resulting in decreased power usage and cooling demands. This sustainability aspect underscores Avamar’s value not just as a technological asset but as a contributor to environmentally responsible data management practices.

Administrators utilizing Avamar must also be proficient in monitoring backup activities and interpreting operational reports. The Avamar Management Console provides a comprehensive view of ongoing backups, job statuses, and system utilization metrics. Through this interface, administrators can identify failed or incomplete jobs, analyze performance trends, and fine-tune backup schedules for improved efficiency. The exam often tests familiarity with these management tools, as they are indispensable for maintaining operational transparency and reliability.

Another vital element in Avamar’s operational structure is backup validation. After each backup, Avamar conducts validation checks to ensure that the data has been successfully captured and indexed. These validations help detect anomalies early, preventing potential restore failures in the future. Administrators can configure validation schedules and review the results through the management interface. Understanding this process and its significance is a recurring topic in the E20-598 certification objectives.

The deduplication process, while immensely beneficial, requires meticulous management to sustain performance over time. As the deduplication index grows, periodic maintenance activities such as index optimization and checkpoint validation become necessary. Avamar automates these routines to ensure the index remains efficient and responsive. Administrators must also monitor disk utilization to prevent capacity imbalances among storage nodes, as uneven distribution can lead to degraded performance.

Network efficiency within Avamar’s backup process is another domain that demands attention. Avamar supports multi-streaming, allowing multiple backup threads to run concurrently. This feature maximizes throughput, especially for large datasets or environments with numerous clients. Administrators can configure multi-streaming parameters based on client hardware capabilities and network bandwidth. Properly configured, multi-streaming can reduce backup durations dramatically while maintaining data integrity.
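The idea of bounded parallel streams can be sketched with Python’s standard thread pool. This is a generic illustration: `backup_stream` and `run_backup` are hypothetical names, the `max_streams` parameter stands in for whatever multi-streaming setting is configured, and hashing the data stands in for the real backup work.

```python
import hashlib
from concurrent.futures import ThreadPoolExecutor


def backup_stream(item: tuple[str, bytes]) -> tuple[str, str]:
    """Process one file in its own stream; hashing stands in for the backup work."""
    path, data = item
    return path, hashlib.sha256(data).hexdigest()


def run_backup(files: dict[str, bytes], max_streams: int) -> dict[str, str]:
    """Back up files using at most max_streams concurrent streams."""
    with ThreadPoolExecutor(max_workers=max_streams) as pool:
        return dict(pool.map(backup_stream, files.items()))
```

The result is the same whether one stream or several are used; only elapsed time changes. That property is what allows the stream count to be tuned to client CPU and network capacity without affecting data integrity.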

In distributed enterprises with remote offices, Avamar’s deduplication mechanism proves particularly advantageous. Remote offices can perform local backups that transmit only unique data to the central Avamar grid, minimizing WAN utilization. This feature enables centralized data protection without overwhelming the corporate network. For E20-598 candidates, understanding how Avamar optimizes distributed backups and manages remote clients is critical.

The sophistication of Avamar’s backup processes also extends into virtualization. Avamar’s integration with VMware environments allows administrators to perform image-level backups of virtual machines using the VMware vStorage APIs for Data Protection. This method captures the complete virtual machine image efficiently while still applying deduplication to minimize data redundancy. Avamar’s support for changed block tracking further accelerates incremental backups of virtual machines by identifying and protecting only modified disk blocks since the last backup.
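The benefit of changed block tracking can be illustrated by computing which blocks differ between two versions of a disk. Note the assumption: in a real deployment the hypervisor records changed blocks as writes occur, so no comparison is needed; this sketch derives the same list by comparison purely for illustration. The block size and the name `changed_blocks` are hypothetical.

```python
BLOCK_SIZE = 4  # bytes per block; tiny on purpose so the example is readable


def changed_blocks(previous: bytes, current: bytes) -> list[int]:
    """Return indexes of blocks that differ between two disk images."""
    longest = max(len(previous), len(current))
    count = (longest + BLOCK_SIZE - 1) // BLOCK_SIZE  # round up to whole blocks
    return [
        i
        for i in range(count)
        if previous[i * BLOCK_SIZE : (i + 1) * BLOCK_SIZE]
        != current[i * BLOCK_SIZE : (i + 1) * BLOCK_SIZE]
    ]
```

For `b"AAAABBBBCCCC"` changing to `b"AAAAXBBBCCCC"`, only block 1 is reported, so an incremental backup needs to read one block instead of scanning the whole image.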

Every element of Avamar’s backup workflow is orchestrated to achieve a balance between speed, security, and reliability. From the initial segmentation of data to the final storage of deduplicated chunks, each process contributes to the overall harmony of the system. The synergy between client and server, between hash calculations and deduplication indexing, and between backup scheduling and resource allocation epitomizes Avamar’s engineering precision.

Mastering the intricacies of Avamar’s backup and deduplication mechanisms requires not just theoretical understanding but an appreciation for how these processes interact in dynamic enterprise environments. The E20-598 certification challenges candidates to analyze these interactions, troubleshoot anomalies, and design backup strategies that embody efficiency and resilience. EMC Avamar remains a quintessential example of how intelligent architecture and meticulous design can transform the traditional paradigm of backup and recovery into a model of agility, scalability, and precision.

Avamar Recovery Operations and Disaster Management

The essence of any backup and recovery system lies not merely in the ability to preserve data but in the assurance that such data can be retrieved with precision, speed, and consistency when needed. EMC Avamar epitomizes this principle through its meticulously engineered recovery operations and disaster management framework, forming a crucial component of the knowledge evaluated in the E20-598 exam. Understanding Avamar’s recovery logic, mechanisms of verification, and methodologies for restoring systems across diverse environments is indispensable for storage administrators who aim to guarantee uninterrupted continuity of operations in the face of adversity.

Recovery within Avamar begins as an inversion of its backup logic. The deduplicated data chunks stored within the grid are reassembled into their original structures based on metadata references maintained in the Avamar database. When an administrator initiates a restore request, the Avamar server identifies the unique data objects corresponding to the requested files and orchestrates their retrieval from the storage nodes. The system reconstructs these chunks in memory and transmits them to the client system, where they are restored to their original location or an alternate path defined by the administrator. The entire process unfolds seamlessly, ensuring that the integrity and authenticity of the restored data remain uncompromised.

One of the defining strengths of Avamar’s recovery mechanism lies in its flexibility. It supports multiple types of restore operations, ranging from granular file-level recoveries to full system restorations. File-level restores allow users to recover specific documents, folders, or configurations without the need to rebuild entire systems. This feature proves invaluable in scenarios such as accidental file deletions or configuration corruption. On the other hand, full system restores facilitate complete reconstitution of operating systems, applications, and user data, often used in disaster recovery scenarios or hardware replacements. Avamar accommodates these varied needs through an intuitive interface that allows administrators to select the appropriate recovery scope.

The Avamar Management Console serves as the central hub for managing restore operations. Within this console, administrators can search for backup instances, browse recovery points, and initiate restoration tasks. The console provides chronological views of available backups, allowing users to select specific recovery timestamps that align with their business requirements. This temporal precision enables organizations to perform point-in-time recoveries, reverting systems to exact states preceding failures or corruption. Such functionality demonstrates Avamar’s capability to serve as both a reactive and preventive instrument in data resilience.

Integral to the recovery process is Avamar’s reliance on metadata. Each backup operation generates metadata that catalogs the structure, ownership, and attributes of every file and folder. This metadata is stored separately from the actual data chunks, ensuring that it remains accessible even if partial data corruption occurs. During a restore, Avamar references this metadata to reconstruct the logical file system hierarchy, ensuring that restored data mirrors its original form. For the E20-598 exam, understanding how metadata functions as the backbone of Avamar’s recovery operations is critical, as it directly influences the precision and speed of restorations.

Avamar’s recovery architecture also encompasses the concept of checkpoints. These checkpoints serve as internal snapshots of the Avamar grid’s operational state, created at predetermined intervals. In the event of system inconsistencies or hardware anomalies, administrators can roll back to a previous checkpoint, restoring the system to a stable and verified configuration. Each checkpoint captures essential details such as database records, storage indexes, and system configurations. During disaster recovery operations, these checkpoints act as vital lifelines, enabling administrators to reestablish system functionality rapidly without compromising data integrity.

Another vital feature underpinning Avamar’s recovery process is replication. Avamar supports bidirectional and unidirectional replication between multiple grids or domains. Replication ensures that data backed up in one location is securely mirrored to another, often geographically distant, site. This replication mechanism fortifies disaster recovery strategies by guaranteeing that even if one grid is compromised due to hardware failure, cyberattack, or natural disaster, the replicated site remains available for restoration. Administrators can configure replication schedules, select specific clients or datasets for replication, and monitor replication progress through the Avamar Management Console. The E20-598 exam frequently explores this topic, emphasizing the ability to design resilient replication policies that balance performance, bandwidth, and recovery objectives.

In a disaster recovery scenario, Avamar’s capabilities shine through its integration with enterprise continuity frameworks. Organizations typically define recovery time objectives (RTO) and recovery point objectives (RPO) to guide their resilience strategies. Avamar enables administrators to align backup frequencies, replication intervals, and retention policies with these business-defined objectives. For instance, critical systems with low tolerance for downtime can be configured for more frequent backups and near-continuous replication, while non-critical systems can follow less stringent schedules. Understanding how to configure Avamar to meet RTO and RPO requirements is an essential skill assessed in the certification exam.
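Checking a schedule against an RPO is a matter of simple arithmetic. The sketch below uses a deliberately simplified model, stated here as an assumption: the worst-case age of the newest offsite copy is taken to be the backup interval plus the replication lag. The function names are hypothetical.

```python
from datetime import timedelta


def worst_case_data_loss(backup_interval: timedelta,
                         replication_lag: timedelta = timedelta(0)) -> timedelta:
    """Simplified model: newest recoverable copy is at most this old."""
    return backup_interval + replication_lag


def meets_rpo(backup_interval: timedelta, rpo: timedelta,
              replication_lag: timedelta = timedelta(0)) -> bool:
    """True if the schedule keeps worst-case data loss within the RPO."""
    return worst_case_data_loss(backup_interval, replication_lag) <= rpo
```

Under this model, a nightly backup cannot satisfy a four-hour RPO, whereas a two-hour backup interval with one hour of replication lag can.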

Disaster recovery with Avamar often extends beyond mere data restoration; it encompasses system reconstruction. In large-scale environments, Avamar can be utilized to rebuild entire data centers after catastrophic events. Administrators can deploy Avamar servers at recovery sites and synchronize them with replicated data to restore operations seamlessly. This capability ensures operational continuity even when the primary infrastructure becomes inaccessible. The system’s deduplication feature accelerates these large-scale recoveries by minimizing the amount of data that must be transferred between sites.

Security remains a fundamental aspect of Avamar’s recovery architecture. During restore operations, data remains encrypted both in transit and at rest. Avamar’s encryption protocols prevent unauthorized access and maintain compliance with stringent security regulations. Administrators can control access to restore operations through role-based access control, ensuring that only authorized personnel can initiate or approve restorations. Additionally, audit logs capture every restore action performed within the system, providing traceability and accountability for compliance audits.

The efficiency of Avamar’s recovery processes is also enhanced by its integration with Data Domain. When Avamar operates in conjunction with Data Domain systems, restorations can be performed directly from the deduplicated storage repository without first rehydrating data. This eliminates redundant operations and reduces recovery times dramatically. The synergy between Avamar and Data Domain allows administrators to leverage the best attributes of both platforms—Avamar’s intelligent deduplication and Data Domain’s scalable storage capacity.

Performance optimization during restore operations is achieved through Avamar’s intelligent resource management. The system dynamically allocates resources such as CPU, memory, and bandwidth based on the scope and urgency of the restore task. Parallelism is utilized to retrieve multiple data chunks simultaneously from different storage nodes, expediting large-scale recoveries. Administrators can prioritize restore jobs, ensuring that critical systems are recovered before non-essential ones. This level of control and adaptability exemplifies the sophistication of Avamar’s recovery mechanisms and is a key area of focus for the E20-598 certification.

An often-overlooked but crucial aspect of Avamar recovery is the validation process. After each restoration, Avamar verifies that the recovered data matches the original backup in both content and structure. This validation process employs checksum comparisons to detect discrepancies or corruption. If inconsistencies are detected, Avamar automatically retries retrieval from alternate nodes or checkpoints. The validation mechanism not only guarantees data integrity but also reinforces user confidence in the reliability of the backup system.

Beyond standard recovery scenarios, Avamar provides specialized options for virtual environments. In VMware ecosystems, Avamar supports both image-level and file-level restores of virtual machines. Image-level recovery involves restoring an entire virtual machine to its previous operational state, while file-level recovery allows selective restoration of specific files within a virtual disk. The integration with VMware vCenter ensures seamless browsing of backups and one-click recovery of virtual machines. For Hyper-V environments, Avamar employs similar logic, using native APIs to achieve consistent and reliable restores. The ability to manage virtual environment recoveries efficiently is a recurring topic in the E20-598 examination.

Avamar’s integration with enterprise applications further extends its recovery versatility. Application-aware plugins for databases such as Oracle, SQL Server, and SAP HANA enable transactionally consistent restores. These plugins interact with native application tools to coordinate backup and restore processes, ensuring that all dependent files and transaction logs are synchronized. For instance, during a SQL Server restore, Avamar works with the database engine to reapply transaction logs and bring the database to a consistent state. The E20-598 exam evaluates understanding of these integrations, focusing on how administrators can leverage them for precise application recovery.

In addition to traditional on-premises restores, Avamar supports cloud-based disaster recovery. The Avamar Virtual Edition can be deployed in cloud environments, allowing organizations to restore data directly into virtual machines hosted in the cloud. This feature provides flexibility in disaster recovery planning, enabling hybrid recovery strategies that combine on-premises infrastructure with cloud scalability. Administrators can restore workloads in the cloud temporarily while rebuilding on-premises systems, ensuring minimal disruption to business operations.

The architecture of Avamar’s recovery process also incorporates checkpoint and rollback mechanisms at both the system and client levels. In cases of data corruption or failed restores, administrators can revert the Avamar server to a previous checkpoint, effectively undoing changes that led to instability. Similarly, client systems can utilize rollback features to restore previous configurations without affecting broader backup sets. These capabilities enhance resilience by providing multiple layers of fail-safes within the recovery workflow.

Disaster management with Avamar extends beyond reactive recovery; it involves continuous preparation and proactive testing. Administrators are encouraged to perform regular recovery drills using Avamar’s test restore functionality. Test restores simulate full recovery scenarios without disrupting production systems, validating that backups are restorable and that recovery objectives can be met. These exercises are critical for verifying disaster readiness and are a recommended practice emphasized in the certification exam.

In multi-site organizations, Avamar’s disaster management framework supports cross-domain recovery. Administrators can perform restores from replicated data across different grids, allowing flexible recovery strategies that span multiple locations. This feature ensures business continuity even if regional disruptions occur. Cross-domain recovery also simplifies migration and system upgrades, as data can be restored directly into new environments without manual intervention.

Monitoring recovery operations is facilitated through detailed reporting and alert mechanisms. The Avamar Management Console provides dashboards that display restoration progress, performance metrics, and potential errors. Administrators can generate historical reports to analyze recovery trends and identify recurring issues. Alerts can be configured to notify administrators of failed or delayed restore tasks, enabling prompt remediation. The E20-598 exam tests familiarity with these monitoring tools and the ability to interpret recovery reports effectively.

Avamar’s resilience is further reinforced by its self-healing properties. During recovery, if the system detects damaged or inaccessible data chunks, it automatically retrieves redundant copies from alternate storage nodes or replicated sites. This self-repair capability ensures uninterrupted recovery operations and exemplifies Avamar’s engineering reliability. Additionally, the system’s distributed grid architecture prevents single points of failure, ensuring that even partial node losses do not compromise restoration performance.

The overarching philosophy behind Avamar’s disaster management lies in harmonizing speed, precision, and foresight. Recovery operations are not treated as isolated responses but as integral components of a continuous data protection lifecycle. Each backup is inherently structured for recoverability, each checkpoint embodies a safeguard against unpredictability, and each replication policy serves as a bridge between resilience and business assurance. For administrators pursuing the E20-598 certification, mastering these interdependencies means mastering not only a technology but a philosophy of data stewardship.

In the grand landscape of enterprise data recovery, EMC Avamar distinguishes itself through its commitment to dependability and its capacity to transform adversity into continuity. Every process, from metadata reconstruction to cross-domain replication, is designed so that when systems falter, restoration is fast and complete. Through intelligent design and relentless attention to detail, Avamar has redefined what recovery means, going beyond mere restoration to become a model of digital resilience in an unpredictable technological world.

Avamar Management, Administration, and Performance Optimization

Efficient management and administration form the cornerstone of any enterprise data protection solution, and within EMC Avamar, these disciplines are elevated to an intricate symphony of precision, automation, and foresight. Understanding how to effectively manage, monitor, and optimize an Avamar environment is fundamental for success in the E20-598 exam. The architecture of Avamar, with its sophisticated data deduplication, scalable grid infrastructure, and policy-driven automation, demands a level of administrative acumen that ensures both reliability and high performance across complex and distributed storage ecosystems.

At its foundation, Avamar’s administrative model revolves around centralized control through the Avamar Administrator console. This interface serves as the operational nucleus for configuring policies, monitoring system health, and performing maintenance tasks. Administrators utilize it to define client groups, assign backup schedules, and oversee the performance of the grid. Every interaction within the Avamar environment is reflected through this management console, offering a transparent and granular view of operations. Understanding the console’s interface, navigation, and functions is not merely a technical requirement but a strategic necessity for mastering Avamar’s management discipline.

The structure of client management within Avamar is hierarchical. Clients—representing the systems and applications being protected—are organized into domains and groups. Each domain can encompass multiple clients sharing similar characteristics or requirements. Groups, in turn, are assigned specific datasets, policies, and schedules. For instance, a group consisting of database servers may be configured to perform nightly incremental backups with retention policies extending to thirty days, while another group for end-user systems might employ weekly backups with shorter retention windows. This hierarchical organization not only simplifies management but also ensures that backup operations are executed according to business priorities.

Policies represent the logic of automation in Avamar administration. Each policy governs how, when, and where backups occur, as well as how long they are retained. Policies define schedules, retention periods, and data encryption requirements. Administrators can create multiple policies tailored to different operational requirements, ensuring flexibility in managing diverse workloads. The policy framework also encompasses blackout windows—specific time intervals during which backup operations are suspended to avoid network congestion or interference with business-critical processes.
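To make the blackout-window idea concrete, the following sketch checks whether a given time of day falls inside a window during which backups should not start. The function name `in_blackout` and the sample window times are assumptions for illustration; Avamar configures these windows through its own interface rather than through code like this.

```python
from datetime import time


def in_blackout(now: time, start: time, end: time) -> bool:
    # A window such as 08:00-18:00 lies within a single day.
    if start <= end:
        return start <= now < end
    # A window such as 22:00-02:00 wraps past midnight.
    return now >= start or now < end


# A backup requested at 09:30 falls inside an 08:00-18:00 blackout window.
print(in_blackout(time(9, 30), time(8, 0), time(18, 0)))
```

The second branch matters in practice because windows are often defined across midnight, and a naive comparison would treat such a window as empty.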

Scheduling within Avamar is designed with adaptability in mind. Backup schedules can be configured to run at fixed times, during off-peak hours, or triggered by external events. Avamar’s scheduling engine takes into account system load, network availability, and client readiness to ensure optimal performance. This intelligent scheduling minimizes contention for system resources, maintaining balance across all backup jobs. For the E20-598 exam, understanding how to configure and optimize backup schedules based on organizational demands and system capacity is a vital skill.

Monitoring and reporting are integral to Avamar administration. The Avamar Administrator console provides dashboards that track the health and performance of the grid. Administrators can view metrics such as deduplication ratios, storage utilization, client activity, and backup success rates. Each backup job generates logs that detail its execution status, duration, and any anomalies encountered. These logs serve as diagnostic tools during troubleshooting, allowing administrators to identify failed jobs, analyze root causes, and implement corrective measures. The system’s reporting module can generate customized reports for auditing, compliance, and performance evaluation.

Avamar’s grid architecture requires careful oversight to maintain operational equilibrium. Each node within the grid performs a specific role, such as storage or utility (management) functions. The utility node hosts the management services and coordinates communication across the grid, while storage nodes hold the deduplicated data. Performance tuning often involves balancing workloads among nodes to prevent bottlenecks. Administrators can redistribute clients, adjust node assignments, or modify network configurations to maintain even utilization. Load balancing is not a one-time configuration but an ongoing administrative discipline, ensuring that each node contributes proportionally to system performance.

One of the most critical tasks in Avamar administration is capacity planning. As data volumes expand, storage capacity must be managed meticulously to prevent saturation. Avamar provides tools to monitor disk usage trends, deduplication effectiveness, and growth projections. Administrators can use these insights to forecast when additional capacity will be required. When storage expansion becomes necessary, new nodes can be seamlessly integrated into the grid. The system automatically redistributes data across available nodes, ensuring uniform load distribution. Understanding how to anticipate capacity requirements and execute expansion without service disruption is a core topic in the E20-598 certification.
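The forecasting step can be illustrated with a simple linear projection. This is a rough sketch under stated assumptions: it takes one usage sample per day, assumes growth stays constant, and uses an 80% warning threshold chosen only for the example. The function `days_until_threshold` is hypothetical and does not correspond to any Avamar tool.

```python
from typing import Optional, Sequence


def days_until_threshold(used_tb: Sequence[float], capacity_tb: float,
                         threshold: float = 0.8) -> Optional[float]:
    # Need at least two daily samples to estimate growth.
    if len(used_tb) < 2:
        return None
    # Average daily growth between the first and last sample.
    daily_growth = (used_tb[-1] - used_tb[0]) / (len(used_tb) - 1)
    if daily_growth <= 0:
        return None  # flat or shrinking usage: no projected date
    remaining = capacity_tb * threshold - used_tb[-1]
    # Already past the threshold counts as zero days remaining.
    return max(remaining, 0.0) / daily_growth


print(days_until_threshold([10, 11, 12, 13], capacity_tb=20))
```

Real growth is rarely linear, and deduplication ratios shift as new data types are added, so a projection like this is best treated as a first estimate to be refined with the system's own trend reports.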

Performance optimization within Avamar extends beyond hardware considerations; it involves fine-tuning system parameters to enhance efficiency. Administrators can adjust deduplication cache sizes, modify client concurrency levels, and configure network throttling to achieve optimal throughput. Deduplication performance, for instance, can be improved by ensuring that the hash cache size aligns with available system memory, reducing disk I/O operations. Similarly, client concurrency settings determine how many backup streams can run simultaneously, impacting both speed and resource utilization. Achieving harmony between these parameters requires deep comprehension of Avamar’s operational dynamics.

Network performance plays a decisive role in Avamar’s overall efficiency. Since backup and restore operations rely heavily on data transmission, network latency and bandwidth availability directly influence completion times. Avamar’s network throttling capabilities allow administrators to manage bandwidth consumption during peak business hours. Additionally, by leveraging client-side deduplication, Avamar significantly reduces the amount of data sent over the network, optimizing performance even in bandwidth-constrained environments. For the E20-598 exam, candidates must demonstrate proficiency in designing network configurations that accommodate both local and remote backups efficiently.
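The bandwidth savings from client-side deduplication follow from a simple idea: hash each piece of data on the client and transmit only pieces the server has not already seen. The sketch below is a deliberate simplification and not Avamar's implementation; it uses fixed-size chunks, SHA-256, and an in-memory set standing in for the server's index, all of which are assumptions made to keep the example short.

```python
import hashlib
from typing import List, Set


def chunks_to_send(data: bytes, known_hashes: Set[str], chunk_size: int = 4) -> List[bytes]:
    # Split the data into fixed-size chunks (a simplification for illustration).
    to_send = []
    for offset in range(0, len(data), chunk_size):
        chunk = data[offset:offset + chunk_size]
        digest = hashlib.sha256(chunk).hexdigest()
        # Only chunks whose hash is not yet known need to cross the network.
        if digest not in known_hashes:
            known_hashes.add(digest)
            to_send.append(chunk)
    return to_send


server_index: Set[str] = set()
first = chunks_to_send(b"AAAABBBBAAAA", server_index)
second = chunks_to_send(b"AAAABBBBCCCC", server_index)
print(len(first), len(second))
```

In the second call only the new chunk is selected for transmission, which mirrors why subsequent backups of largely unchanged data consume far less bandwidth than the first.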

Maintenance tasks are the unseen backbone of sustained Avamar performance. Regular maintenance includes garbage collection, checkpoint creation, and checkpoint validation, which is performed by the hfscheck operation. Garbage collection reclaims space from expired backups, ensuring that the system remains free from obsolete data. A checkpoint captures a consistent, point-in-time copy of the system’s internal state that can be used for rollback, while hfscheck validates that checkpoint by verifying the integrity of the hash filesystem (HFS) data on each node. These tasks are automated but can also be manually triggered when necessary. Neglecting maintenance routines can lead to performance degradation and potential data integrity issues, making their mastery essential for certified administrators.
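The first step of reclaiming space, deciding which backups have passed their retention period, can be sketched as follows. The tuple layout, backup identifiers, and dates are hypothetical; the actual garbage collection process works on deduplicated data inside the server and is considerably more involved.

```python
from datetime import date, timedelta
from typing import List, Tuple


def expired_backups(backups: List[Tuple[str, date, int]], today: date) -> List[str]:
    # Each entry is (backup_id, creation_date, retention_days).
    return [
        backup_id
        for backup_id, created, retention_days in backups
        if created + timedelta(days=retention_days) < today
    ]


catalog = [
    ("b1", date(2024, 1, 1), 30),  # expires 2024-01-31
    ("b2", date(2024, 2, 1), 30),  # expires 2024-03-02
]
print(expired_backups(catalog, today=date(2024, 2, 15)))
```

With deduplication, expiring a backup frees space only for data that no other retained backup still references, which is why the amount reclaimed is often smaller than the logical size of the expired backups.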

User management within Avamar is tightly coupled with security controls. Access to administrative functions is governed by role-based access control, ensuring that only authorized users can modify configurations or initiate critical operations. Roles can range from basic operators, who monitor jobs, to full administrators with unrestricted privileges. This segregation of duties supports compliance with security frameworks such as ISO and NIST. Authentication is integrated with enterprise directory services like LDAP, allowing seamless user provisioning and centralized management. Understanding these access control mechanisms is pivotal for ensuring secure administration practices.
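Role-based access control reduces to a mapping from roles to permitted actions. The sketch below assumes two roles and a handful of action names invented for the example; they are not Avamar's actual role or privilege identifiers.

```python
from typing import Dict, Set

# Hypothetical roles and actions, for illustration only.
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "operator": {"view_jobs"},
    "administrator": {"view_jobs", "edit_policy", "start_restore"},
}


def is_allowed(role: str, action: str) -> bool:
    # Unknown roles receive no permissions at all.
    return action in ROLE_PERMISSIONS.get(role, set())


print(is_allowed("operator", "edit_policy"))
```

Defaulting unknown roles to an empty permission set reflects the deny-by-default posture that segregation of duties depends on.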

Security in Avamar administration extends to encryption management. Data encryption is configurable at both the client and server levels, ensuring protection in transit and at rest. Administrators must handle encryption keys with utmost diligence, as key loss can render encrypted backups unrecoverable. Avamar employs AES-256 encryption, balancing robust security with minimal performance overhead. Additionally, SSL/TLS protocols safeguard communication between clients and the Avamar server. In regulated industries where compliance is paramount, proper encryption configuration and key management are indispensable skills tested in the certification exam.

Replication management forms another dimension of Avamar administration. Administrators can configure replication between multiple Avamar grids or between Avamar and Data Domain systems. Replication policies dictate which datasets are copied, the replication frequency, and bandwidth limitations. Monitoring replication performance ensures that secondary sites remain synchronized and ready for disaster recovery. Administrators must also manage retention and expiration independently on replicated systems to maintain compliance and optimize storage utilization. This aspect of replication administration reflects Avamar’s holistic approach to business continuity planning.

Performance optimization is not confined to system-level parameters; it extends to client-side configurations as well. Clients running on different operating systems may exhibit unique performance characteristics, requiring tailored adjustments. For example, Linux clients may benefit from tuning network buffers, while Windows clients may require adjustments to VSS snapshot behavior. Administrators can analyze client logs to detect bottlenecks, adjust backup sets, or reschedule operations to minimize contention. Mastery of cross-platform optimization techniques is a key competency evaluated in the E20-598 exam.

The Avamar system’s internal database, known as the Management Console Server (MCS) database, plays a crucial role in coordinating and tracking all backup and restore activities. Regular database maintenance keeps it responsive and stable. Administrators should perform periodic database compaction, index optimization, and backup of the MCS database itself. These tasks prevent fragmentation and improve query performance. The exam often explores these maintenance activities, emphasizing the relationship between database health and overall system efficiency.

System alerts and notifications serve as the early warning mechanism within Avamar’s administrative framework. Administrators can configure alerts for conditions such as low disk capacity, node failures, or job failures. These alerts can be delivered through email, SNMP traps, or integration with third-party monitoring tools. Proactive alert management allows administrators to address issues before they escalate into service disruptions. The ability to interpret alerts and execute remedial actions forms part of the situational analysis scenarios featured in the E20-598 assessment.
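The three alert conditions named above can be expressed as simple threshold checks. This is a minimal sketch: the metric names, the 80% capacity threshold, and the alert labels are assumptions, and delivery through email or SNMP is deliberately left out.

```python
from typing import Dict, List


def evaluate_alerts(metrics: Dict[str, float], capacity_warn: float = 0.8) -> List[str]:
    alerts = []
    # Capacity alert: used space as a fraction of total capacity.
    if metrics["used_tb"] / metrics["capacity_tb"] >= capacity_warn:
        alerts.append("low disk capacity")
    if metrics["nodes_offline"] > 0:
        alerts.append("node failure")
    if metrics["failed_jobs"] > 0:
        alerts.append("job failure")
    return alerts


sample = {"used_tb": 9, "capacity_tb": 10, "nodes_offline": 0, "failed_jobs": 2}
print(evaluate_alerts(sample))
```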

Troubleshooting within Avamar demands both analytical and procedural expertise. Common issues such as failed backups, slow performance, or communication errors can stem from network anomalies, misconfigurations, or hardware degradation. Administrators employ diagnostic tools such as log retrieval utilities, system logs, and command-line utilities to investigate anomalies. Each error message in Avamar is accompanied by a unique identifier, allowing precise correlation with known solutions in the Avamar knowledge base. Mastery of troubleshooting workflows ensures swift resolution and minimal downtime, underscoring the administrator’s role as the custodian of reliability.

Automation enhances administrative efficiency within Avamar. Through scheduled maintenance tasks and, where needed, scripting, repetitive operations can be executed without manual intervention. Administrators can automate report generation, policy enforcement, and backup verification tasks. Automation not only reduces human error but also ensures consistent adherence to organizational policies. Although standard operations do not require custom scripting, Avamar supports integration with automation frameworks through APIs, allowing orchestration within broader IT ecosystems.

Monitoring performance trends over time enables predictive management. By analyzing historical data, administrators can identify patterns such as gradual performance degradation, deduplication efficiency changes, or storage consumption anomalies. Predictive analytics empower administrators to take preventive measures—such as expanding capacity, optimizing schedules, or fine-tuning deduplication parameters—before problems impact production. The E20-598 exam places emphasis on the analytical aspect of management, testing candidates’ ability to interpret performance metrics and formulate optimization strategies.
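One common way to spot gradual degradation is to smooth a metric with a moving average and compare the latest smoothed value against the earliest. The sketch below applies this to a series of deduplication percentages; the function names, the window of three samples, and the 2% tolerance are assumptions chosen for the example.

```python
from typing import List, Sequence


def moving_average(values: Sequence[float], window: int) -> List[float]:
    # Average each run of `window` consecutive samples.
    return [
        sum(values[i - window + 1:i + 1]) / window
        for i in range(window - 1, len(values))
    ]


def is_degrading(values: Sequence[float], window: int = 3, tolerance: float = 0.02) -> bool:
    smoothed = moving_average(values, window)
    # Flag a drop of more than `tolerance` relative to the first smoothed value.
    return len(smoothed) >= 2 and smoothed[-1] < smoothed[0] * (1 - tolerance)


dedup_percent = [99.0, 99.0, 99.0, 97.0, 95.0, 93.0]
print(is_degrading(dedup_percent))
```

Smoothing first avoids reacting to a single unusual night, so that only a sustained decline triggers investigation.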

Backup validation, an essential administrative function, ensures that every backup stored within the Avamar grid remains recoverable. Validation routines periodically verify the integrity of backup data and metadata associations. If discrepancies are found, Avamar initiates self-healing actions, retrieving redundant copies from other nodes or triggering reindexing. These processes maintain the reliability of the backup repository, preventing corruption from propagating undetected. Administrators must understand the scheduling and execution of validation routines to guarantee long-term data fidelity.
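The integrity check at the heart of validation can be sketched as recomputing a digest for each stored piece of data and comparing it with the digest recorded when the data was written. The example below is illustrative only: the dictionary standing in for the repository, the use of SHA-256, and the `find_corrupt` function are assumptions, and the self-healing step is not modeled.

```python
import hashlib
from typing import Dict, List


def find_corrupt(store: Dict[str, bytes]) -> List[str]:
    # `store` maps the digest recorded at write time to the bytes currently held.
    return sorted(
        recorded
        for recorded, data in store.items()
        if hashlib.sha256(data).hexdigest() != recorded
    )


good = b"payload"
repository = {
    hashlib.sha256(good).hexdigest(): good,  # intact entry
    "deadbeef": b"tampered",                 # recorded digest no longer matches
}
print(find_corrupt(repository))
```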

Patch management and software updates constitute another administrative responsibility. Avamar updates often include performance enhancements, security fixes, and feature improvements. Administrators must plan and execute updates systematically, ensuring compatibility with existing infrastructure. Updates typically follow a phased deployment approach, beginning with non-production environments before full-scale implementation. Backup of configuration files and databases before upgrades is a best practice, ensuring rollback capability in case of unexpected issues. The certification exam evaluates understanding of update methodologies, emphasizing risk mitigation and procedural rigor.

In complex enterprise ecosystems, Avamar often coexists with other EMC technologies such as NetWorker and Data Domain. Administrators must manage interoperability among these platforms to ensure cohesive data protection strategies. Avamar’s integration with Data Domain enables tiered deduplication, while coordination with NetWorker facilitates centralized management across hybrid environments. Administrators can consolidate reporting, unify policies, and synchronize retention across systems. This multi-platform awareness forms an advanced competency that distinguishes proficient administrators from novice operators.

Every administrative decision within Avamar—be it policy design, replication configuration, or capacity expansion—reflects a balance between performance, reliability, and compliance. Effective management requires not only technical precision but also strategic foresight, anticipating the evolving needs of enterprise data landscapes. EMC Avamar’s administrative framework, with its emphasis on automation, intelligence, and resilience, empowers organizations to transform data protection into a seamless and adaptive process. For candidates pursuing the E20-598 certification, mastering these facets of administration signifies a holistic grasp of not just technology but the enduring principles of operational excellence in modern data management.

Advanced Troubleshooting, Integration, and Optimization Techniques

In enterprise environments, data protection is only as effective as the administrator’s ability to troubleshoot, integrate, and optimize the backup and recovery ecosystem. EMC Avamar, with its sophisticated architecture, deduplication mechanisms, and scalable grid infrastructure, demands a deep understanding of advanced operational techniques. Mastery of these capabilities is essential for success in the E20-598 exam, as candidates are expected not only to configure and manage Avamar but also to resolve complex challenges, integrate with other technologies, and enhance performance under demanding conditions.