Certification: EMCIE Avamar

Certification Full Name: EMC Implementation Engineer Avamar

Certification Provider: EMC

Exam Code: E20-594

Exam Name: Backup and Recovery - Avamar Specialist for Implementation Engineers

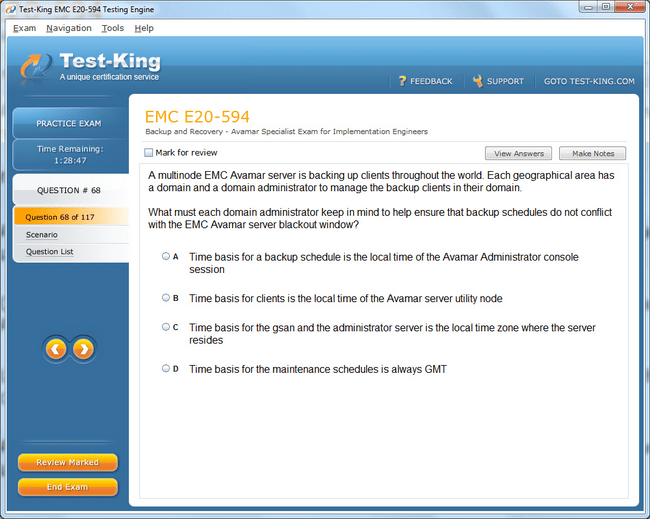

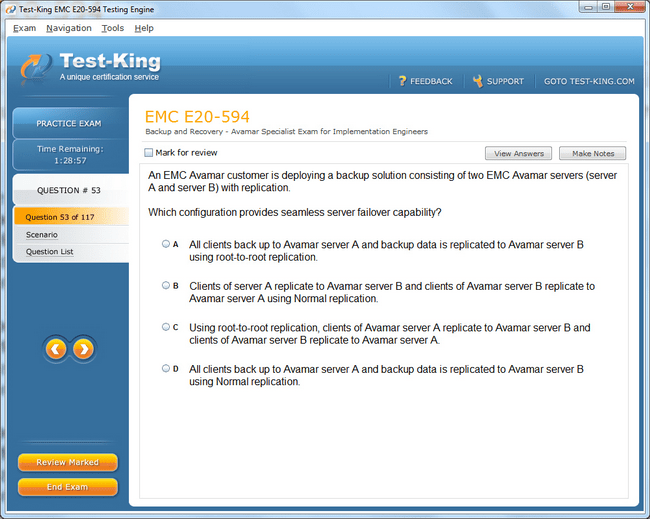

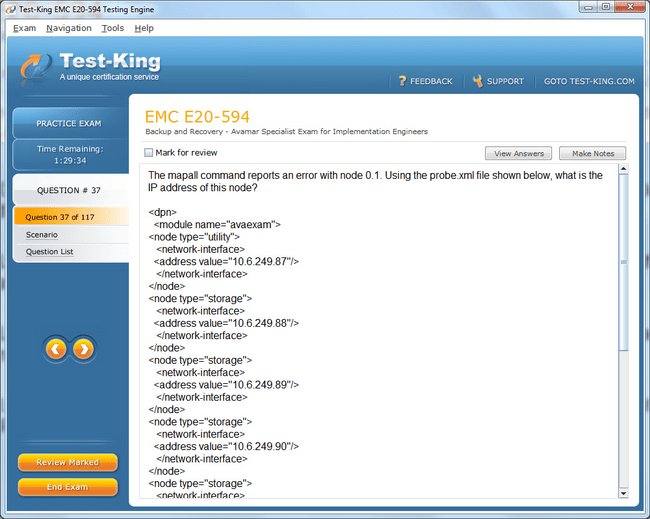

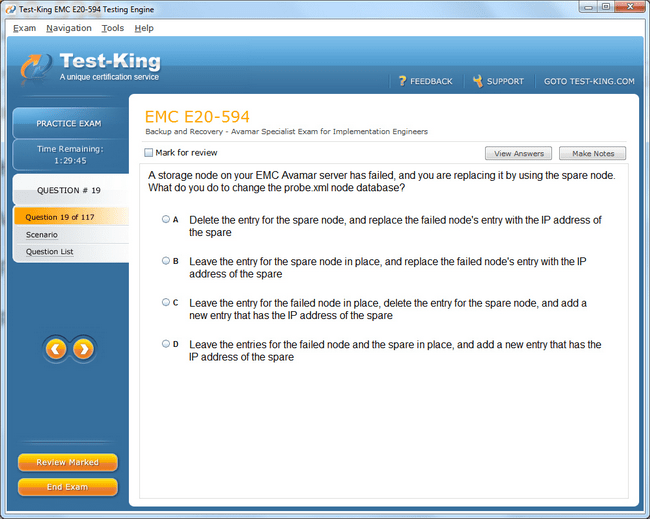

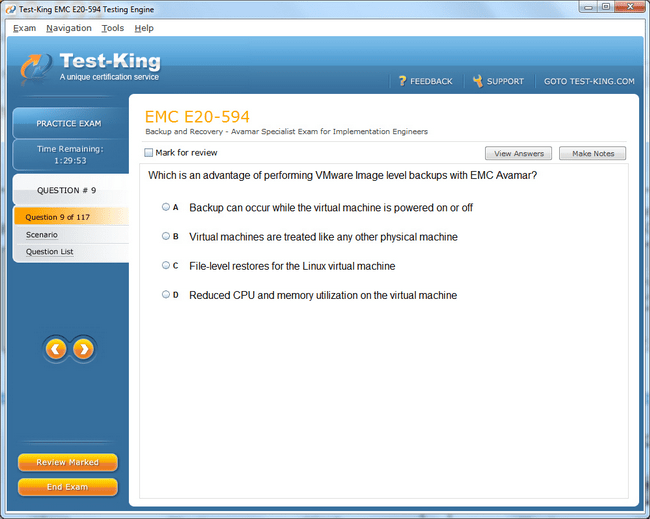

E20-594 Exam Product Screenshots

Why EMCIE Avamar Certification is a Game-Changer for Data Backup Professionals

In the fast-moving field of data storage and protection, professionals look for ways to distinguish themselves and validate their expertise. Among the many certifications available, the EMC Implementation Engineer (EMCIE) Avamar credential is a recognized benchmark of technical proficiency and practical knowledge in enterprise backup. It is aimed at engineers who want to demonstrate a solid understanding of Avamar architecture, deployment, and operational tuning in complex IT environments. Earning it signals both technical skill and a commitment to keeping organizational data intact, available, and secure.

Introduction to EMCIE Avamar Certification

The EMCIE Avamar certification is a meaningful milestone for professionals working in enterprise storage. Its curriculum balances theory with hands-on practice. Candidates study data deduplication, backup performance tuning, and disaster recovery planning. These skills are especially valuable in enterprises where growing data volumes and regulatory requirements demand careful storage management.

Understanding Avamar Technology

Avamar is a backup and recovery platform built around deduplication, which reduces storage requirements and shortens backups. Deduplication identifies redundant data and stores each unique piece only once. As a result, content repeated across files, clients, and successive backups consumes disk space just once. Avamar performs much of this work at the source, on the client, so less data also has to cross the network. EMCIE Avamar certification teaches professionals to apply these mechanisms well, so that enterprises can protect larger datasets without a proportional increase in storage.
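
The deduplication idea can be illustrated with a minimal Python sketch of a single-instance store. It assumes fixed-size 4 KiB chunks and SHA-256 fingerprints purely for illustration. This is not Avamar's actual chunking or hashing scheme, and `ChunkStore` is a hypothetical name.

```python
import hashlib

CHUNK_SIZE = 4096  # hypothetical fixed chunk size, chosen only for illustration


class ChunkStore:
    """Toy single-instance store: each unique chunk is kept once, keyed by its hash."""

    def __init__(self) -> None:
        self.chunks: dict[str, bytes] = {}  # fingerprint -> chunk bytes
        self.logical_bytes = 0              # total bytes presented for backup

    def backup(self, data: bytes) -> list[str]:
        """Split data into chunks, store unseen ones, and return the ordered fingerprints."""
        recipe = []
        for i in range(0, len(data), CHUNK_SIZE):
            chunk = data[i:i + CHUNK_SIZE]
            digest = hashlib.sha256(chunk).hexdigest()
            self.chunks.setdefault(digest, chunk)  # a duplicate chunk is not stored again
            recipe.append(digest)
        self.logical_bytes += len(data)
        return recipe

    def restore(self, recipe: list[str]) -> bytes:
        """Reassemble the original data from its fingerprints."""
        return b"".join(self.chunks[d] for d in recipe)

    def physical_bytes(self) -> int:
        return sum(len(c) for c in self.chunks.values())


store = ChunkStore()
data = b"A" * 8192 + b"B" * 4096   # two identical "A" chunks and one "B" chunk
r1 = store.backup(data)
r2 = store.backup(data)            # an unchanged second backup adds no new chunks
print(store.logical_bytes, store.physical_bytes())  # 24576 8192
```

The two backups present 24 KiB of logical data but occupy only 8 KiB of physical chunks. The repeated "A" chunk and the entire second backup add nothing new to the store.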

The certification also covers the structure of an Avamar deployment. This includes how the Avamar server's nodes (in a multi-node system, a utility node and several storage nodes) work together and with the client systems being protected. Professionals learn to navigate the architecture, configure backup policies, and monitor performance metrics. This understanding is especially important in distributed environments, where backup efficiency directly affects operational continuity and business resilience.

Essential Skills for Data Backup Professionals

The EMC Implementation Engineer Avamar credential builds skills that go well beyond routine operations. Certified engineers learn to design backup solutions for the specific demands of an enterprise's infrastructure. That work covers storage strategies that make the most of deduplication, integration of Avamar with existing storage networks, and policies that support rapid recovery after system failures.

The certification also emphasizes troubleshooting. Engineers learn to find bottlenecks in backup operations, diagnose errors, and apply fixes with minimal disruption to running workloads. The hands-on practice involved builds an analytical habit that helps professionals anticipate problems and work out solutions to complex data protection scenarios.

Beyond technical skills, the certification develops a strategic view of data governance. Certified engineers understand why compliance requirements, regulatory mandates, and retention policies matter. They can design backup solutions that improve efficiency while reducing the risk of data loss, corruption, or unauthorized access.

Career Advancement Opportunities

The certification can also shape a backup engineer's career. Organizations value certified professionals who can manage sophisticated backup environments independently. That recognition can lead to faster promotion, access to high-profile projects, and opportunities to lead data protection initiatives.

Demand for certified engineers is particularly strong in sectors where data integrity is paramount, such as finance, healthcare, and government. Holders of the EMCIE Avamar credential are sought for roles involving enterprise backup, disaster recovery planning, and system optimization. The certification gives employers and clients a concrete measure of a professional's ability to manage large, sensitive data environments.

Certification can also improve salary prospects, since specialized knowledge and practical experience tend to command higher pay. The credential may help with mobility as well, because enterprises in different regions recognize the same standardized exam.

Preparing for Certification and Practical Insights

Earning EMCIE Avamar certification requires dedicated study and hands-on practice. Candidates must become familiar with Avamar architecture, client-server interactions, and backup configuration. A typical preparation plan combines formal training courses, self-paced study, and lab exercises that simulate real backup scenarios. Working across varied environments reinforces theoretical knowledge through practice.

A detailed understanding of system performance is essential, including throughput, storage allocation, and error diagnostics. Attention to detail pays off, because even a minor misconfiguration can compromise backup integrity. Troubleshooting exercises also build the adaptability engineers need when unexpected problems arise in enterprise deployments.

Case studies of Avamar implementations in different organizations are another useful resource. Some show engineers improving backup efficiency, preventing data loss, or streamlining recovery. Examining them gives candidates practical insight into the real-world impact of Avamar knowledge and shows why backup operations need strategic planning.

Strategic Importance in Modern IT Infrastructure

The certification becomes more relevant as IT infrastructure grows more complex. Many enterprises now run heterogeneous environments that mix physical, virtual, and cloud-based systems. Certified backup professionals are equipped to provide consistent, reliable data protection across these hybrid platforms.

Certified engineers contribute to organizational resilience as well as operational efficiency. They implement sound backup policies, optimize storage use, and coordinate disaster recovery plans. These measures limit potential losses and enable rapid recovery from incidents. Their expertise underpins business continuity, protects mission-critical information, and informs strategic decisions.

The credential also encourages continuous learning. Professionals with Avamar expertise are well placed to evaluate emerging technologies, automate backup workflows, and adopt new tools that strengthen data protection. This helps enterprises stay agile as data management challenges evolve.

Expanding Professional Horizons

Certification also opens doors to collaboration. Certified professionals often work with cross-functional teams, share insights with peers, and contribute to knowledge transfer. The credential is a foundation for advanced EMC certifications or related qualifications in storage and cloud technologies. Professionals can use it to extend their core Avamar skills into complementary areas and become more versatile within the IT organization.

The credential's standing rests on its rigorous standards and industry recognition. Certified engineers are seen as reliable custodians of organizational data who can implement efficient backup systems that follow best practices and meet compliance requirements. That credibility builds trust and can lead to leadership roles, consulting work, and involvement in strategic IT projects.

Mastering the Core of Avamar Technology

The EMC Implementation Engineer Avamar credential develops a thorough understanding of enterprise backup systems, so that professionals can work confidently in complex storage environments. Central to this is Avamar's architecture, which combines client systems, server nodes, and back-end storage into a single framework designed for deduplication and efficient recovery. Certified professionals learn to design backup solutions that balance performance, reliability, and resource use. This allows enterprises to manage fast-growing datasets with minimal overhead.

A key part of this mastery is deduplication. Deduplication reduces redundancy by identifying duplicate blocks of data across systems and storing only a single instance, which can cut storage consumption dramatically. Because less data is written and transferred, it also shortens backup operations. EMCIE Avamar professionals learn to apply deduplication in ways that improve efficiency while preserving data integrity, so that protected data remains recoverable when it is needed.
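
The sketch below shows deduplication across several systems and adds an integrity check on restore. It is a hypothetical illustration. The client names, the 4 KiB chunk size, and the use of SHA-256 fingerprints are assumptions, not Avamar internals.

```python
import hashlib


def split(data: bytes, size: int = 4096) -> list[bytes]:
    """Cut data into fixed-size chunks (the last one may be shorter)."""
    return [data[i:i + size] for i in range(0, len(data), size)]


def dedup_across_clients(clients: dict[str, bytes]):
    """Store each unique chunk once, however many clients contain it."""
    pool: dict[str, bytes] = {}         # fingerprint -> chunk, shared by all clients
    recipes: dict[str, list[str]] = {}  # client name -> ordered fingerprints
    for name, data in clients.items():
        recipes[name] = []
        for chunk in split(data):
            fp = hashlib.sha256(chunk).hexdigest()
            pool.setdefault(fp, chunk)
            recipes[name].append(fp)
    return pool, recipes


def verified_restore(pool: dict[str, bytes], recipe: list[str]) -> bytes:
    """Rebuild a client's data, re-hashing each chunk to detect silent corruption."""
    out = []
    for fp in recipe:
        chunk = pool[fp]
        if hashlib.sha256(chunk).hexdigest() != fp:
            raise ValueError(f"chunk {fp[:12]} failed its integrity check")
        out.append(chunk)
    return b"".join(out)


# Two hypothetical clients sharing a 16 KiB "OS image" plus 4 KiB of their own data.
os_image = b"".join(hashlib.sha256(str(i).encode()).digest() for i in range(512))
clients = {"web01": os_image + b"x" * 4096, "db01": os_image + b"y" * 4096}
pool, recipes = dedup_across_clients(clients)
logical = sum(len(d) for d in clients.values())
physical = sum(len(c) for c in pool.values())
print(f"logical={logical} physical={physical} ratio={logical / physical:.2f}")
```

This prints `logical=40960 physical=24576 ratio=1.67`. The shared image is stored once for both clients. Re-hashing on restore turns silent corruption of a stored chunk into an explicit error rather than bad data.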

Understanding how the nodes of an Avamar server work together is essential for system resilience. Professionals learn to configure, monitor, and troubleshoot these nodes so that backups run consistently and efficiently. They learn to detect performance bottlenecks, improve throughput, and keep the backup system healthy. These are practical skills that translate directly into operational improvements.
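
A common first step in bottleneck analysis is to treat a backup as a pipeline whose end-to-end rate is capped by its slowest stage. The Python sketch below uses hypothetical stage names and throughput figures. It also models a general effect of source-side deduplication: stages after the deduplication step only carry unique data.

```python
# Hypothetical measured throughput for each stage of one client's backup, in MB/s.
STAGES = {
    "disk read": 400.0,
    "chunking and hashing": 250.0,
    "network to server": 110.0,
    "server ingest": 300.0,
}
# Assumption: these stages only see data left over after client-side deduplication.
POST_DEDUP = frozenset({"network to server", "server ingest"})


def bottleneck(rates: dict[str, float], post_dedup: frozenset[str] = frozenset(),
               unique_fraction: float = 1.0) -> tuple[str, float]:
    """Return the slowest stage and its capacity in MB/s of logical (pre-dedup) data.

    A stage that only handles unique data can keep up with rate / unique_fraction
    MB/s of logical data, because most of the input never reaches it.
    """
    effective = {
        name: rate / unique_fraction if name in post_dedup else rate
        for name, rate in rates.items()
    }
    slowest = min(effective, key=effective.get)
    return slowest, effective[slowest]


print(bottleneck(STAGES))                   # ('network to server', 110.0)
print(bottleneck(STAGES, POST_DEDUP, 0.1))  # ('chunking and hashing', 250.0)
```

Without deduplication, the network link limits the backup. If only 10% of the data is new, the network has spare capacity and client-side hashing becomes the limiting stage.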

Advanced Configuration and Deployment

The certification emphasizes deploying Avamar in diverse IT environments. Professionals learn to integrate Avamar into heterogeneous infrastructures, including virtualized platforms, cloud storage, and traditional on-premises data centers. This versatility lets certified engineers design backup solutions that fit specific organizational requirements, whether the priority is speed, storage efficiency, or redundancy.

Configuration skills go beyond basic deployment. Certified engineers know how to design backup policies that reflect organizational priorities. These priorities include retention schedules, replication policies, and recovery point objectives (RPOs, the maximum acceptable data loss measured in time). Getting these parameters right keeps backup operations aligned with business continuity plans and regulatory requirements. The ability to craft such policies marks certified engineers as both technically adept and strategically minded.
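
Retention and RPO rules reduce to simple date arithmetic. The Python sketch below checks a backup history against a retention period and an RPO. The function names and the sample schedule are hypothetical, and this is not how Avamar itself evaluates its policies.

```python
from datetime import datetime, timedelta


def expired(backups: list[datetime], now: datetime, retention: timedelta) -> list[datetime]:
    """Backups older than the retention period are eligible for expiry."""
    return [b for b in sorted(backups) if now - b > retention]


def rpo_violations(backups: list[datetime], now: datetime, rpo: timedelta):
    """Return (start, end) gaps longer than the RPO, including the gap up to now."""
    times = sorted(backups) + [now]
    return [(a, b) for a, b in zip(times, times[1:]) if b - a > rpo]


# Hypothetical nightly 02:00 backups for March 1-10, with the March 6 run missing.
backups = [datetime(2024, 3, day, 2, 0) for day in range(1, 11) if day != 6]
now = datetime(2024, 3, 10, 12, 0)

print([b.day for b in expired(backups, now, timedelta(days=7))])  # [1, 2, 3]
print(rpo_violations(backups, now, timedelta(hours=24)))
```

With a 7-day retention, the March 1 to 3 backups are eligible for expiry. Against a 24-hour RPO, the check reports one violation: the 48-hour gap left by the missed March 6 run.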

The certification also covers advanced features such as client-side deduplication, incremental backups, and replication. Professionals learn to orchestrate complex workflows that balance performance with reliability and allow data to be restored quickly after a system failure. This depth of configuration knowledge sets EMCIE Avamar professionals apart from general IT practitioners.
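
Client-side (source) deduplication can be sketched as follows: the client fingerprints its chunks and sends only those the server does not already hold. The Python below is a simplified, hypothetical model. It assumes fixed 4 KiB chunks, SHA-256 fingerprints, and an in-memory set standing in for the server's index. It does not reproduce Avamar's protocol.

```python
import hashlib


def fingerprint_chunks(data: bytes, size: int = 4096) -> dict[str, bytes]:
    """Map each chunk's SHA-256 fingerprint to the chunk itself."""
    chunks = (data[i:i + size] for i in range(0, len(data), size))
    return {hashlib.sha256(c).hexdigest(): c for c in chunks}


def client_side_backup(data: bytes, server_index: set[str]) -> list[bytes]:
    """Return only the chunks the server lacks, then record them as stored."""
    local = fingerprint_chunks(data)
    # A real client would query the server in batches; here the index is a local set.
    missing = [fp for fp in local if fp not in server_index]
    server_index.update(missing)
    return [local[fp] for fp in missing]


server_index: set[str] = set()
# Hypothetical 32 KiB of distinct data on Monday; on Tuesday only the last 4 KiB changes.
monday = b"".join(hashlib.sha256(i.to_bytes(4, "big")).digest() for i in range(1024))
tuesday = monday[:-4096] + b"\x00" * 4096

sent_mon = client_side_backup(monday, server_index)
sent_tue = client_side_backup(tuesday, server_index)
print(sum(map(len, sent_mon)), sum(map(len, sent_tue)))  # 32768 4096
```

Tuesday's backup sends one 4 KiB chunk instead of 32 KiB. Chunk boundaries in this sketch are fixed, so inserting a single byte near the start of a file would shift every later chunk and defeat deduplication. Production systems commonly use content-defined (variable-size) chunking to avoid this.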

Troubleshooting and Problem-Solving Acumen

A defining feature of the certification is its focus on troubleshooting. Certified engineers are trained to anticipate and resolve problems ranging from performance degradation to system errors. Hands-on exercises and scenario-based learning build the analytical skill needed to diagnose issues quickly and correct them with minimal disruption.

This includes interpreting log files, assessing system alerts, and tracing backup failures to their root causes. Professionals develop a systematic method for resolving problems, so that corrective actions address underlying issues rather than surface symptoms. This proactive approach helps engineers keep backups running even under demanding conditions, which strengthens enterprise resilience.
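
A systematic log review often starts by counting errors and finding the earliest one, since later failures are frequently symptoms of it. The Python sketch below uses an invented log line format and invented error codes (`NET01`, `JOB99`). It is not the format of any actual Avamar log.

```python
import re
from collections import Counter

# Hypothetical log format (NOT a real Avamar format):
#   <date> <time> <LEVEL> <CODE> <message>
LINE = re.compile(r"^(\S+ \S+) (INFO|WARNING|ERROR) (\S+) (.*)$")


def triage(lines: list[str]):
    """Count errors by code and return the earliest error as a root-cause candidate."""
    counts: Counter[str] = Counter()
    first_error = None
    for line in lines:
        m = LINE.match(line)
        if not m:
            continue  # skip lines that do not match the assumed format
        ts, level, code, msg = m.groups()
        if level == "ERROR":
            counts[code] += 1
            if first_error is None:
                first_error = (ts, code, msg)
    return counts, first_error


log = [
    "2024-03-10 02:00:01 INFO START backup of web01",
    "2024-03-10 02:15:07 ERROR NET01 connection reset by peer",
    "2024-03-10 02:15:09 WARNING RETRY retrying in 30s",
    "2024-03-10 02:15:40 ERROR NET01 connection reset by peer",
    "2024-03-10 02:16:10 ERROR JOB99 backup failed after retries",
]
counts, first_error = triage(log)
print(counts)
print(first_error)
```

The final `JOB99` failure is what an operator sees first, but the earliest error points at the repeated network resets as the more likely root cause.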

Troubleshooting also extends to storage utilization. EMCIE Avamar professionals learn to spot inefficiencies, adjust deduplication strategies, and tune system performance as demands change. This capacity for continuous improvement contributes directly to operational excellence and data management strategy.

Strategic Understanding of Data Governance

Beyond technical proficiency, the certification builds a strategic perspective on data governance and compliance. Certified professionals learn about retention policies, regulatory mandates, and security protocols. With that knowledge, they can design backup solutions that reduce risk and uphold institutional standards. This is particularly valuable in finance, healthcare, and government, where data protection is both a legal requirement and a business necessity.

The credential stresses aligning technical operations with broader business objectives. Professionals learn to evaluate business requirements, assess how critical each dataset is, and implement backup strategies that support continuity and risk management. Their work then contributes to both operational stability and regulatory compliance.

Enhancing Operational Efficiency

The certification helps professionals improve operational efficiency through careful resource management. By mastering deduplication, replication, and incremental backup, certified engineers can reduce storage consumption, shorten backup windows, and lower network load. These gains can translate into cost savings and better service levels. Enterprises can then absorb growing data volumes without a proportional investment in hardware or bandwidth.
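
The effect of deduplication on network transfer time is simple arithmetic. The sketch below uses hypothetical figures: 2 TB of protected data, 2% new unique data per night, and a 1 Gbit/s link with 80% usable throughput. Real ratios will differ.

```python
def transfer_hours(gb: float, link_mbit_s: float, efficiency: float = 0.8) -> float:
    """Hours needed to move `gb` gigabytes (10**9 bytes) over a link.

    `efficiency` is the assumed usable fraction of the nominal link speed.
    """
    usable_bytes_per_s = link_mbit_s * 1e6 / 8 * efficiency
    return gb * 1e9 / usable_bytes_per_s / 3600


protected_gb = 2000      # hypothetical: 2 TB of client data
unique_fraction = 0.02   # hypothetical: share of each night's data that is new after dedup
link_mbit_s = 1000       # 1 Gbit/s

full = transfer_hours(protected_gb, link_mbit_s)
dedup = transfer_hours(protected_gb * unique_fraction, link_mbit_s)
print(f"full copy: {full:.2f} h, deduplicated: {dedup:.2f} h")
```

This prints `full copy: 5.56 h, deduplicated: 0.11 h`. The network is only one stage, though. The client still reads and fingerprints all 2 TB, so the real backup window also depends on client disk and CPU speed.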

The credential also promotes continuous monitoring and refinement. Professionals learn to establish performance baselines, track system metrics, and spot trends that may signal emerging problems. This vigilance allows timely intervention and keeps backup environments running at peak efficiency. Built into daily practice, these habits make the organization's IT infrastructure more reliable and agile.
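
Establishing a baseline and flagging deviations can be as simple as comparing each run with the mean and standard deviation of the preceding runs. The Python sketch below uses hypothetical nightly backup durations. It assumes a threshold of three standard deviations over a seven-run window.

```python
from statistics import mean, stdev


def flag_anomalies(durations_min: list[float], window: int = 7, k: float = 3.0) -> list[int]:
    """Flag runs whose duration exceeds the trailing-window baseline by more than k std devs."""
    flagged = []
    for i in range(window, len(durations_min)):
        baseline = durations_min[i - window:i]
        mu, sigma = mean(baseline), stdev(baseline)
        if durations_min[i] > mu + k * sigma:
            flagged.append(i)
    return flagged


# Hypothetical nightly backup durations in minutes; run 9 is unusually slow.
nightly = [42, 40, 44, 41, 43, 39, 42, 41, 43, 95, 44]
print(flag_anomalies(nightly))  # [9]
```

Once run 9 enters the window, it inflates the baseline for the following nights. A production check might exclude flagged runs from the baseline so that one outlier does not mask the next.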

Real-World Application and Innovation

Certified EMCIE Avamar professionals stand out for their ability to apply theory in high-stakes environments. They contribute to projects such as large-scale data migrations, disaster recovery planning, and hybrid cloud integration. There they handle challenges that could otherwise threaten data integrity or operational continuity, giving organizations both tactical and strategic advantages.

The credential also encourages innovation. Professionals are encouraged to use automation, scripting, and integration with emerging technologies to streamline backup workflows. By experimenting with new approaches and sharing best practices, EMCIE Avamar engineers drive continuous improvement. This helps their organizations keep pace with rapid technological change while protecting data and improving efficiency.

Expanding Professional Versatility

The skills gained through EMCIE Avamar certification extend beyond traditional backup tasks. Certified engineers often take part in cross-functional initiatives, advising on infrastructure optimization, system architecture, and risk mitigation. Their ability to turn technical insight into strategic recommendations increases their value and widens the range of responsibilities they can take on.

The credential is also a foundation for further specialization. Engineers may pursue advanced certifications, explore cloud storage technologies, or work on integrating analytics with backup systems. This breadth supports long-term career development in a fast-changing field, making the certification a worthwhile career investment.

Elevating Professional Credibility

The EMC Implementation Engineer Avamar credential is a clear validation of technical expertise in enterprise data protection and storage management. It tells employers and peers that the holder has both theoretical knowledge and practical skill in deploying, configuring, and optimizing Avamar systems. This recognition distinguishes certified engineers from their peers in IT infrastructure and backup.

Data volumes are growing rapidly and regulatory compliance demands close attention. As a result, organizations look for people with demonstrable, specialized knowledge of data protection. EMCIE Avamar certified professionals are regarded as dependable custodians of organizational data who can run backup operations with precision and foresight. This standing builds trust within teams and often leads to greater responsibility in projects that directly affect business continuity.

Expanding Career Horizons

The credential opens many paths for advancement in IT. Certified engineers find opportunities in enterprise storage administration, backup and recovery management, disaster recovery planning, and systems engineering. They can also move between roles that require deep technical knowledge and those that require strategic oversight.

Organizations value certified engineers who can assess infrastructure needs, design efficient backup architectures, and ensure rapid recovery from data disruptions. This capability often leads to roles such as senior storage engineer, backup solution architect, or infrastructure consultant. Certified professionals are also sought to lead teams responsible for critical data protection initiatives, a path from operational execution to strategic guidance.

Enhancing Earning Potential

The certification can also carry a financial benefit. Certified engineers often earn more than non-certified peers because of their specialized knowledge and skills. Organizations are willing to invest in people who can reduce storage costs, optimize backup operations, and lower the risk of data loss, because retaining such talent pays off over time.

The credential can also lead to consulting roles, freelance engagements, and project-based assignments that provide additional income. Certified professionals may also find relocation and international opportunities, since multinational organizations recognize the same credential across regions.

Industry Recognition and Demand

Demand for EMCIE Avamar certified professionals is strong in industries where data integrity is paramount. Finance, healthcare, government, and technology organizations need engineers who can implement resilient backup solutions and meet strict regulatory requirements. The credential validates technical skill and signals an understanding of industry-specific data governance, improving employability in these high-stakes environments.

Recognition also extends to professional networks and industry forums. Certified engineers may be invited to share insights, present at conferences, or join collaborative projects. This visibility strengthens their reputation and can establish them as thought leaders in enterprise backup and recovery.

Access to Strategic Projects

EMCIE Avamar certification prepares professionals for strategically important projects such as large-scale data migration, hybrid cloud integration, and disaster recovery implementation. On these projects, their expertise helps keep risk low and efficiency high. Contributing to work that directly affects organizational resilience lets certified engineers demonstrate both technical and managerial ability.

Such projects often accelerate career progression. Certified professionals work with cross-functional teams, interact with senior leadership, and influence decisions about IT infrastructure and data protection strategy. The result is a combination of technical insight and business acumen that makes EMCIE Avamar holders versatile assets in any enterprise.

Networking and Professional Community

The credential also connects professionals with a community of peers who share expertise, best practices, and emerging trends in backup technology. This network offers mentorship, collaborative problem-solving, and exposure to new approaches to enterprise storage challenges. Participating in it broadens perspectives and helps certified engineers keep their skills current as the technology evolves.

Forums, workshops, and user groups let certified engineers exchange practical insights on deployment, performance tuning, and new uses of Avamar. These exchanges reinforce technical skills and give professionals a voice in shaping best practices for enterprise backup.

Global Recognition and Versatility

A major benefit of EMCIE Avamar certification is its international recognition. Enterprises in many countries recognize the credential, so certified professionals are competitive candidates for roles in different regions. The same expertise lets engineers pursue international opportunities, take part in multinational projects, and adapt to varied IT environments with confidence.

This recognition reflects the versatility of EMCIE Avamar professionals. They can work across heterogeneous infrastructures and integrate backup solutions across physical, virtual, and cloud environments. They also contribute to both day-to-day efficiency and strategic planning. Working effectively in different contexts improves professional mobility and long-term career resilience.

Contribution to Organizational Resilience

Certified engineers play a central role in keeping enterprise IT systems robust and available. Their expertise in backup configuration, performance monitoring, and disaster recovery planning directly affects the reliability of organizational operations. Effective data protection strategies reduce downtime, limit risk, and allow rapid recovery from incidents, safeguarding critical business processes.

Their contribution often extends beyond technical execution. Certified professionals advise leadership on infrastructure investments, risk mitigation, and compliance. Their insights shape decisions about organizational resilience, making them key stakeholders in operational and strategic planning.

Recognition Beyond Technical Skills

Technical expertise is fundamental, but the certification also demonstrates broader skills such as analytical thinking, problem-solving, and sound judgment. Certified engineers are often entrusted with high-stakes responsibilities that require careful planning, risk assessment, and coordination across teams. The credential signals to employers that the holder can combine technical execution with organizational insight.

This combination of skills improves prospects for roles that require leadership as well as operational competence. Professionals may oversee teams that manage critical data environments, guide the development of backup policies, and mentor junior engineers. In doing so, they contribute to both immediate operational success and long-term organizational stability.

Opportunities for Continued Growth

EMCIE Avamar certification is often a gateway to further specialization. Engineers may pursue advanced certifications in related EMC technologies, cloud storage, or broader IT infrastructure. This commitment to continuous learning keeps certified professionals relevant in a fast-changing field and positions them for sustained career growth.

The credential also encourages a proactive approach to innovation. Certified engineers often lead efforts to automate tasks, streamline workflows, and evaluate new backup methods. Their ability to integrate new technologies with established practices improves organizational efficiency and makes them valuable contributors to enterprise IT strategy.

Understanding the Certification Framework

Earning the EMC Implementation Engineer Avamar certification requires careful preparation. The credential assesses both theoretical understanding and practical proficiency. Candidates must show they can deploy, configure, and manage Avamar backup solutions in enterprise environments. The first step is understanding the certification framework. Candidates should become familiar with Avamar architecture, client-server interactions, backup policies, replication strategies, and performance optimization techniques.

The exam (E20-594) covers several knowledge areas, including deduplication mechanisms, disaster recovery planning, troubleshooting methodology, and compliance considerations. Candidates should expect scenarios based on real operational challenges that call for analytical reasoning and applied skill. Preparation therefore means building an integrated understanding of these areas and of how each affects backup reliability, data integrity, and business continuity.

Study Materials and Resources

Effective preparation starts with the right study materials. EMC documentation, training courses, and practice exercises form the core. Training programs that simulate enterprise backup environments are especially useful. They let candidates configure systems, monitor performance, and troubleshoot issues in a controlled setting, which reinforces theory and builds confidence.

Supplementary resources such as technical whitepapers, community forums, and case studies add context. Whitepapers explain architectural details, best practices, and advanced features like client-side deduplication and incremental backup optimization. Forums let candidates exchange problem-solving strategies, discuss complex scenarios, and clear up ambiguities. Case studies show how engineers have implemented Avamar in different organizations, anchoring theory in concrete outcomes.

Strategic Study Techniques

A strategic approach to preparation improves the chance of success. A structured study schedule keeps progress consistent and reduces the risk of missing key topics. Breaking the curriculum into manageable segments, prioritizing difficult areas, and revisiting hard concepts improves retention. Active techniques deepen understanding and aid long-term recall: taking notes, summarizing concepts in your own words, and explaining principles to peers.

Simulation exercises are central to reinforcing what you learn. Configuring virtual Avamar environments gives candidates practical familiarity with backup policies, replication setups, and node management. It also exposes them to problems that mirror real operational situations. Repeated work with simulated scenarios builds the adaptability and confidence needed for the exam.

Troubleshooting and Analytical Practice

Troubleshooting and analytical skill are a critical part of exam preparation. Candidates must be able to identify performance bottlenecks, diagnose system errors, and apply effective fixes. Building these skills takes systematic practice: reading log files, analyzing backup alerts, and simulating system failures to work out recovery strategies.

Analytical exercises enable candidates to understand the root causes of issues rather than merely addressing superficial symptoms. This approach enhances operational efficiency and equips professionals to maintain seamless backup operations under diverse conditions. Repeated exposure to complex troubleshooting scenarios instills confidence, ensuring that candidates can respond effectively to the situational questions presented in the certification assessment.

Time Management and Exam Strategy

Passing the EMCIE Avamar exam takes more than technical knowledge; effective time management during both preparation and the exam itself is crucial. Allocating sufficient time to study each domain, while balancing practical exercises and theoretical review, prevents cognitive overload and ensures comprehensive coverage. During the examination, candidates benefit from reading questions carefully, analyzing scenario-based prompts, and allocating time according to complexity.

Developing an internal strategy for prioritizing questions reduces anxiety and enhances performance. Candidates may find it advantageous to first address familiar topics, consolidate earned points, and then focus on challenging scenarios requiring deeper analytical reasoning. Practicing under timed conditions reinforces this approach, enabling candidates to adapt to the pacing and cognitive demands of the actual exam environment.

Understanding Real-World Scenarios

The certification emphasizes practical application, so preparation should include exposure to real-world scenarios. Candidates benefit from studying examples of enterprise backup deployments, data recovery operations, and system optimizations executed by certified engineers. Analyzing these scenarios helps learners appreciate the interplay between policy design, system configuration, and operational efficiency.

For instance, observing how professionals optimize deduplication for large datasets or implement replication strategies across geographically distributed nodes highlights the nuanced considerations that influence system performance. Understanding these scenarios equips candidates to answer situational questions with clarity and insight, reflecting the practical acumen expected of EMCIE Avamar certified engineers.

Leveraging Peer Support and Mentorship

Networking with peers and seeking guidance from mentors enhances preparation effectiveness. Engaging in discussions with experienced professionals allows candidates to explore alternative approaches, clarify ambiguities, and gain insight into best practices. Mentors who have previously earned EMCIE Avamar certification can provide personalized guidance, highlight common pitfalls, and offer strategies for mastering complex topics.

Peer collaboration also enables group problem-solving exercises, where candidates collectively troubleshoot simulated failures, optimize backup operations, and analyze performance metrics. This collaborative environment fosters critical thinking, reinforces learning, and provides exposure to diverse perspectives, mirroring the collaborative nature of enterprise IT operations.

Emphasizing Compliance and Governance

An often-overlooked aspect of exam preparation is understanding regulatory compliance and data governance implications. Candidates must be conversant with organizational retention policies, industry-specific mandates, and security protocols that influence backup configurations. Studying these elements ensures that candidates can demonstrate not only technical proficiency but also strategic awareness of the broader operational context in which Avamar systems function.

Knowledge of compliance considerations is particularly crucial in sectors with stringent data protection requirements. Candidates should examine case studies where backup policies were designed to meet regulatory obligations, exploring how certified engineers balance efficiency, reliability, and adherence to legal frameworks. This dual focus on technical skill and governance understanding is essential for comprehensive exam readiness.

Continuous Reinforcement and Revision

Earning EMCIE Avamar certification relies on continuous reinforcement of knowledge and periodic revision. Candidates are encouraged to revisit challenging topics, refine practical skills through repeated simulation exercises, and periodically assess their understanding through practice assessments. This iterative approach strengthens memory retention, enhances problem-solving proficiency, and ensures that knowledge is readily deployable in both the exam context and professional practice.

Reinforcement also includes evaluating performance against self-established benchmarks. Candidates may identify areas of weakness, prioritize remedial study, and track progress over time. This disciplined methodology cultivates confidence and ensures that the candidate enters the examination with comprehensive readiness, equipped to tackle both theoretical questions and practical scenarios with ease.

Driving Operational Excellence

EMC Implementation Engineer Avamar certified professionals occupy a pivotal role in enterprise data management, providing expertise that translates directly into operational excellence. Their ability to design, deploy, and optimize backup solutions ensures that organizational data remains secure, accessible, and recoverable under a wide range of circumstances. In dynamic IT environments, where data volumes expand exponentially and infrastructure complexity increases, these professionals apply their advanced knowledge to enhance performance, reduce downtime, and maximize storage efficiency.

Certified engineers leverage sophisticated deduplication techniques, replication strategies, and incremental backup workflows to streamline processes while conserving resources. Their interventions reduce redundant storage consumption and optimize network bandwidth, creating backup systems that operate with agility and resilience. Organizations benefit not only from reduced operational costs but also from heightened reliability, as certified engineers anticipate potential issues and implement preemptive measures that mitigate risks.
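Incremental workflows rest on a simple comparison: only files that are new or have changed since the previous run need to be processed. The Python sketch below compares two manifests of per-file SHA-256 digests and reports what an incremental run would have to handle. It is a generic illustration that assumes file contents are available in memory. It does not describe how Avamar itself tracks changes, and the file names are hypothetical.

```python
import hashlib


def build_manifest(files: dict[str, bytes]) -> dict[str, str]:
    """Map each file path to the SHA-256 digest of its contents."""
    return {path: hashlib.sha256(body).hexdigest() for path, body in files.items()}


def incremental_plan(previous: dict[str, str], current: dict[str, str]) -> dict[str, list[str]]:
    """Compare two manifests and list the paths an incremental run must handle."""
    added = sorted(p for p in current if p not in previous)
    changed = sorted(p for p in current if p in previous and current[p] != previous[p])
    deleted = sorted(p for p in previous if p not in current)
    return {"added": added, "changed": changed, "deleted": deleted}


if __name__ == "__main__":
    # Hypothetical file sets captured on two consecutive days.
    monday = {"a.txt": b"alpha", "b.txt": b"beta", "old.log": b"x"}
    tuesday = {"a.txt": b"alpha", "b.txt": b"beta v2", "c.txt": b"gamma"}
    print(incremental_plan(build_manifest(monday), build_manifest(tuesday)))
    # -> {'added': ['c.txt'], 'changed': ['b.txt'], 'deleted': ['old.log']}
```

Unchanged files such as `a.txt` never appear in the plan, which is where the savings in storage and network bandwidth come from.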

Case Studies and Practical Applications

Examining real-world scenarios highlights the tangible impact of EMCIE Avamar expertise. In large-scale enterprise migrations, certified professionals orchestrate complex backup strategies to ensure seamless transfer of voluminous datasets across diverse infrastructures. By carefully configuring policies, monitoring system health, and verifying data integrity, they facilitate transitions with minimal disruption to business operations.

In multinational corporations, Avamar professionals often manage distributed backup environments spanning multiple geographies. Their skill in configuring replication between data centers, managing latency challenges, and synchronizing recovery objectives ensures consistent data availability. These practical applications underscore the importance of certification, as professionals translate theoretical knowledge into effective operational solutions that safeguard critical information assets.
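Managing replication between distant data centers often starts with a back-of-the-envelope estimate of how long each replication cycle will take. The Python sketch below divides the deduplicated volume of changed data by the effective transfer rate. That rate is capped both by the usable share of the link and by the classic TCP limit of window size divided by round-trip time for each stream. All input figures are hypothetical, and the model deliberately ignores packet loss, congestion control, and compression.

```python
def replication_hours(changed_gb: float, dedup_ratio: float, link_mbps: float,
                      utilization: float, rtt_ms: float, tcp_window_kib: float,
                      streams: int = 1) -> float:
    """Rough replication time for one cycle, in hours (simplified model)."""
    unique_bits = changed_gb * 1e9 / dedup_ratio * 8        # data left after dedup
    link_bps = link_mbps * 1e6 * utilization                # usable share of the link
    # Each TCP stream can carry at most one window per round trip.
    window_bps = tcp_window_kib * 1024 * 8 / (rtt_ms / 1000) * streams
    effective_bps = min(link_bps, window_bps)
    return unique_bits / effective_bps / 3600


if __name__ == "__main__":
    # Hypothetical: 500 GB changed per day, 10:1 dedup, 100 Mbit/s link at 80%,
    # 50 ms round-trip time, 64 KiB TCP window.
    print(f"{replication_hours(500, 10, 100, 0.8, 50, 64, streams=1):.1f} h")  # -> 10.6 h
    print(f"{replication_hours(500, 10, 100, 0.8, 50, 64, streams=8):.1f} h")  # -> 1.4 h
```

With these inputs, a single stream is window-limited to roughly 10.5 Mbit/s, while eight parallel streams reach the 80 Mbit/s usable link rate. Latency, not just raw bandwidth, therefore shapes replication design.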

Enhancing Disaster Recovery Capabilities

Disaster recovery planning is a core domain where EMCIE Avamar certified engineers make significant contributions. Organizations increasingly rely on these professionals to implement comprehensive strategies that enable rapid recovery from hardware failures, software malfunctions, or catastrophic events. Certified engineers help the business define recovery point objectives (RPOs), the maximum acceptable data loss measured in time, and recovery time objectives (RTOs), the maximum acceptable time to restore service. They then design backup schedules and restore procedures that meet those objectives without compromising system stability.
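Whether a backup schedule can meet its recovery point and recovery time objectives can be checked with simple arithmetic. The worst-case data loss is the longest stretch during which new data had no completed backup. A first-order restore estimate is the data volume divided by the sustained restore throughput. The Python sketch below applies both checks. The schedule, dataset size, and throughput are hypothetical, and real restore times also depend on verification, application recovery, and infrastructure readiness.

```python
from datetime import datetime, timedelta


def worst_case_data_loss(backup_times: list[datetime], now: datetime) -> timedelta:
    """Longest window during which new data had no completed backup.

    Assumes backup_times are completion times of successful backups, all <= now.
    """
    points = sorted(backup_times) + [now]
    return max(later - earlier for earlier, later in zip(points, points[1:]))


def estimated_restore_time(dataset_gb: float, restore_mb_per_s: float) -> timedelta:
    """First-order estimate: data volume divided by sustained restore throughput."""
    return timedelta(seconds=dataset_gb * 1000 / restore_mb_per_s)


def meets_objectives(backup_times: list[datetime], now: datetime, dataset_gb: float,
                     restore_mb_per_s: float, rpo: timedelta, rto: timedelta) -> dict[str, bool]:
    """Compare the schedule and the restore estimate against the stated objectives."""
    return {
        "rpo_met": worst_case_data_loss(backup_times, now) <= rpo,
        "rto_met": estimated_restore_time(dataset_gb, restore_mb_per_s) <= rto,
    }


if __name__ == "__main__":
    day = datetime(2024, 1, 1)
    backups = [day + timedelta(hours=h) for h in (0, 6, 12, 18)]
    now = day + timedelta(hours=22)
    print(meets_objectives(backups, now, dataset_gb=2000, restore_mb_per_s=250,
                           rpo=timedelta(hours=4), rto=timedelta(hours=4)))
    # -> {'rpo_met': False, 'rto_met': True}
```

For four backups a day at six-hour intervals, the worst-case loss is six hours. A four-hour recovery point objective is therefore missed, even though the estimated restore time of about 2.2 hours meets a four-hour recovery time objective.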

Through simulation exercises and contingency planning, professionals test backup systems under varied scenarios, identifying weaknesses and implementing corrective measures. Their proactive approach strengthens organizational resilience, reduces potential downtime, and instills confidence in stakeholders regarding the robustness of IT infrastructure. The practical experience gained in managing high-stakes recovery situations differentiates EMCIE Avamar professionals as indispensable contributors to business continuity.

Optimizing System Performance

Certified professionals continually monitor and optimize backup operations to maintain peak system performance. They analyze throughput metrics, detect anomalies, and fine-tune system parameters to enhance efficiency. By leveraging performance analytics, EMCIE Avamar engineers identify bottlenecks, reallocate resources, and implement optimization strategies that improve both speed and reliability of backup processes.
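One common way to detect throughput anomalies is to compare each job against the median of its peers. Using the median absolute deviation keeps a single failing job from distorting the baseline. The Python sketch below computes throughput in MB/s and flags jobs whose modified z-score exceeds 3.5, a widely used cutoff. The job names and figures are hypothetical, and this is a generic statistical technique rather than a built-in Avamar report.

```python
from statistics import median


def throughput_mb_s(bytes_moved: int, seconds: float) -> float:
    """Average throughput of one backup job in MB/s."""
    return bytes_moved / 1e6 / seconds


def flag_anomalies(samples: dict[str, float], threshold: float = 3.5) -> list[str]:
    """Return names of jobs whose modified z-score exceeds the threshold."""
    values = list(samples.values())
    med = median(values)
    mad = median(abs(v - med) for v in values)  # median absolute deviation
    if mad == 0:
        return []  # all jobs (nearly) identical: nothing stands out
    return sorted(name for name, v in samples.items()
                  if abs(0.6745 * (v - med) / mad) > threshold)


if __name__ == "__main__":
    jobs = {
        "job-a": throughput_mb_s(600_000_000, 5),  # 120 MB/s
        "job-b": 118.0,
        "job-c": 125.0,
        "job-d": 122.0,
        "job-e": 31.0,
    }
    print(flag_anomalies(jobs))  # -> ['job-e']
```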

This ongoing refinement extends to storage utilization. Professionals assess deduplication effectiveness, evaluate retention policies, and implement incremental strategies that balance resource consumption with operational objectives. Their ability to combine analytical insight with practical implementation ensures that backup systems not only function effectively but also adapt to evolving organizational needs.

Contributing to Compliance and Governance

Data governance and regulatory compliance are increasingly critical considerations for organizations managing sensitive information. EMCIE Avamar professionals bring expertise that ensures backup systems adhere to organizational policies and legal mandates. They implement retention schedules, monitor access controls, and maintain audit trails that satisfy industry regulations and internal standards.
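Retention schedules are often expressed as tiers, for example keeping every daily backup for a week, weekly backups for a month, and monthly backups for a year. The Python sketch below applies such a grandfather-father-son style rule to a list of backup dates and returns the ones to keep. The tier lengths, the use of Sunday as the weekly backup, and the use of the first of the month as the monthly backup are illustrative assumptions, not Avamar defaults.

```python
from datetime import date, timedelta


def months_between(earlier: date, later: date) -> int:
    """Whole calendar months from earlier to later, ignoring the day of month."""
    return (later.year - earlier.year) * 12 + (later.month - earlier.month)


def backups_to_keep(backups: list[date], today: date, daily_days: int = 7,
                    weekly_weeks: int = 4, monthly_months: int = 12) -> set[date]:
    """Apply a tiered (daily/weekly/monthly) retention rule to backup dates."""
    keep: set[date] = set()
    for d in backups:
        age_days = (today - d).days
        if age_days < daily_days:
            keep.add(d)  # daily tier: every recent backup
        elif d.weekday() == 6 and age_days < weekly_weeks * 7:
            keep.add(d)  # weekly tier: Sunday backups (assumed convention)
        elif d.day == 1 and months_between(d, today) < monthly_months:
            keep.add(d)  # monthly tier: first-of-month backups (assumed convention)
    return keep


if __name__ == "__main__":
    today = date(2024, 3, 31)
    history = [today - timedelta(days=i) for i in range(90)]
    kept = backups_to_keep(history, today)
    print(len(kept), "of", len(history), "backups retained")  # -> 12 of 90 backups retained
```

Every backup not returned would be eligible for expiration, which is also the set an auditor would expect to see removed under the stated policy.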

Their strategic understanding of compliance enables organizations to mitigate risk while optimizing operational efficiency. Professionals are adept at designing systems that balance regulatory adherence with performance, ensuring that data protection strategies support both legal obligations and business objectives. This dual focus enhances the strategic value of certified engineers, positioning them as both technical experts and custodians of organizational governance.

Facilitating Technological Innovation

The role of EMCIE Avamar certified engineers extends beyond maintenance and optimization. Their expertise fosters technological innovation within enterprises, as they explore new methodologies to enhance backup and recovery operations. Automation of repetitive tasks, integration with emerging storage solutions, and exploration of hybrid cloud deployments exemplify the innovative contributions these professionals make.

By experimenting with novel approaches and sharing insights with teams, certified engineers drive efficiency improvements and advance the overall sophistication of data management practices. Their ability to implement creative solutions ensures that backup infrastructure evolves in tandem with organizational growth and technological progress, solidifying the long-term relevance of their expertise.

Mentorship and Knowledge Transfer

Certified professionals frequently serve as mentors within IT departments, sharing expertise with junior engineers and guiding teams in implementing best practices. This knowledge transfer ensures continuity of skill within the organization and promotes a culture of excellence. Mentorship extends to advising on complex configurations, troubleshooting challenging scenarios, and reinforcing understanding of core principles, thereby amplifying the impact of certification across multiple levels of enterprise operations.

By cultivating skill development among colleagues, EMCIE Avamar professionals contribute to organizational capability, enabling teams to manage sophisticated backup environments efficiently and consistently. This mentorship function reinforces the strategic value of certified engineers, as they influence both current operations and the professional growth of their peers.

Strategic Decision Support

In addition to technical execution, EMCIE Avamar professionals provide insight that informs strategic decision-making. Their understanding of system capabilities, performance metrics, and risk factors positions them to advise leadership on infrastructure investments, policy design, and resource allocation. Organizations benefit from their ability to translate operational data into actionable recommendations, ensuring that decisions regarding storage expansion, disaster recovery planning, and data governance are grounded in technical feasibility and practical experience.

Their contribution to strategic planning enhances organizational resilience and operational agility. Certified engineers bridge the gap between technical intricacies and managerial priorities, enabling enterprises to make informed, forward-looking decisions that optimize both performance and reliability.

Impact on Organizational Efficiency

EMCIE Avamar certified professionals can substantially improve organizational efficiency. By streamlining backup operations, optimizing resource allocation, and implementing reliable recovery strategies, they reduce downtime and ensure continuity of business processes. Their interventions enhance productivity, minimize operational disruption, and enable enterprises to handle increasing data demands without proportional expansion of infrastructure or staffing.

This efficiency extends beyond immediate technical outcomes. Certified engineers influence workflow optimization, procedural standardization, and performance monitoring practices, creating an ecosystem where data protection aligns seamlessly with organizational objectives. The cumulative effect of their expertise results in a resilient, responsive, and highly efficient IT environment.

Expanding Professional Influence

The expertise of EMCIE Avamar certified engineers extends into professional recognition beyond their immediate organization. They are often invited to participate in conferences, contribute to technical forums, and advise on industry standards for backup and recovery. This professional influence enhances the visibility of certified engineers and reinforces the broader significance of their skill set within the IT community.

Engagement in these arenas allows professionals to remain abreast of emerging trends, share innovative approaches, and contribute to the evolution of enterprise data management practices. Their influence not only shapes organizational outcomes but also advances industry-wide understanding of best practices in backup solutions and data protection strategies.

Adapting to Emerging Trends in Data Protection

The landscape of data backup and recovery is evolving at an unprecedented pace, driven by the exponential growth of data, the proliferation of cloud technologies, and the increasing complexity of enterprise IT environments. EMC Implementation Engineer Avamar certified professionals are uniquely positioned to navigate this transformation, applying their specialized knowledge to implement efficient, reliable, and scalable backup solutions. Their expertise in Avamar architecture, deduplication strategies, and replication mechanisms allows organizations to maintain data integrity while adapting to emerging technological paradigms.

As enterprises increasingly adopt hybrid cloud architectures, certified engineers facilitate seamless integration of on-premises and cloud-based storage solutions. Their understanding of replication policies, performance optimization, and disaster recovery planning ensures that data remains accessible and secure across distributed environments. Professionals anticipate potential bottlenecks and implement proactive measures, aligning backup strategies with evolving business needs and regulatory mandates.

Advancing Automation and Intelligent Backup

One of the most significant developments in modern data protection is the integration of automation and intelligent management tools. EMCIE Avamar professionals are at the forefront of implementing automated backup workflows, reducing manual intervention, and enhancing operational efficiency. Automation enables organizations to execute scheduled backups, monitor system health, and respond to anomalies with minimal human oversight, freeing IT teams to focus on strategic initiatives.

Beyond automation, intelligent backup solutions leverage predictive analytics and machine learning to optimize storage utilization, detect potential failures, and recommend corrective actions. Certified engineers apply their deep understanding of Avamar systems to configure these advanced tools, ensuring that data protection processes are both proactive and adaptive. This fusion of technical mastery and forward-thinking innovation positions EMCIE Avamar professionals as catalysts for enhanced resilience and operational agility.

Navigating Security and Compliance Challenges

As data becomes an increasingly valuable asset, the risks associated with unauthorized access, corruption, or loss have escalated. Certified professionals play a pivotal role in fortifying backup systems against these threats. Their expertise encompasses not only the technical aspects of data protection but also compliance with regulatory frameworks, industry standards, and organizational policies. By implementing robust access controls, encryption protocols, and retention policies, EMCIE Avamar engineers safeguard sensitive information while ensuring adherence to legal and regulatory requirements.

In sectors such as finance, healthcare, and government, compliance is not merely a procedural concern but a strategic imperative. Professionals with EMCIE Avamar certification help organizations navigate complex regulatory landscapes, integrating backup strategies that satisfy legal obligations without compromising efficiency or performance. This dual capability—technical excellence coupled with governance insight—amplifies their value in contemporary IT ecosystems.

Supporting Disaster Recovery and Business Continuity

The imperative for robust disaster recovery and business continuity strategies has never been more pronounced. Organizations face potential disruptions from hardware failures, cyberattacks, natural disasters, and human error. EMCIE Avamar certified engineers contribute significantly to mitigating these risks by designing comprehensive recovery plans, establishing recovery point and recovery time objectives, and conducting simulation exercises to validate system readiness.

Their role extends to continuous monitoring and optimization of disaster recovery protocols. By analyzing backup performance metrics, detecting inefficiencies, and refining policies, certified professionals ensure that recovery procedures remain effective under diverse circumstances. This proactive stewardship strengthens organizational resilience, minimizes downtime, and instills confidence among stakeholders that critical operations can withstand unforeseen disruptions.

Leveraging Emerging Technologies

The evolution of data storage technologies, including cloud computing, virtualization, and containerization, presents both challenges and opportunities. EMCIE Avamar professionals leverage their expertise to integrate these emerging technologies with established backup infrastructures, ensuring compatibility, efficiency, and scalability. Their knowledge of Avamar architecture and deployment nuances allows for seamless adaptation to hybrid or multi-cloud environments, enabling organizations to expand storage capabilities without sacrificing data protection standards.

Additionally, certified engineers explore innovative approaches such as incremental backups, deduplication optimization, and data tiering to maximize resource utilization. By aligning cutting-edge technologies with strategic backup objectives, they create resilient, high-performance systems that meet both current operational demands and future growth requirements.

Fostering a Culture of Continuous Improvement

The dynamic nature of IT infrastructure necessitates a culture of continuous improvement, and EMCIE Avamar professionals are instrumental in cultivating this mindset. They monitor emerging trends, evaluate new methodologies, and implement process enhancements that elevate organizational data protection capabilities. Their contributions often extend to mentoring colleagues, disseminating best practices, and leading initiatives that refine operational workflows.

This commitment to continuous learning ensures that backup strategies evolve alongside technological advancements, maintaining relevance and effectiveness. Certified professionals not only preserve organizational data but also drive innovation, efficiency, and strategic insight across the IT landscape.

Expanding Professional Influence and Strategic Leadership

EMCIE Avamar certification positions professionals to assume influential roles within organizations, contributing to both tactical operations and strategic planning. Their expertise informs decision-making related to infrastructure investment, risk mitigation, and technology adoption. By translating technical knowledge into actionable insights, certified engineers shape enterprise backup policies, guide cross-functional projects, and support leadership in aligning IT objectives with broader business goals.

Their professional influence extends beyond individual organizations. Certified engineers often participate in industry forums, collaborate on emerging standards, and share innovative practices, thereby contributing to the advancement of enterprise data protection on a global scale. This combination of operational expertise and strategic acumen underscores the enduring relevance of EMCIE Avamar certification in a rapidly evolving technological environment.

Driving Long-Term Career Growth

The acquisition of EMCIE Avamar certification is not only a milestone in technical proficiency but also a strategic investment in long-term career development. Certified professionals are well-positioned to explore leadership roles, advanced technical specializations, and consultancy opportunities. Their ability to navigate complex backup environments, optimize system performance, and align operations with organizational priorities ensures sustained professional relevance.

Continuous engagement with emerging technologies, participation in professional communities, and ongoing skill enhancement further reinforce their career trajectory. Certified engineers cultivate versatility, resilience, and credibility, enabling them to adapt to evolving industry demands and maintain a competitive edge throughout their professional journey.

Conclusion

The future of data backup and recovery is characterized by rapid technological evolution, expanding data volumes, and heightened security demands. EMCIE Avamar certified professionals occupy a critical role in this landscape, leveraging their technical expertise, strategic insight, and practical experience to implement resilient, efficient, and innovative backup solutions. Their mastery of Avamar systems, combined with an understanding of compliance, automation, and emerging technologies, ensures that organizations can safeguard information, optimize performance, and maintain operational continuity.

By fostering a culture of continuous improvement, driving innovation, and contributing to strategic decision-making, these professionals not only protect enterprise data but also shape the trajectory of IT infrastructure and data management practices. The credential represents more than technical accomplishment; it is a gateway to enduring professional influence, organizational resilience, and the capacity to navigate the complexities of an increasingly data-driven world.
