Certification: PowerCenter Data Integration 9.x: Developer Specialist

Certification Full Name: PowerCenter Data Integration 9.x: Developer Specialist

Certification Provider: Informatica

Exam Code: PR000041

Exam Name: PowerCenter Data Integration 9.x: Developer Specialist

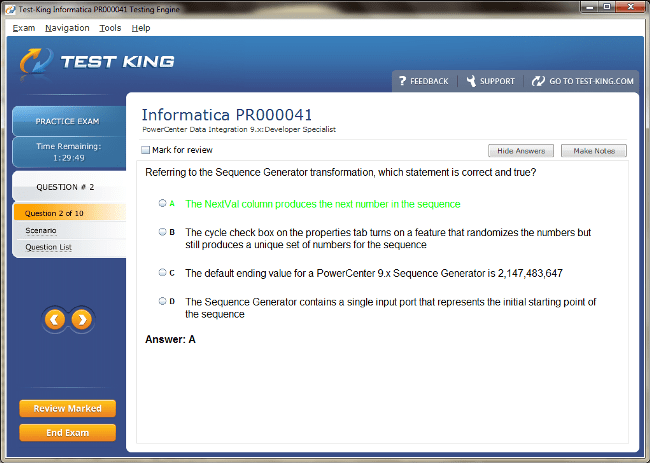

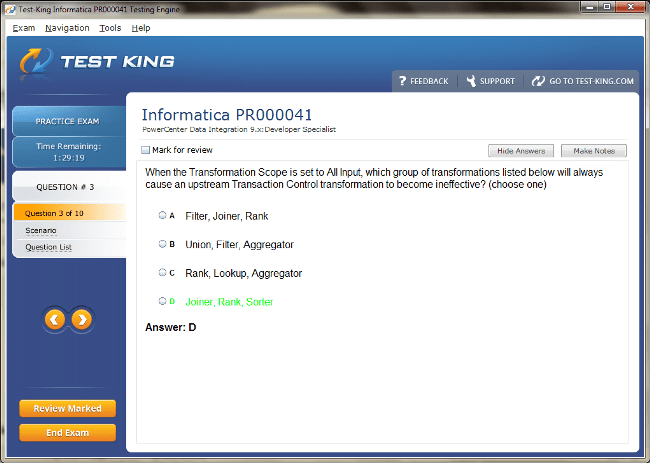

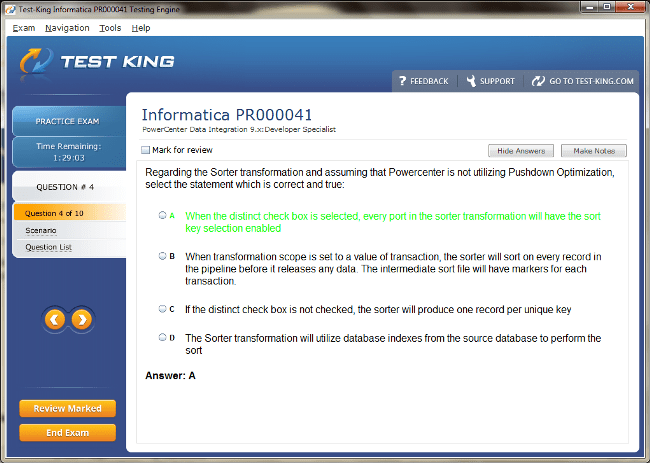

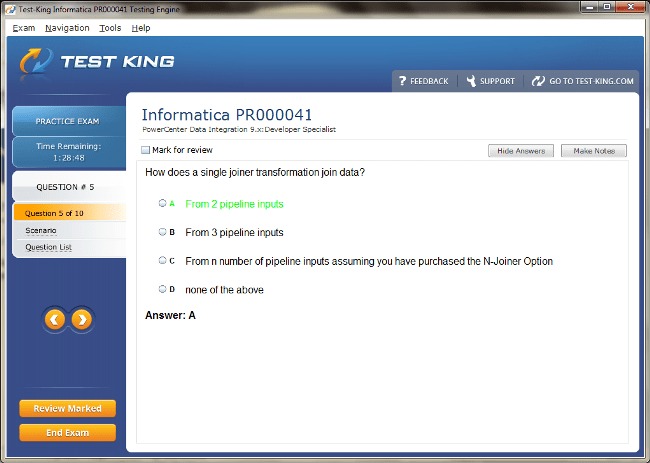

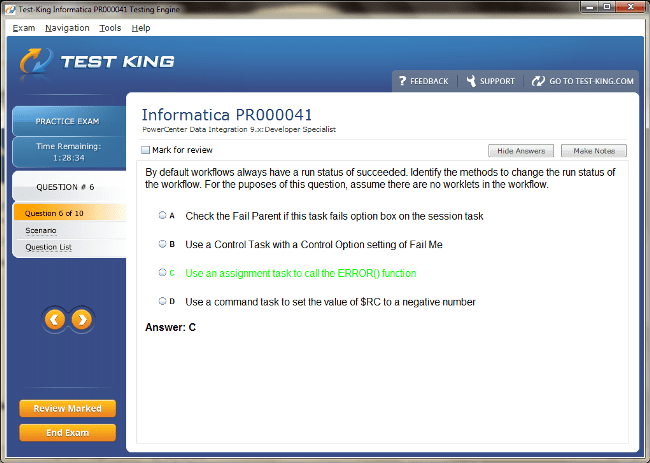

PR000041 Exam Product Screenshots

Understanding the Informatica Certified PowerCenter Data Integration 9.x: Developer Specialist Certification

The Informatica Certified Specialist - Data Integration 10.x credential is a benchmark for professionals working in data architecture and integration. It is not merely an academic accolade but a practical endorsement of a professional's ability to design, develop, and implement data integration solutions with Informatica's suite of tools. Holders are often responsible for moving data across many systems and for ensuring that it is accessible, reliable, and correctly transformed to meet business requirements. The exam covers several domains, including the foundations of data integration, the architecture of enterprise data systems, and the principles of both batch and real-time data processing.

Exploring the Significance and Scope of the Certification

The certification emphasizes proficiency in Informatica Data Integration 10.x, with a focus on three primary products: PowerCenter, Data Engineering Integration, and Data Engineering Streaming. PowerCenter serves as the backbone for traditional ETL processes, providing a robust framework for extracting, transforming, and loading data across diverse sources. It equips professionals with the ability to build scalable workflows and reusable mappings that can handle high volumes of data efficiently. Data Engineering Integration extends these capabilities by addressing the growing need for complex transformations, orchestration of multiple workflows, and integration of large-scale data pipelines. Data Engineering Streaming introduces the paradigms necessary for real-time data processing, enabling organizations to act on incoming data immediately, a critical requirement in modern analytics and operational decision-making environments.

Preparing for this certification demands a combination of theoretical knowledge, hands-on practice, and strategic learning. The exam itself is a ninety-minute online proctored test comprising seventy multiple-choice questions, requiring a minimum score of seventy percent to pass. Each domain carries a different weighting, reflecting the relative emphasis on practical application and conceptual understanding. For example, PowerCenter mappings and workflow execution often command greater attention, while architecture and data streaming are assessed for depth of comprehension. The exam is designed to test both analytical ability and technical skill in realistic scenarios.
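The pass mark translates into a concrete target and a pacing budget. A minimal sketch in Python, assuming the figures quoted above (70 questions, 90 minutes, 70 percent) and a simple raw-percentage score in which every question counts equally; Informatica's actual scoring method may differ.

```python
# Exam figures as quoted in this guide; treat them as assumptions and
# confirm them against Informatica's current exam description.
QUESTIONS = 70
DURATION_MIN = 90
PASS_PERCENT = 70


def min_correct_to_pass(questions: int, pass_percent: int) -> int:
    """Smallest number of correct answers whose share reaches pass_percent.

    Uses integer ceiling division so no floating-point rounding is involved.
    Assumes every question counts equally (no weighting, no unscored items).
    """
    return -(-questions * pass_percent // 100)


def seconds_per_question(duration_min: int, questions: int) -> float:
    """Average time available per question if the whole session is used."""
    return duration_min * 60 / questions


if __name__ == "__main__":
    needed = min_correct_to_pass(QUESTIONS, PASS_PERCENT)
    pace = seconds_per_question(DURATION_MIN, QUESTIONS)
    print(f"Correct answers needed: {needed} of {QUESTIONS}")
    print(f"Average time per question: {pace:.0f} s")
```

Under those assumptions the target is 49 correct answers, with roughly 77 seconds available per question.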

Candidates often begin their preparation by enrolling in formal training programs provided by Informatica. Courses such as PowerCenter Developer Levels 1 and 2, Data Engineering Integration Developer, and Data Engineering Streaming Developer provide a structured pathway to mastery. These programs blend lectures, practical exercises, and scenario-based learning to cultivate a robust understanding of the tools and their application. The diversity of learning formats, including instructor-led sessions, virtual classrooms, and on-demand modules, allows professionals to tailor their preparation to suit their schedules and preferred learning styles. Beyond formal instruction, extensive review of official documentation is indispensable. Guides detailing the installation, configuration, and usage of PowerCenter, Data Engineering Integration, and Data Engineering Streaming offer insights into both standard practices and advanced features. These resources often contain illustrative examples that bridge the gap between theory and practice, equipping candidates with the ability to implement solutions that adhere to best practices and performance standards.

Hands-on practice is an equally vital component of preparation. Professionals benefit from working with Informatica tools in real or simulated environments, gaining practical experience with PowerCenter Designer, Workflow Manager, Workflow Monitor, Developer Tool, and Enterprise Data Catalog (EDC). Creating workflows, executing mappings, monitoring data pipelines, and troubleshooting errors helps candidates internalize how these tools behave and develop an intuitive understanding of data flow management. Free trial versions of the software allow experimentation away from production environments, encouraging candidates to work through complex data integration scenarios and explore advanced configurations. Such experiential learning reinforces theoretical concepts and builds the problem-solving skills needed in real-world work.

Another critical aspect of preparation involves evaluating knowledge through practice exams and quizzes. These assessments provide immediate feedback, highlighting areas of strength and identifying domains that require further attention. Engaging in collaborative learning environments, such as study groups and professional forums, offers additional advantages. These communities allow candidates to share insights, discuss challenges, and exchange practical tips gleaned from prior experience, fostering an atmosphere of collective knowledge enhancement. The combination of structured training, comprehensive documentation review, practical experimentation, and continuous self-assessment creates a holistic preparation approach, enabling candidates to approach the examination with confidence and competence.

The professional benefits of the certification extend well beyond the confines of the examination itself. Achieving recognition as a certified specialist conveys credibility and expertise to employers, colleagues, and industry peers. Professionals who hold this credential are often regarded as authorities in data integration, capable of managing complex workflows, optimizing processes, and ensuring the integrity and accessibility of enterprise data. This recognition can lead to career advancement opportunities, including promotions, higher remuneration, and access to challenging projects that leverage certified expertise. The certification also facilitates networking and knowledge-sharing opportunities within the global Informatica community, allowing professionals to engage with like-minded peers, attend exclusive events, and access resources that support continued professional growth.

The scope of practical application for certified specialists is extensive. Data integration professionals increasingly work in heterogeneous data environments, where they must reconcile disparate sources and ensure that data is transformed, validated, and delivered in a format suitable for analytics, reporting, and operational decision-making. Mastery of PowerCenter equips professionals to handle batch-oriented workflows, expertise in Data Engineering Integration supports large-scale pipeline orchestration, and Data Engineering Streaming provides the skills needed for real-time data processing. In practice, this may involve designing ETL processes for financial data consolidation, integrating customer information across cloud and on-premises systems, or implementing streaming solutions that support immediate business decisions. The ability to combine these capabilities makes certified specialists valuable contributors to data-driven enterprises.

In addition to technical expertise, the certification encourages the development of strategic thinking and problem-solving skills. Candidates learn to evaluate the optimal approach for data movement and transformation based on business requirements, system constraints, and performance considerations. They are trained to identify potential bottlenecks, implement error handling mechanisms, and optimize workflow execution for both efficiency and reliability. These competencies are critical in enterprise environments where data accuracy, timeliness, and availability directly impact business outcomes. The integration of technical mastery with analytical foresight ensures that certified professionals can not only execute data integration tasks but also contribute to strategic decision-making processes within their organizations.

The certification also emphasizes adaptability and continuous learning. Informatica’s ecosystem is dynamic, with frequent updates and enhancements to its suite of tools. Certified specialists are expected to maintain proficiency with new functionalities, architectural paradigms, and integration methodologies. This requirement fosters a mindset of lifelong learning, compelling professionals to remain current with industry trends, emerging technologies, and best practices in data integration. Continuous engagement with educational resources, professional forums, and networking communities supports the ongoing development of skills and ensures that certified specialists remain competitive in an evolving landscape of data management.

Moreover, the journey toward certification cultivates professional resilience and discipline. Preparing for a rigorous exam that combines conceptual, practical, and analytical challenges demands structured study plans, persistent practice, and the ability to self-assess objectively. Professionals develop time management skills, critical thinking abilities, and perseverance—qualities that are transferable to everyday professional responsibilities. These attributes contribute to a holistic development that extends beyond technical competence, reinforcing the overall effectiveness and maturity of certified individuals in their professional roles.

The impact of this certification also resonates within organizational contexts. Data integration projects often involve collaboration across multiple teams, including business analysts, system architects, developers, and operational managers. Certified specialists serve as bridges between technical implementation and business strategy, ensuring that integration solutions align with organizational objectives. Their expertise supports the creation of reliable, scalable, and auditable data pipelines, which in turn enhances data governance, quality assurance, and regulatory compliance. In environments where data is a strategic asset, the presence of certified professionals enhances organizational capability, mitigates risks associated with data mismanagement, and drives informed decision-making.

From a professional development perspective, attaining the Informatica Certified Specialist - Data Integration 10.x certification can be transformative. It provides a structured framework for skill acquisition, validates proficiency in a competitive marketplace, and opens pathways to advanced roles in data architecture, data engineering, and integration consulting. Professionals who embrace this certification often find themselves better equipped to navigate complex technological landscapes, manage enterprise-level projects, and contribute meaningfully to the strategic use of data within their organizations. The combination of technical mastery, strategic insight, and professional credibility forms the foundation upon which successful data integration careers are built.

The emphasis on real-world applicability throughout the certification ensures that professionals are not only prepared to pass an exam but also ready to address the multifaceted challenges encountered in contemporary data environments. Certified specialists are adept at leveraging Informatica tools to implement solutions that are efficient, scalable, and maintainable, enabling organizations to harness the full potential of their data assets. This practical orientation, coupled with rigorous theoretical grounding, distinguishes the Informatica Certified Specialist - Data Integration 10.x certification as a credential that carries both immediate professional benefits and long-term career value.

Ultimately, the certification represents a convergence of knowledge, skill, and professional recognition. It signals to the marketplace that an individual possesses not only the technical capability to design, develop, and deploy data integration solutions but also the analytical acumen, strategic foresight, and adaptability required to excel in complex data environments. By achieving this credential, professionals affirm their commitment to excellence, continuous learning, and leadership in the evolving field of data integration and enterprise data architecture.

Understanding the Examination Format and Knowledge Requirements

The Informatica Certified Specialist - Data Integration 10.x credential demands an intricate comprehension of data integration concepts, architecture, and practical execution using the suite of Informatica tools. The examination evaluates a candidate's ability to integrate, manipulate, and orchestrate data efficiently across diverse environments while ensuring accuracy, performance, and scalability. It is an online proctored test that lasts ninety minutes and comprises seventy multiple-choice questions, requiring a minimum score of seventy percent to achieve certification. Each question is designed to assess both theoretical understanding and practical aptitude, reflecting the complexity of real-world scenarios in enterprise data environments.

Candidates preparing for the examination must develop an understanding of six interrelated domains that collectively define proficiency in data integration. The first domain encompasses Data Integration Concepts, which delves into the foundational principles of data extraction, transformation, and loading. This includes understanding different data types, transformation rules, error handling mechanisms, and data validation techniques. It requires candidates to grasp how raw data from heterogeneous sources can be standardized, cleansed, and structured for downstream analytics or operational processing. Knowledge of metadata management is also crucial within this domain, as it allows professionals to track data lineage, maintain audit trails, and ensure the reliability of integrated data.
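The concepts in this domain (type conversion, cleansing, standardization, validation rules, error routing, and lineage) can be seen together in a small pipeline. The sketch below is plain Python written only to illustrate those ideas; it is not Informatica's API, and the field names and rules are hypothetical. In PowerCenter the same steps are expressed with transformations and session reject files rather than code.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import date
from typing import Optional


@dataclass
class LoadResult:
    loaded: list = field(default_factory=list)    # rows that reached the target
    rejected: list = field(default_factory=list)  # (raw_row, reason) pairs, like a reject file
    lineage: list = field(default_factory=list)   # which source line produced each target row


def transform(raw: dict) -> dict:
    """Standardize and cleanse one source row (field names are hypothetical)."""
    return {
        "customer_id": int(raw["cust_id"]),          # type conversion
        "name": raw["name"].strip().title(),         # cleansing
        "country": raw["country"].strip().upper(),   # standardization
        "signup_date": date.fromisoformat(raw["signup"].strip()),
    }


def validate(row: dict) -> Optional[str]:
    """Apply business rules; return a rejection reason, or None if the row passes."""
    if len(row["country"]) != 2:
        return "country is not a 2-letter code"
    if row["signup_date"] > date.today():
        return "signup_date is in the future"
    return None


def run_pipeline(source_name: str, rows: list[dict]) -> LoadResult:
    result = LoadResult()
    for line_no, raw in enumerate(rows, start=1):
        try:
            clean = transform(raw)
        except (KeyError, ValueError) as exc:
            # Conversion errors are routed to the rejects instead of aborting the run.
            result.rejected.append((raw, f"transform error: {exc}"))
            continue
        reason = validate(clean)
        if reason is not None:
            result.rejected.append((raw, reason))
            continue
        result.loaded.append(clean)
        result.lineage.append(
            {"target_key": clean["customer_id"], "source": source_name, "source_line": line_no}
        )
    return result
```

Keeping rejected rows with a reason, rather than failing the whole run, mirrors the "load what is valid, explain what is not" approach the domain emphasizes.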

The Architecture domain evaluates a candidate's insight into the design and deployment of enterprise data integration frameworks. Candidates are expected to understand how various components of Informatica interact, how data flows across systems, and how integration solutions are structured for reliability and scalability. This includes recognizing the interdependencies among PowerCenter, Data Engineering Integration, and Data Engineering Streaming, and determining how these tools can be orchestrated to meet complex business requirements. Architecture knowledge also involves assessing system constraints, identifying potential bottlenecks, and implementing optimization strategies to ensure efficient and uninterrupted data processing.
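One common way to locate a bottleneck in a PowerCenter session is to compare the thread statistics the session log reports for the reader, transformation, and writer threads: the thread that is busy for the largest share of the run is usually the first place to look. The Python sketch below only encodes that comparison. The percentages are made-up sample values, and extracting them from a real session log is left out.

```python
from __future__ import annotations


def rank_stages(busy_pct: dict[str, float]) -> list[tuple[str, float]]:
    """Order stages from busiest to least busy.

    busy_pct maps a stage name to the percentage of its run time the thread
    spent working rather than waiting (0-100).
    """
    if not busy_pct:
        raise ValueError("no thread statistics supplied")
    return sorted(busy_pct.items(), key=lambda item: item[1], reverse=True)


def likely_bottleneck(busy_pct: dict[str, float]) -> str:
    """The busiest stage is the first candidate to investigate."""
    return rank_stages(busy_pct)[0][0]


if __name__ == "__main__":
    # Hypothetical values for one session run.
    sample = {"reader": 32.5, "transformation": 94.1, "writer": 41.7}
    print(likely_bottleneck(sample))
    for stage, pct in rank_stages(sample):
        print(f"{stage:<15}{pct:5.1f}%")
```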

Proficiency in PowerCenter forms a substantial component of the examination, emphasizing the practical aspects of creating and executing mappings, workflows, and transformations. Candidates must understand how to design reusable mappings that optimize performance, configure workflows for automation and error handling, and monitor execution for efficiency. This knowledge is not limited to simple data movement but extends to designing sophisticated ETL pipelines that integrate multiple sources, apply complex transformations, and deliver consistent results. Understanding the nuances of session configuration, partitioning strategies, and workflow scheduling is essential for professionals aiming to achieve mastery in PowerCenter.
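PowerCenter offers several partition types, including pass-through, round-robin, hash (auto-keys and user keys), and key range. The sketch below contrasts two of the underlying ideas in plain Python: hash partitioning keeps every row that shares a key in one partition, which matters when a downstream aggregation or sort needs all rows for a group together, while round-robin simply balances row counts. It illustrates the concepts only, not how the Integration Service implements them, and the `region` field is hypothetical.

```python
from __future__ import annotations

import zlib
from itertools import cycle


def hash_partition(rows: list[dict], key: str, n: int) -> list[list[dict]]:
    """Send every row with the same key value to the same partition."""
    parts: list[list[dict]] = [[] for _ in range(n)]
    for row in rows:
        # zlib.crc32 is stable across runs, unlike Python's salted built-in hash().
        idx = zlib.crc32(str(row[key]).encode("utf-8")) % n
        parts[idx].append(row)
    return parts


def round_robin_partition(rows: list[dict], n: int) -> list[list[dict]]:
    """Spread rows evenly across partitions, ignoring their content."""
    parts: list[list[dict]] = [[] for _ in range(n)]
    for row, idx in zip(rows, cycle(range(n))):
        parts[idx].append(row)
    return parts
```

Hash partitioning can leave partitions uneven when a few keys dominate, which is the trade-off against round-robin's even spread.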

Data Engineering Integration is another critical domain, requiring candidates to manage complex, large-scale integration pipelines. This includes understanding advanced transformation techniques, performance optimization, and workflow orchestration across multiple datasets and systems. Candidates must be adept at handling high-volume data processing, ensuring data integrity, and troubleshooting potential issues. This domain tests the ability to translate business requirements into efficient, maintainable integration solutions that align with organizational objectives while addressing operational constraints and performance considerations.

The Data Engineering Streaming domain focuses on real-time data processing and immediate availability of information for analytical and operational purposes. Candidates are expected to understand the principles of streaming architecture, including the ingestion, transformation, and distribution of data in near real-time. Practical knowledge of stream configuration, monitoring, and error recovery mechanisms is essential. This domain evaluates the ability to design streaming solutions that maintain low latency, high reliability, and data accuracy, enabling organizations to act swiftly on incoming data. The examination in this area ensures that certified specialists can bridge traditional batch processing techniques with real-time analytics demands, providing comprehensive solutions that cater to modern business requirements.
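Streaming designs commonly group events into time windows and decide when a window is complete even though events can arrive out of order. The Python sketch below shows one generic approach, tumbling windows closed by a watermark with an allowed-lateness margin. It is a conceptual illustration only, not the Data Engineering Streaming API, and the event format and parameter names are hypothetical.

```python
from __future__ import annotations

from collections import defaultdict
from typing import Iterable, Iterator


def tumbling_counts(
    events: Iterable[tuple[int, str]], window: int, lateness: int = 0
) -> Iterator[tuple[int, dict[str, int]]]:
    """Count events per key in fixed, non-overlapping windows of `window` seconds.

    events arrive as (event_time_seconds, key), possibly out of order. A window
    is emitted once the watermark (latest event time seen minus `lateness`)
    passes its end; events for windows already emitted are dropped.
    """
    open_windows: dict[int, dict[str, int]] = defaultdict(lambda: defaultdict(int))
    max_seen: int | None = None
    for ts, key in events:
        max_seen = ts if max_seen is None else max(max_seen, ts)
        watermark = max_seen - lateness
        start = ts - ts % window
        if start + window <= watermark:
            continue  # too late: a real engine might send this to a side output
        open_windows[start][key] += 1
        # Emit every window that the watermark has now passed, oldest first.
        for s in sorted(w for w in open_windows if w + window <= watermark):
            yield s, dict(open_windows.pop(s))
    # End of input: flush whatever is still open.
    for s in sorted(open_windows):
        yield s, dict(open_windows.pop(s))
```

A larger `lateness` accepts more out-of-order events at the cost of emitting results later, which is the latency-versus-accuracy trade-off the domain describes.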

Operational best practices constitute the final knowledge domain, integrating aspects of data governance, monitoring, and performance tuning. Candidates are required to demonstrate awareness of best practices in workflow monitoring, resource management, and error handling. This includes implementing strategies to ensure data quality, maintain auditability, and adhere to compliance requirements. Understanding operational workflows and troubleshooting methodologies ensures that professionals can sustain enterprise-level integration environments while minimizing downtime and optimizing system efficiency.
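Auditability often comes down to reconciling control figures after each run: every source row should be accounted for as either loaded or rejected, and a control total on a key amount column should balance. A minimal Python sketch of such a check, assuming the counts and totals have already been collected (for example from run statistics or count queries); the record and field names are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass
from decimal import Decimal


@dataclass(frozen=True)
class RunAudit:
    """Control figures captured for one load run (all fields hypothetical)."""
    run_id: str
    source_rows: int
    target_rows: int
    rejected_rows: int
    source_total: Decimal    # sum of a money column read from the source
    target_total: Decimal    # same sum over rows written to the target
    rejected_total: Decimal  # same sum over rejected rows

    def problems(self) -> list[str]:
        issues = []
        # Assumes no rows are dropped on purpose (e.g. by a filter); if some
        # are, their count and total would need their own term here.
        if self.source_rows != self.target_rows + self.rejected_rows:
            issues.append(
                f"{self.run_id}: {self.source_rows} source rows vs "
                f"{self.target_rows} loaded + {self.rejected_rows} rejected"
            )
        # Decimal keeps the money comparison exact.
        if self.source_total != self.target_total + self.rejected_total:
            issues.append(
                f"{self.run_id}: control total {self.source_total} vs "
                f"{self.target_total + self.rejected_total}"
            )
        return issues
```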

Effective preparation for the examination involves a strategic blend of structured training, extensive study of official documentation, and immersive practical experience. Formal courses offered by Informatica, such as PowerCenter Developer Levels 1 and 2, Data Engineering Integration Developer, and Data Engineering Streaming Developer, provide a comprehensive foundation for each domain. These programs combine theoretical instruction with hands-on exercises, scenario-based problem solving, and interactive learning, ensuring that candidates develop both conceptual knowledge and practical skills. The diversity of delivery formats, including instructor-led, virtual, and on-demand courses, allows flexibility in learning while maintaining the depth of study required for mastery.

Official guides and documentation are invaluable for understanding the intricacies of the tools and their application. The PowerCenter Installation and Configuration Guide, the Data Engineering Integration User Guide, and the Data Engineering Streaming User Guide provide step-by-step instructions, detailed examples, and explanations of advanced functionality. They walk candidates through installation, configuration, and execution, and illustrate real-world scenarios that help bridge theory and practice. Combining documentation review with practical experimentation prepares candidates to implement, troubleshoot, and optimize integration solutions with confidence.

Hands-on practice is paramount to mastering the domains assessed in the examination. Professionals benefit from creating mappings, orchestrating workflows, and executing transformations in trial environments or sandbox systems. Using tools such as PowerCenter Designer, Workflow Manager, Workflow Monitor, Developer Tool, and EDC enables candidates to gain experiential knowledge that reinforces theoretical concepts. Practice projects allow individuals to explore complex scenarios, experiment with partitioning strategies, configure error handling, and monitor execution for performance insights. This experiential learning is crucial for developing a nuanced understanding of how tools operate in real-world enterprise environments and preparing candidates to handle operational challenges efficiently.

Regular assessment through practice exams and quizzes supports continuous improvement and self-evaluation. By identifying areas of strength and weakness, candidates can allocate study time more effectively and focus on domains that require deeper understanding. Collaborative learning environments, such as professional forums and study groups, further enhance preparation by facilitating the exchange of knowledge, sharing of best practices, and discussion of practical problem-solving approaches. This communal learning approach allows candidates to benefit from the collective experience of peers and experts, enriching their comprehension and readiness for the examination.
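Identifying weak areas is easier when practice results are tallied per domain. A small Python sketch, assuming each practice question is tagged with one of the domains described in this guide; the 70 percent target simply mirrors the quoted pass mark and is an assumption, not an official per-domain threshold.

```python
from __future__ import annotations

from collections import Counter


def domain_accuracy(results: list[tuple[str, bool]]) -> dict[str, float]:
    """results holds one (domain, answered_correctly) pair per practice question."""
    attempted: Counter = Counter()
    correct: Counter = Counter()
    for domain, ok in results:
        attempted[domain] += 1
        if ok:
            correct[domain] += 1
    return {d: correct[d] / attempted[d] for d in attempted}


def weakest_domains(results: list[tuple[str, bool]], target: float = 0.70) -> list[str]:
    """Domains below the target accuracy, weakest first."""
    acc = domain_accuracy(results)
    return sorted((d for d, a in acc.items() if a < target), key=lambda d: acc[d])
```

Running this after each practice attempt gives an ordered list of domains to revisit before moving on.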

In addition to technical and operational expertise, the examination implicitly evaluates analytical thinking, problem-solving skills, and decision-making capabilities. Candidates must demonstrate the ability to assess the requirements of complex integration tasks, determine the most efficient approach, and apply tools and techniques effectively. This involves weighing trade-offs between performance and complexity, selecting optimal workflows, and implementing error recovery strategies that minimize disruption. Mastery of these skills ensures that certified professionals are not only capable of executing integrations but can also optimize processes, enhance reliability, and support strategic organizational objectives.
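One recovery strategy that minimizes disruption is to record progress at each commit and resume from the last committed point instead of rerunning the whole load. PowerCenter provides its own workflow and session recovery options, which are configured rather than coded; the Python sketch below only illustrates the underlying checkpoint-and-resume idea, and the function and parameter names are hypothetical.

```python
def load_with_checkpoint(rows, target, checkpoint, commit_interval, fail_at=None):
    """Load rows into `target` in batches, resuming after the last committed row.

    checkpoint: dict that survives between runs (a file or control table in practice)
    fail_at:    row index at which to simulate a crash (for demonstration only)
    """
    start = checkpoint.get("committed", 0)
    batch = []
    for i in range(start, len(rows)):
        if fail_at is not None and i == fail_at:
            raise RuntimeError(f"simulated failure at row {i}")
        batch.append(rows[i])
        if len(batch) == commit_interval or i == len(rows) - 1:
            # In a real system the write and the checkpoint update must be
            # atomic (same transaction), or a crash between them duplicates rows.
            target.extend(batch)
            checkpoint["committed"] = i + 1
            batch = []
```

After a simulated failure, a second call with the same checkpoint loads only the rows that were not yet committed, so each row reaches the target exactly once.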

The professional implications of successfully navigating the examination extend into career development and organizational impact. Certification validates an individual’s ability to implement scalable, maintainable, and efficient data integration solutions, signaling competence and credibility to employers, colleagues, and industry peers. It enhances career prospects, facilitating promotions, new job opportunities, and roles of increasing responsibility. Certified professionals are often sought after for projects requiring deep expertise in data orchestration, transformation, and real-time analytics, positioning them as essential contributors to organizational success.

Mastery of the knowledge domains also equips professionals to participate meaningfully in enterprise-level planning and architecture discussions. Certified specialists are capable of advising on system design, workflow optimization, and integration strategies that align with business goals. Their insights contribute to improved governance, regulatory compliance, and operational efficiency, demonstrating the value of certification beyond individual skill assessment. By integrating theoretical knowledge with hands-on expertise, professionals develop a holistic understanding of data integration processes, enabling them to anticipate challenges, mitigate risks, and deliver reliable, high-quality solutions.

The emphasis on real-world applicability throughout the examination ensures that certification is not merely a theoretical exercise but a reflection of practical capability. Candidates gain experience with scenarios that mimic the complexities of enterprise data ecosystems, including heterogeneous data sources, high-volume pipelines, and real-time processing demands. This prepares professionals to manage diverse projects, implement solutions that adhere to best practices, and maintain operational resilience. It also fosters adaptability, as candidates must understand and respond to evolving requirements, system constraints, and technological innovations in data integration.

Continuous learning and professional growth are integral components of the certification journey. Informatica’s tools and methodologies evolve regularly, introducing new features, architectural enhancements, and optimization techniques. Certified specialists are encouraged to maintain proficiency with the latest versions, explore novel integration strategies, and stay informed about emerging trends in data engineering and real-time analytics. Engagement with the global community of certified professionals, participation in workshops, and exploration of knowledge resources contribute to ongoing development, ensuring sustained relevance and professional excellence.

Achieving mastery across the examination’s domains requires a disciplined approach, combining structured study plans, hands-on experimentation, and reflective practice. Candidates must allocate time to comprehend complex concepts, practice workflows, troubleshoot scenarios, and evaluate performance outcomes. This disciplined preparation builds analytical rigor, problem-solving aptitude, and operational acumen, which are directly transferable to professional responsibilities and complex enterprise projects. The interplay of theoretical knowledge and practical skill development cultivates confidence and competence, enabling professionals to perform effectively in high-stakes data integration environments.

The examination also underscores the importance of integrating strategic thinking with technical execution. Professionals must not only understand how to implement integrations but also why specific approaches are optimal in a given context. They learn to evaluate workflow design choices, assess the implications of data transformations, and optimize resource allocation for efficiency and reliability. This blend of technical proficiency and strategic insight differentiates certified specialists as thought leaders capable of guiding complex projects and influencing organizational data strategies.

The combination of comprehensive domain knowledge, practical experience, and analytical competence ensures that certified professionals are well-equipped to address challenges in diverse operational settings. They can manage complex ETL processes, orchestrate large-scale pipelines, monitor performance, troubleshoot errors, and optimize execution to meet evolving business needs. This expertise extends beyond day-to-day operations, empowering professionals to contribute to strategic initiatives, advise on architecture design, and mentor peers, thereby amplifying their impact within their organizations.

The knowledge acquired through preparation and examination for the Informatica Certified Specialist - Data Integration 10.x credential fosters a mindset of continuous improvement and intellectual curiosity. Professionals develop the capacity to explore innovative approaches to integration, experiment with new methodologies, and refine processes based on experiential learning. This proactive engagement with evolving practices enhances adaptability, ensures long-term competence, and positions individuals as indispensable contributors to their organizations’ data-driven success.

The examination, therefore, serves as a comprehensive measure of proficiency, encompassing not only technical capabilities but also operational acumen, strategic insight, and adaptive thinking. It evaluates candidates on their ability to implement, optimize, and manage data integration processes while anticipating challenges, mitigating risks, and ensuring quality outcomes. The resulting certification reflects a synthesis of knowledge, skill, and practical wisdom, validating a professional’s readiness to navigate the complexities of enterprise data environments.

Building a Comprehensive Approach to Mastery

Preparation for the Informatica Certified Specialist - Data Integration 10.x certification requires a methodical blend of structured study, hands-on practice, and strategic knowledge reinforcement. Success in this rigorous examination is contingent upon cultivating both conceptual understanding and practical skills in the usage of Informatica tools, including PowerCenter, Data Engineering Integration, and Data Engineering Streaming. Candidates are expected to navigate complex integration scenarios, design scalable workflows, and implement data transformations that uphold integrity, performance, and operational efficiency.

The initial phase of preparation typically involves enrolling in formal training programs offered by Informatica. These courses provide a structured framework for developing a profound understanding of the tools and their functionalities. PowerCenter Developer Levels 1 and 2 courses guide candidates through the creation of mappings, configuration of workflows, and execution of transformations. Participants learn to optimize session performance, manage error handling, and create reusable components that enhance scalability and maintainability. Data Engineering Integration Developer courses expand on these concepts by emphasizing orchestration of large-scale workflows, advanced transformation techniques, and performance tuning for high-volume pipelines. Data Engineering Streaming Developer programs introduce real-time data ingestion, transformation, and distribution strategies, preparing candidates to meet the demands of immediate data availability and low-latency processing. These formal courses integrate theoretical instruction with practical exercises, enabling candidates to apply learned concepts in simulated or live environments.

Beyond structured courses, comprehensive study of official documentation is critical to mastering the nuances of each tool. The PowerCenter Installation and Configuration Guide provides step-by-step instructions for setup, configuration, and operational management, while the Data Engineering Integration User Guide and Data Engineering Streaming User Guide delve into advanced functionalities, configuration settings, and best practices for complex workflows. These resources also include illustrative examples and practical scenarios, offering insight into real-world applications. By meticulously reviewing these guides, candidates gain a deeper appreciation of system intricacies, workflow dependencies, and operational strategies, which are essential for successfully executing integration tasks during the examination.

Hands-on practice constitutes a pivotal aspect of preparation. Utilizing Informatica tools in a controlled environment allows candidates to internalize theoretical concepts and develop intuitive operational skills. PowerCenter Designer, Workflow Manager, Workflow Monitor, Developer Tool, and EDC provide a platform for experimenting with mappings, orchestrating workflows, and executing transformations. Creating complex pipelines, configuring session properties, and monitoring workflow execution cultivates a practical understanding of performance optimization and error resolution. These exercises reinforce learning, build confidence, and prepare candidates to handle the dynamic challenges of enterprise-level data integration projects. Trial versions of the software facilitate experimentation without the risk of affecting production systems, encouraging candidates to explore advanced features, test edge cases, and implement real-world scenarios.
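Beyond Workflow Manager, practice runs can be started from the command line with `pmcmd startworkflow`, which makes repeated experiments easier to script. The Python sketch below builds such a command as an argument list. The service, domain, folder, workflow, and environment-variable names are hypothetical, and the flags shown (`-sv`, `-d`, `-uv`, `-pv`, `-f`, `-paramfile`, `-wait`) should be checked against the pmcmd reference for your PowerCenter version.

```python
from __future__ import annotations

import subprocess


def startworkflow_cmd(service: str, domain: str, folder: str, workflow: str,
                      user_env: str = "PM_USER", password_env: str = "PM_PASSWORD",
                      param_file: str | None = None, wait: bool = True) -> list[str]:
    """Build a pmcmd startworkflow command line as an argument list.

    Credentials are read by pmcmd from the named environment variables
    (-uv/-pv), so they do not appear in the process list or shell history.
    """
    cmd = ["pmcmd", "startworkflow",
           "-sv", service, "-d", domain,
           "-uv", user_env, "-pv", password_env,
           "-f", folder]
    if param_file:
        cmd += ["-paramfile", param_file]
    if wait:
        cmd.append("-wait")  # block until the workflow finishes
    cmd.append(workflow)     # the workflow name comes last
    return cmd


def run_workflow(cmd: list[str]) -> int:
    """Run the command and return pmcmd's exit code (0 is expected on success)."""
    completed = subprocess.run(cmd, capture_output=True, text=True)
    print(completed.stdout)
    return completed.returncode
```

Passing a list to `subprocess.run` avoids shell quoting problems with folder or workflow names; the run itself can then be watched in Workflow Monitor.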

The integration of practice with theory is further enhanced by the use of practice exams and quizzes. Simulated assessments allow candidates to evaluate their knowledge, identify strengths and weaknesses, and refine their approach to problem-solving. Repeated exposure to exam-style questions develops familiarity with the format, improves time management, and strengthens analytical skills. The process of reviewing incorrect responses and understanding the underlying concepts fosters deeper comprehension and enhances readiness for the actual examination.

Collaborative learning plays a significant role in preparation. Engaging with study groups, online communities, and professional forums allows candidates to share insights, discuss challenges, and learn from the experiences of peers. This collective approach enriches understanding by exposing candidates to diverse perspectives and practical strategies for handling complex integration scenarios. It also provides opportunities for mentorship and guidance from individuals who have successfully navigated the examination, offering valuable tips and approaches that may not be immediately apparent through self-study alone.

Developing a disciplined study plan is essential for effective preparation. Allocating dedicated time for structured learning, documentation review, hands-on practice, and self-assessment ensures comprehensive coverage of all domains. Prioritizing areas that require additional focus, such as advanced transformations, real-time streaming configurations, or performance optimization, allows candidates to build confidence and competency across each aspect of data integration. Regularly revisiting previously studied material reinforces retention and facilitates a deeper understanding of interrelated concepts.

Practical exercises should mirror real-world scenarios as closely as possible. Candidates benefit from designing and executing workflows that integrate multiple data sources, apply complex transformations, and manage both batch and streaming data. Experimenting with workflow scheduling, error recovery mechanisms, and resource allocation strategies enhances operational proficiency. Understanding the interactions between different components, such as mapping variables, session parameters, and workflow dependencies, cultivates a holistic perspective that is critical for effective data integration management.
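One concrete way to see how mapping parameters, session parameters, and workflow scope fit together is to write a PowerCenter-style parameter file. The sketch below renders one from Python dictionaries. It assumes the documented section-heading convention `[folder.WF:workflow.ST:session]`, with mapping parameters and variables prefixed `$$` and session parameters prefixed `$`; every folder, workflow, session, connection, and parameter name in it is hypothetical.

```python
def render_param_file(folder, workflow, session, session_params, mapping_params):
    """Render one section of a PowerCenter-style parameter file as text.

    Assumption: the heading follows the [folder.WF:workflow.ST:session]
    convention. All names passed in below are hypothetical examples.
    """
    lines = [f"[{folder}.WF:{workflow}.ST:{session}]"]
    # Session parameters carry a single '$' prefix (e.g. $DBConnection_Src).
    for name, value in session_params.items():
        lines.append(f"${name}={value}")
    # Mapping parameters and variables carry a '$$' prefix.
    for name, value in mapping_params.items():
        lines.append(f"$${name}={value}")
    return "\n".join(lines) + "\n"


text = render_param_file(
    folder="DEV_SALES",
    workflow="wf_load_customers",
    session="s_m_load_customers",
    session_params={
        "DBConnection_Src": "ORA_SRC",
        "InputFile_Cust": "/data/in/customers.csv",
    },
    mapping_params={"LastExtractDate": "2024-01-01"},
)
print(text)
```

Keeping values such as connections, file paths, and extract dates in a file like this, rather than hard-coding them in mappings, is what lets the same workflow run unchanged across development, test, and production. Sessions and workflows can reference the file through their parameter-file settings or, for example, the `-paramfile` option of `pmcmd startworkflow`.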

Preparation also involves cultivating analytical and problem-solving skills. Candidates must learn to evaluate integration challenges, determine optimal approaches, and implement solutions that balance performance, maintainability, and reliability. This requires the ability to diagnose issues, optimize workflows, and anticipate potential bottlenecks. Developing these skills ensures that candidates are equipped to manage the complexities of enterprise data environments and to apply their knowledge effectively in dynamic scenarios.

Time management is another crucial aspect of preparation. Allocating sufficient periods for conceptual learning, hands-on practice, and self-assessment ensures balanced development across all required competencies. Practicing under timed conditions simulates the examination environment, helping candidates improve their efficiency and accuracy when navigating multiple-choice questions. Structured study schedules also reduce cognitive overload, allowing for focused learning and gradual mastery of intricate concepts.

Maintaining a focus on current industry trends enhances the effectiveness of preparation. Informatica tools evolve continually, incorporating new features, architectural enhancements, and optimized functionalities. Staying informed about these changes ensures that candidates are conversant with the latest best practices, integration techniques, and real-time data processing methodologies. Engaging with webinars, workshops, professional blogs, and community discussions provides exposure to emerging approaches, enriching practical knowledge and fostering adaptability.

Effective preparation encompasses strategic goal setting. Establishing clear objectives, such as mastering specific tools, achieving competency in advanced transformations, or executing efficient streaming workflows, provides direction and motivation. Setting milestones enables candidates to measure progress, maintain momentum, and adjust study strategies as needed. Goal-oriented preparation reinforces accountability and enhances the likelihood of success.

Together, structured learning, documentation review, hands-on practice, self-assessment, and collaborative engagement form a comprehensive preparation strategy. Each component reinforces the others, balancing theoretical knowledge, operational proficiency, and analytical thinking. Candidates learn to implement integrations efficiently, optimize workflows, troubleshoot errors, and apply advanced techniques in real-world scenarios. This multidimensional approach improves the chances of passing the examination and also builds long-term competence in professional practice.

Immersive exercises and project-based practice are particularly effective in consolidating learning. By simulating enterprise-scale integration scenarios, candidates can explore complex workflows, integrate disparate data sources, and manage transformation processes. These exercises encourage critical thinking, problem-solving, and resource optimization, cultivating the ability to respond effectively to operational challenges. Experimenting with real-time data streams, high-volume processing, and multi-system orchestration builds confidence and familiarity with scenarios that are likely to appear in professional practice and examination questions.

Continuous reflection on learning outcomes enhances preparation quality. Candidates should assess their performance in practice exercises, identify gaps in understanding, and refine their approach accordingly. Reflective practice promotes deep learning, strengthens retention, and fosters a proactive mindset. By analyzing errors, revisiting difficult concepts, and experimenting with alternative strategies, candidates develop resilience, adaptability, and a nuanced comprehension of data integration principles.

Engaging with mentors and experienced professionals further enriches preparation. Guidance from individuals who have successfully completed the certification process provides insight into effective study techniques, common pitfalls, and practical applications of knowledge. Mentorship supports conceptual clarity, reinforces best practices, and enhances confidence. Interaction with seasoned professionals also offers exposure to nuanced operational strategies, industry-specific challenges, and advanced problem-solving approaches that may not be covered in formal training or documentation.

Integrating theoretical knowledge with hands-on practice ensures a seamless transition from preparation to application. Candidates learn to design, implement, and monitor workflows, configure real-time streaming solutions, and execute complex transformations with precision and efficiency. Familiarity with operational monitoring, error recovery, and performance optimization enables professionals to manage enterprise-level environments with competence and confidence. By combining conceptual understanding, practical experience, and analytical thinking, candidates develop the versatility required to excel in the dynamic field of data integration.

The preparation process fosters critical cognitive skills, including analytical reasoning, strategic planning, and decision-making under constraints. Candidates are trained to evaluate integration challenges, prioritize tasks, and implement solutions that maximize efficiency and maintain data integrity. These skills are transferable to professional responsibilities, enabling certified specialists to contribute meaningfully to enterprise data strategies, optimize operational processes, and support informed decision-making.

Maintaining consistency and discipline throughout the preparation journey is essential. Regular engagement with study material, dedicated hands-on practice, and continuous assessment promote incremental mastery of complex concepts. Developing a routine that balances learning, practice, and reflection reinforces retention, strengthens skill acquisition, and prepares candidates to navigate the examination confidently.

Professional growth and certification readiness are enhanced by exposure to diverse scenarios and environments. Candidates benefit from exploring various data integration challenges, experimenting with both batch and streaming workflows, and managing multiple interconnected processes. This exposure cultivates adaptability, reinforces problem-solving capabilities, and deepens understanding of the practical implications of data integration principles.

In addition to technical preparation, candidates must cultivate a mindset conducive to success. Patience, persistence, and intellectual curiosity are essential qualities for mastering the complexities of data integration. Embracing challenges, reflecting on experiences, and seeking continuous improvement ensures sustained engagement and long-term competence.

Integrating all aspects of preparation (structured learning, documentation review, hands-on experimentation, collaborative engagement, self-assessment, and reflective practice) creates a solid foundation for certification success. Candidates come away not only with the knowledge required for the examination but also with the operational skill, analytical acuity, and strategic insight needed to work effectively in enterprise data environments.

By combining careful study, practical exploration, and analytical reflection, candidates build the expertise needed to manage complex workflows, orchestrate real-time data processing, and implement scalable, maintainable integration solutions. This preparation develops proficiency with PowerCenter, Data Engineering Integration, and Data Engineering Streaming, along with the problem-solving ability, critical thinking, and operational confidence that make candidates versatile professionals capable of contributing meaningfully to their organizations’ data integration initiatives.

Professional Value and Strategic Benefits of Certification

The Informatica Certified Specialist - Data Integration 10.x certification is a valuable credential for professionals working in data architecture, integration, and analytics. Beyond validating technical skill, it gives holders a professional advantage by demonstrating their ability to design, implement, and manage complex data integration solutions across diverse enterprise environments. Candidates who achieve it are recognized for their competence in orchestrating data pipelines, optimizing workflow efficiency, and ensuring the accuracy and integrity of the information that underpins critical business decisions.

The certification emphasizes mastery of Informatica Data Integration 10.x, including the robust capabilities of PowerCenter, the extensive scalability of Data Engineering Integration, and the immediacy offered by Data Engineering Streaming. Through rigorous examination and preparation, professionals acquire the expertise to manage both batch and real-time data workflows, integrating heterogeneous sources while maintaining consistent quality and performance. This proficiency not only enables individuals to execute operational tasks with precision but also positions them as strategic contributors capable of influencing enterprise data initiatives.

One of the most tangible advantages of achieving this credential is professional credibility. Organizations seeking to implement or enhance data integration capabilities recognize the value of certified specialists who can navigate complex workflows, design reusable mappings, and implement scalable pipelines. Certification signals to employers that the individual possesses the knowledge and practical skills necessary to meet stringent performance and reliability standards, thereby fostering trust and confidence in their technical competence. This recognition often translates into expanded responsibilities, increased opportunities for leadership, and enhanced career mobility within and across organizations.

Certification can also open concrete growth opportunities, such as promotions, higher compensation, and access to specialized projects. Professionals with this credential are often asked to lead critical initiatives, such as enterprise-wide data consolidation, real-time streaming deployments, and optimization of large-scale data processing pipelines. The practical experience gained while preparing for the certification, combined with formal training and hands-on experimentation, prepares certified specialists to contribute to projects of high strategic and operational significance, which increases their value to the organization.

Networking and community engagement are additional benefits of achieving this certification. Certified professionals gain access to an expansive network of peers, mentors, and subject matter experts within the global Informatica community. Participation in this community facilitates knowledge exchange, collaborative problem solving, and exposure to emerging trends and best practices. By interacting with other certified specialists, professionals can refine their skills, stay abreast of technological advancements, and gain insights into innovative approaches to data integration challenges. This network not only supports continuous professional development but also opens doors to potential collaborative projects and career opportunities.

Certification also reinforces practical proficiency through experiential learning and real-world application. The examination itself uses multiple-choice questions, but its scenario-based items on PowerCenter, Data Engineering Integration, and Data Engineering Streaming reward candidates who have worked through hands-on exercises and real problems during preparation. This practical focus means that certified individuals understand the theory and can also execute complex data workflows, monitor and optimize performance, and troubleshoot operational issues effectively. These capabilities transfer directly to professional contexts, equipping certified specialists to manage enterprise-level integration environments with confidence and efficiency.

The value of certification is further amplified by its influence on strategic thinking and decision-making. Professionals who possess this credential are adept at evaluating integration requirements, designing optimal solutions, and anticipating potential challenges in data workflows. They develop the ability to balance performance, reliability, and maintainability, ensuring that integration strategies align with organizational objectives and operational constraints. This capacity for strategic foresight distinguishes certified specialists as key contributors to enterprise data governance, workflow optimization, and system architecture planning.

Career advancement is also supported by the credential’s validation of specialized technical skills. As organizations rely more heavily on data-driven decision-making, demand has grown for professionals who can ensure the reliable flow and transformation of information. Certified specialists are well positioned to meet this demand, with the ability to design workflows that integrate diverse sources, implement advanced transformations, and maintain operational efficiency. Their expertise can set them apart in competitive hiring markets and improve their employability across sectors such as finance, healthcare, technology, and logistics.

Professional credibility is closely intertwined with operational competence. Certification indicates that an individual can manage critical components of data integration, including workflow orchestration, session optimization, error recovery, and performance monitoring. By demonstrating proficiency in these areas, certified specialists provide assurance to employers that they can maintain the integrity and reliability of enterprise data processes. This assurance is particularly vital in high-stakes environments where errors, delays, or inefficiencies can have substantial operational and financial repercussions.

The certification also supports the development of transferable skills that extend beyond technical expertise. Certified professionals cultivate analytical thinking, problem-solving aptitude, and strategic planning abilities that are applicable to a variety of complex business scenarios. They learn to evaluate the most effective approach for data movement, transformation, and distribution, considering both performance metrics and organizational goals. This combination of technical mastery and analytical capability equips professionals to contribute meaningfully to cross-functional projects, support data-driven initiatives, and influence the strategic utilization of information assets.

Exposure to practical challenges during preparation fosters operational resilience and adaptability. Candidates encounter scenarios that mirror enterprise-level integration demands, including high-volume data processing, multi-source orchestration, and real-time streaming implementations. These experiences cultivate confidence and proficiency, enabling professionals to handle complex workflows, troubleshoot issues efficiently, and optimize processes for sustained performance. The ability to navigate these challenges is a hallmark of certified specialists, enhancing their effectiveness in professional environments.

The professional benefits of certification are complemented by opportunities for continuous learning and knowledge expansion. Informatica tools and methodologies evolve rapidly, introducing new capabilities, optimization strategies, and integration paradigms. Certified specialists are encouraged to engage with these advancements, ensuring ongoing relevance and proficiency. Access to community resources, workshops, webinars, and peer discussions facilitates knowledge acquisition, enabling professionals to stay informed about emerging trends, innovative techniques, and evolving best practices in data integration. This engagement cultivates intellectual curiosity, reinforces practical expertise, and sustains professional growth over time.

Certification also amplifies credibility in client-facing and advisory roles. Professionals who hold this credential are positioned as trusted experts capable of guiding integration strategy, recommending best practices, and implementing solutions that meet business objectives. Their expertise is recognized not only internally within organizations but also externally, enhancing reputation and influence in consulting, project management, and collaborative initiatives. This recognition supports career diversification, enabling certified specialists to pursue roles that combine technical execution with strategic advisory responsibilities.

In addition to career and operational benefits, certification enhances the ability to contribute to enterprise-level decision-making. Certified specialists are equipped to analyze integration requirements, assess resource constraints, and propose solutions that optimize workflow performance. They can identify potential bottlenecks, implement recovery strategies, and design scalable solutions that maintain data quality and consistency. This ability to link technical execution with organizational objectives ensures that certified professionals are instrumental in aligning data integration processes with strategic goals, delivering measurable value to the enterprise.

Preparing for certification instills a disciplined, methodical approach to professional development. Candidates develop structured study habits, hands-on experimentation skills, and a habit of reflective practice that reinforces learning. These habits carry over into professional work, fostering meticulousness, accountability, and operational rigor. Certified specialists execute complex workflows, manage enterprise pipelines, and address operational challenges with confidence, analytical insight, and precision.

Certification also encourages the development of mentorship and leadership capabilities. Professionals who have successfully navigated the examination are well-positioned to guide peers, advise teams on best practices, and contribute to skill development initiatives within their organizations. By sharing expertise, mentoring colleagues, and leading integration projects, certified specialists enhance the collective competency of their teams, reinforce operational excellence, and strengthen organizational capability in managing enterprise data environments.

The versatility of certification enables professionals to operate across diverse industries and technological landscapes. As organizations increasingly rely on data-driven insights, certified specialists are sought for their ability to design, implement, and manage integration solutions that accommodate complex datasets, heterogeneous systems, and real-time processing requirements. Their expertise in orchestrating PowerCenter workflows, engineering large-scale pipelines, and implementing streaming solutions provides a foundation for contributing to diverse projects, from analytics-driven decision support systems to operational reporting frameworks.

Professional credibility is reinforced by the ability to deliver measurable outcomes. Certified specialists are capable of optimizing workflow efficiency, ensuring data quality, and mitigating operational risks, directly influencing organizational performance. Their knowledge of advanced transformations, system orchestration, and monitoring techniques enables them to implement solutions that are not only functional but also reliable, maintainable, and scalable. This operational competence, coupled with recognized certification, enhances employability, career advancement, and professional reputation.

Engagement with the global community of certified specialists fosters knowledge sharing and exposure to diverse approaches. Interaction with peers, industry experts, and thought leaders provides insight into innovative techniques, emerging trends, and best practices. This ongoing engagement supports continuous skill enhancement, professional networking, and exposure to new challenges and solutions in data integration. By participating actively in this community, certified specialists maintain relevance, enrich their expertise, and position themselves as leaders in the field.

Knowledge gained through certification applies directly to everyday professional responsibilities. Certified specialists can implement, monitor, and optimize complex workflows, manage real-time data pipelines, and execute transformations efficiently and precisely. Their proficiency helps enterprise data integration initiatives run reliably, with few errors and good performance, supporting informed decision-making and strategic business outcomes.

Achieving this certification also reinforces a mindset of lifelong learning, intellectual curiosity, and professional resilience. Candidates are trained to anticipate challenges, troubleshoot effectively, and continuously improve processes, cultivating a proactive and adaptive approach to problem solving. These qualities enhance the value of certified specialists to their organizations, ensuring that they can navigate evolving technological landscapes and contribute meaningfully to enterprise data strategy over the long term.

The strategic impact of certification is reflected in its influence on organizational decision-making, project execution, and workflow optimization. Certified specialists contribute to the design and implementation of robust integration architectures, ensuring that data flows seamlessly across systems, transformations are accurate, and real-time requirements are met. Their expertise supports business intelligence initiatives, operational analytics, and enterprise data governance, reinforcing the strategic value of their role.

Leveraging Expertise for Complex Data Integration Challenges

The Informatica Certified Specialist - Data Integration 10.x certification equips professionals with the advanced skills needed to navigate complex data integration landscapes, bridging the gap between theoretical knowledge and operational practice. Mastering the material it covers prepares individuals to design, execute, and optimize sophisticated workflows that span both batch processing and real-time data streaming. Candidates learn to orchestrate high-volume data pipelines, integrate heterogeneous sources, and maintain consistency and accuracy across diverse environments, which is essential for organizations that rely on timely, reliable information for strategic decision-making.

Practical application begins with the orchestration of PowerCenter workflows, where professionals design reusable mappings and automate transformations to facilitate efficient data movement. Understanding session configuration, partitioning strategies, and workflow dependencies enables certified specialists to optimize performance while minimizing resource utilization. They are trained to implement error handling and recovery mechanisms, ensuring resilience and continuity of operations in complex enterprise environments. These skills are critical in scenarios where even minor disruptions in data flow can have cascading effects on business intelligence, reporting, and operational processes.
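Partitioning strategy is one of the session settings that most affects performance. PowerCenter offers several partition types, including round-robin and hash-key partitioning; the plain-Python sketch below only illustrates the idea behind those two, not how the Integration Service implements them. The row layout and the `cust_id` key are hypothetical.

```python
import zlib
from itertools import cycle


def round_robin(rows, n):
    """Spread rows evenly across n partitions, ignoring their content."""
    parts = [[] for _ in range(n)]
    # cycle() repeats the partitions forever; zip() stops when rows run out.
    for part, row in zip(cycle(parts), rows):
        part.append(row)
    return parts


def hash_by_key(rows, n, key):
    """Send every row with the same key value to the same partition.

    zlib.crc32 gives a hash that is stable across runs, unlike Python's
    built-in hash(), which is randomized for strings.
    """
    parts = [[] for _ in range(n)]
    for row in rows:
        idx = zlib.crc32(str(row[key]).encode("utf-8")) % n
        parts[idx].append(row)
    return parts


rows = [
    {"cust_id": c, "amount": a}
    for c, a in [("A", 10), ("B", 5), ("A", 7), ("C", 3), ("B", 1)]
]
rr = round_robin(rows, 2)
hp = hash_by_key(rows, 2, "cust_id")
print([len(p) for p in rr])
```

Round-robin balances load when rows can be processed independently. Hash partitioning keeps all rows for a key together, which matters for transformations that group or join by key, such as an Aggregator, at the cost of possible skew when a few keys dominate.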

Data Engineering Integration extends these capabilities to larger and more intricate pipelines, emphasizing the orchestration of multi-step workflows that manage extensive datasets. Professionals are adept at applying advanced transformation logic, monitoring pipeline execution, and addressing performance bottlenecks. This knowledge allows them to consolidate disparate data sources, perform sophisticated cleansing and validation operations, and deliver information in a format that supports analytical and operational needs. The ability to handle high-volume and multi-source workflows positions certified specialists as indispensable assets for organizations undertaking large-scale integration projects.

Proficiency in Data Engineering Streaming enables professionals to manage real-time data pipelines effectively. Understanding stream ingestion, transformation, and distribution enables certified specialists to process information as it arrives, supporting rapid decision-making and operational agility. They learn to configure streaming environments, monitor latency and throughput, and implement recovery strategies that maintain data accuracy and reliability. Real-time data capabilities are particularly relevant in sectors such as finance, telecommunications, and e-commerce, where the timely availability of information can directly affect revenue, customer experience, and competitive advantage.
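Latency and throughput are the two numbers most often watched on a streaming pipeline. The minimal sketch below computes both from pairs of event and processing timestamps; it is a generic illustration, not a Data Engineering Streaming API, and the record shape (timestamps in seconds) is an assumption.

```python
from statistics import mean


def stream_metrics(events):
    """Summarize (event_time, processed_time) pairs, both in seconds.

    Latency is processed_time - event_time for each record. Throughput is
    the record count divided by the span of processing times.
    """
    if not events:
        raise ValueError("need at least one event")
    latencies = [done - created for created, done in events]
    processed = [done for _, done in events]
    span = max(processed) - min(processed)
    throughput = len(events) / span if span > 0 else float("inf")
    return {
        "count": len(events),
        "avg_latency_s": mean(latencies),
        "max_latency_s": max(latencies),
        "throughput_per_s": throughput,
    }


sample = [(0.0, 0.5), (1.0, 1.25), (2.0, 2.75), (3.0, 3.5), (4.0, 5.0)]
m = stream_metrics(sample)
print(m)
```

Tracking the maximum alongside the average matters because a few slow records can breach a latency target even when the average looks healthy.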

Hands-on experimentation is an integral component of mastering these practical skills. Professionals engage with Informatica tools, including PowerCenter Designer, Workflow Manager, Workflow Monitor, Developer Tool, and EDC, to simulate real-world scenarios. By constructing and executing complex workflows, managing dependencies, and troubleshooting operational issues, candidates internalize both the functional and strategic aspects of data integration. This immersive approach reinforces learning, builds operational confidence, and enhances the ability to implement solutions that are both robust and scalable.

Preparation also emphasizes strategic workflow optimization. Certified specialists learn to assess resource allocation, monitor execution performance, and apply optimization techniques to reduce processing time and enhance throughput. By analyzing workflow efficiency and identifying potential bottlenecks, professionals can implement solutions that maximize system performance while minimizing operational costs. These skills are critical in enterprise environments where the volume, velocity, and variety of data continue to increase, demanding solutions that are efficient, reliable, and adaptable.

Analytical and problem-solving capabilities are cultivated through scenario-based exercises that mimic enterprise integration challenges. Candidates are presented with complex data flows, diverse source systems, and real-time processing requirements, requiring them to design workflows that balance operational efficiency with data integrity. These exercises enhance the ability to anticipate potential issues, implement proactive mitigation strategies, and ensure seamless execution of data integration tasks. By continuously refining these skills, certified specialists develop a holistic understanding of the interactions between systems, workflows, and data transformations, enabling them to handle the multifaceted challenges of enterprise-level projects.

Integration projects often involve collaboration with multiple teams, including data analysts, system architects, business stakeholders, and operational managers. Certified specialists are trained to communicate effectively, translating technical considerations into actionable insights that align with organizational objectives. Their expertise allows them to guide teams in implementing best practices, optimizing workflow configurations, and ensuring that integration solutions meet both performance and compliance standards. This ability to bridge technical execution with strategic objectives enhances the impact of certified professionals within their organizations.

Advanced practical skills extend to monitoring and error management. Professionals learn to configure alerts, review execution logs, and implement recovery strategies that limit the impact of failures on workflow continuity. They develop the capacity to diagnose complex issues, optimize performance in real time, and maintain system stability under high-demand conditions. These operational competencies are critical for ensuring the reliability of enterprise data pipelines, particularly in environments where data is a strategic asset and timely access is essential.
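Recovery strategies usually rest on checkpointing: record how far a load has safely committed so that a restart resumes there instead of reprocessing everything. PowerCenter provides its own session and workflow recovery settings; the Python sketch below only illustrates the general checkpoint-and-resume idea, and the checkpoint file, batch size, and simulated failure are hypothetical.

```python
import json
import os
import tempfile


def load_checkpoint(path):
    """Return the index of the next record to process (0 if none saved)."""
    if not os.path.exists(path):
        return 0
    with open(path) as f:
        return json.load(f)["next_index"]


def save_checkpoint(path, next_index):
    """Write the checkpoint atomically so a crash never leaves half a file."""
    fd, tmp = tempfile.mkstemp(dir=os.path.dirname(path) or ".")
    with os.fdopen(fd, "w") as f:
        json.dump({"next_index": next_index}, f)
    os.replace(tmp, path)


def run(records, sink, path, commit_every=2, fail_at=None):
    """Load records in batches, saving a checkpoint after each committed batch.

    fail_at simulates a crash just before the given record index. A real
    target would commit the batch and the checkpoint in one transaction.
    """
    i = load_checkpoint(path)
    buffer = []
    while i < len(records):
        if i == fail_at:
            raise RuntimeError(f"simulated failure at record {i}")
        buffer.append(records[i])
        i += 1
        if len(buffer) == commit_every or i == len(records):
            sink.extend(buffer)       # commit the batch to the target
            save_checkpoint(path, i)  # then record how far we got
            buffer.clear()


workdir = tempfile.mkdtemp()
ckpt = os.path.join(workdir, "ckpt.json")
records = list("abcde")
target = []
try:
    run(records, target, ckpt, commit_every=2, fail_at=3)
except RuntimeError:
    pass
after_crash = list(target)              # only the committed batch survives
run(records, target, ckpt, commit_every=2)  # resumes from the checkpoint
print(after_crash, target)
```

After the simulated crash only the first committed batch is in the target; the restart picks up at the checkpoint, so no record is loaded twice or skipped.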

The application of certification knowledge also involves implementing governance and quality assurance measures. Certified specialists understand the importance of metadata management, lineage tracking, and auditability in maintaining data integrity. They are capable of designing workflows that adhere to organizational policies, regulatory requirements, and industry best practices. By integrating these considerations into practical implementation, professionals contribute to the creation of reliable, compliant, and high-quality data pipelines that support informed decision-making and operational excellence.
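Lineage tracking answers questions such as "which sources feed this warehouse table?" Catalog tools such as EDC maintain lineage for you; the sketch below only shows the underlying idea as a breadth-first walk over a dependency map. The object names and the map itself are hypothetical.

```python
from collections import deque


def upstream(lineage, target):
    """Return every object that feeds `target`, directly or indirectly.

    lineage maps each object to the list of objects it reads from.
    The `seen` set prevents infinite loops if the map contains a cycle.
    """
    seen = set()
    queue = deque(lineage.get(target, []))
    while queue:
        node = queue.popleft()
        if node not in seen:
            seen.add(node)
            queue.extend(lineage.get(node, []))
    return seen


lineage = {
    "DW.FACT_SALES": ["STG.ORDERS", "STG.CUSTOMERS"],
    "STG.ORDERS": ["SRC.ORDERS_CSV"],
    "STG.CUSTOMERS": ["CRM.CUSTOMER"],
}
print(sorted(upstream(lineage, "DW.FACT_SALES")))
# ['CRM.CUSTOMER', 'SRC.ORDERS_CSV', 'STG.CUSTOMERS', 'STG.ORDERS']
```

Running the same walk over the reversed map gives downstream impact analysis: which tables and reports would be affected if a source changed.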

Preparation for the certification encourages continuous experimentation and reflection. Professionals engage in iterative testing of workflows, evaluating performance metrics, and refining transformation logic to achieve optimal results. This process fosters a mindset of continuous improvement, encouraging individuals to explore innovative approaches, adopt emerging methodologies, and enhance the efficiency and scalability of their solutions. The iterative nature of practice ensures that candidates are not only adept at executing predefined workflows but can also adapt to evolving requirements and dynamic enterprise environments.

In addition to technical proficiency, the certification cultivates strategic thinking. Certified specialists learn to evaluate trade-offs between performance, complexity, and maintainability, ensuring that solutions align with organizational priorities. They develop the ability to anticipate potential risks, design resilient workflows, and implement scalable integration architectures. This combination of technical and strategic skills enables professionals to contribute meaningfully to enterprise data initiatives, supporting long-term operational goals and enhancing organizational capacity to leverage information assets effectively.

Collaboration and knowledge sharing are reinforced through engagement with peers and mentors. Many specialists take part in forums, workshops, and community discussions, exchanging insights on workflow optimization, real-time processing strategies, and advanced transformation techniques. These interactions expose them to different problem-solving approaches, emerging tools, and industry best practices. Learning from others' experience improves their ability to design effective, efficient, and adaptable integration solutions.

The practical value of certification knowledge is most evident in high-stakes projects that demand reliability, scalability, and performance. Professionals design complex ETL workflows, manage streaming data pipelines, and integrate disparate sources in environments with stringent operational and regulatory requirements. Their ability to optimize execution, troubleshoot failures, and maintain data integrity keeps enterprise systems running smoothly in support of analytics, reporting, and operational decisions. This real-world applicability is what distinguishes certified specialists as competent, versatile contributors.

Advanced training also emphasizes using metadata and performance analysis to inform workflow design. Certified professionals draw on system logs, execution metrics, and historical performance data to refine transformations, improve workflow scheduling, and make better use of resources. This analytical approach ensures that integration processes are efficient and reliable, not merely functional. The ability to turn operational data into concrete optimization steps is a large part of what makes certified specialists valuable in enterprise environments.
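One simple way to use historical performance data is to compare each new run against a baseline. The following minimal sketch assumes run durations (in seconds) have already been extracted from logs or monitoring output, and flags runs that take longer than a chosen multiple of the historical median. The function name, workflow names, durations, and the 1.5x tolerance are all illustrative assumptions.

```python
from statistics import median


def flag_slow_runs(
    history_s: list[float],
    recent_s: dict[str, float],
    tolerance: float = 1.5,
) -> list[str]:
    """Return names of recent runs slower than `tolerance` x the historical median.

    Hypothetical helper; durations are assumed to be in seconds.
    """
    if not history_s:
        raise ValueError("need at least one historical duration")
    baseline = median(history_s)  # median resists a few outlier runs
    return sorted(name for name, secs in recent_s.items() if secs > tolerance * baseline)


# Illustrative data: past durations of one workflow and three recent runs.
history = [120.0, 130.0, 125.0, 118.0, 140.0]  # median = 125.0
recent = {"wf_orders_mon": 128.0, "wf_orders_tue": 210.0, "wf_orders_wed": 190.0}

flagged = flag_slow_runs(history, recent)
print(flagged)  # ['wf_orders_tue', 'wf_orders_wed'] (threshold 187.5 s)
```

The median is used instead of the mean so that one unusually slow historical run does not inflate the baseline and hide later regressions.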

The certification also reinforces adaptability as technology changes. As Informatica tools advance, professionals are encouraged to explore new features, adopt emerging methodologies, and apply current best practices in workflow design and execution. Keeping pace with new capabilities keeps certified specialists relevant and able to address new challenges with innovative solutions.

Putting these skills to work includes managing batch-oriented and streaming workflows side by side. Certified specialists know how to allocate resources, configure execution environments, and monitor performance so that both processing paradigms run efficiently. This dual competency lets enterprise data operations meet both scheduled and real-time demands with flexibility, scalability, and continuity.

Expertise also extends to troubleshooting complex scenarios such as data inconsistencies, performance degradation, and unexpected workflow failures. Certified specialists are trained to identify root causes, apply corrective measures, and adjust execution to prevent recurrence. This problem-solving ability makes integration pipelines more resilient and helps them sustain performance under varying conditions.

Professional growth is supported by the ability to apply these skills to cross-functional projects, including analytics, business intelligence, and operational reporting. Certified specialists ensure that data flows smoothly, is transformed accurately, and is delivered in formats that support decision-making across departments, strengthening both the organization's efficiency and its data-driven practices.

Certification preparation balances technical proficiency, strategic thinking, and operational awareness. Professionals learn to design, implement, and optimize workflows that are robust, scalable, and maintainable, and to anticipate challenges, analyze system performance, and apply corrective strategies as part of ongoing improvement.

Preparation also emphasizes alignment with organizational standards and compliance frameworks. Certified specialists understand how to align workflow design, data transformations, and operational procedures with regulatory requirements and internal governance policies. As a result, enterprise data pipelines meet performance and reliability objectives while also adhering to legal and ethical standards, supporting accountability and risk mitigation.

Ultimately, the practical and strategic skills acquired through the Informatica Certified Specialist - Data Integration 10.x certification enable professionals to contribute meaningfully to enterprise-level initiatives, improve operational efficiency, and use data effectively as a strategic resource. Their proficiency spans workflow orchestration, transformation logic, real-time data streaming, error handling, performance optimization, and compliance, forming a solid foundation for managing complex data integration environments.

Continuous Growth, Industry Relevance, and Strategic Application

Achieving the Informatica Certified Specialist - Data Integration 10.x credential marks a significant milestone in the career of a data integration professional, signifying mastery of intricate workflows, advanced transformations, and real-time data orchestration. Beyond the immediate recognition, the certification supports continued growth, giving professionals the adaptability and practical expertise needed in changing enterprise environments. Those who earn it are prepared to manage heterogeneous data sources, optimize complex pipelines, and ensure the reliability and accuracy of the information that drives strategic decisions.

Preparation requires a thorough understanding of PowerCenter, Data Engineering Integration, and Data Engineering Streaming. Candidates learn to design reusable mappings, automate transformations, and orchestrate workflows across diverse operational environments. This proficiency is not merely theoretical: it comes from combining hands-on practice with scenario-based exercises that let candidates internalize best practices, troubleshoot complex problems, and refine execution strategies. Repeated work on realistic simulations builds both operational confidence and strategic insight, making candidates valuable contributors to enterprise data initiatives.

The practical skill set cultivated through this certification enables professionals to balance batch and streaming workflows efficiently. Certified specialists are adept at resource allocation, session management, and workflow orchestration, keeping performance high and latency low across processing environments. They understand why error handling, recovery strategies, and monitoring matter for keeping critical pipelines accurate and reliable. These capabilities are most valuable in high-volume, real-time contexts, where any delay or inconsistency in data flow can have significant downstream consequences.

Strategic application extends to workflow optimization and performance analysis. Certified specialists are trained to evaluate execution logs, analyze throughput, and apply configurations that improve resource utilization and processing efficiency. Using these insights, they can redesign transformations, adjust schedules, and tune pipelines for both scalability and maintainability. Combining operational monitoring with strategic planning lets them anticipate problems and implement solutions that minimize disruption while improving efficiency.
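Throughput is commonly measured as rows processed per second of elapsed time. The sketch below computes it for a few sessions and reports the one with the lowest rate as a place to start investigating. The `SessionRun` type, session names, and figures are hypothetical; real values would come from session logs or monitoring tools, whose exact format is not assumed here.

```python
from dataclasses import dataclass


@dataclass
class SessionRun:
    name: str           # hypothetical session name
    rows_written: int   # rows loaded to the target
    elapsed_s: float    # wall-clock run time in seconds

    @property
    def throughput(self) -> float:
        """Rows per second; 0.0 if no elapsed time was recorded."""
        return self.rows_written / self.elapsed_s if self.elapsed_s > 0 else 0.0


def slowest(runs: list[SessionRun]) -> SessionRun:
    """Return the run with the lowest throughput."""
    return min(runs, key=lambda r: r.throughput)


runs = [
    SessionRun("s_load_customers", rows_written=1_200_000, elapsed_s=300.0),  # 4,000 rows/s
    SessionRun("s_load_orders", rows_written=900_000, elapsed_s=450.0),       # 2,000 rows/s
    SessionRun("s_load_products", rows_written=50_000, elapsed_s=10.0),       # 5,000 rows/s
]

for r in runs:
    print(f"{r.name}: {r.throughput:,.0f} rows/s")
print("lowest throughput:", slowest(runs).name)  # s_load_orders
```

Throughput alone does not identify a cause: a slow source query, a large lookup cache, or contention on the target could all produce the same number, so the result is a prompt to inspect the session log rather than a diagnosis.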

Beyond technical execution, the certification emphasizes governance, compliance, and quality assurance. Certified specialists develop expertise in managing metadata, tracking data lineage, and ensuring adherence to organizational policies and regulatory requirements. This focus on governance helps ensure that workflows are not only efficient but also reliable and auditable, supporting organizational accountability and reducing operational risk. By embedding these practices into routine operations, professionals contribute to sustainable, high-quality data management that underpins informed decision-making.

Collaboration is central to realizing the certification's benefits. Certified specialists work with cross-functional teams, including data analysts, architects, business stakeholders, and operations managers, to build integration solutions aligned with organizational objectives. They explain technical considerations clearly, translating complex processes into insights that stakeholders can act on. Acting as liaisons between technical and business perspectives, they improve decision-making and help ensure that data integration initiatives deliver measurable value.

The certification can also open avenues for career advancement and strategic influence. Certified professionals are often recognized as experts capable of leading critical projects, implementing enterprise-wide solutions, and contributing to long-term data strategy, which can improve their prospects for promotion, specialized assignments, and leadership roles. The ability to execute complex integrations, optimize performance, and ensure data quality makes them key contributors to decision-making within their organizations.

Continuous professional growth is reinforced by engagement with the Informatica community. Certified specialists can take part in forums, workshops, and discussions to exchange insights, explore new techniques, and follow emerging trends in data integration. This exposure to new problem-solving approaches keeps their skills current and their thinking fresh. Staying connected with peers and experts helps professionals adapt to evolving technologies and apply advanced methods to remain competitive.

Practical experience is further enriched by scenario-based projects that simulate enterprise complexity. Professionals design workflows that integrate multiple data sources, apply advanced transformations, and orchestrate both batch and streaming operations. These exercises build analytical thinking, problem-solving ability, and operational skill. By iteratively testing, refining, and optimizing solutions, certified specialists learn to handle real-world challenges precisely and efficiently, and the knowledge they gain carries over directly to professional settings.

Certified professionals are also equipped for high-stakes environments that demand operational resilience. They can diagnose performance bottlenecks, carry out recovery procedures, and maintain service continuity in critical data pipelines, so that data remains accurate, accessible, and timely even under heavy load or unexpected disruptions. Organizations benefit from the stability these specialists provide in protecting enterprise data integrity and operational efficiency.

Analytical skills cultivated through certification preparation extend to workflow evaluation, resource optimization, and strategic planning. Certified specialists assess the implications of design choices, balance performance against maintainability, and implement solutions aligned with organizational goals. This strategic mindset helps them contribute to long-term enterprise planning, producing integration solutions that are scalable, resilient, and adaptable to changing business requirements.

Certification also creates opportunities for mentorship and leadership. Certified specialists can guide peers, advise teams on best practices, and support knowledge-transfer initiatives. By sharing expertise in workflow orchestration, performance optimization, and real-time processing, they raise the competency of their teams and strengthen organizational capability. Their leadership reinforces operational standards and helps ensure that best practices are applied consistently across enterprise initiatives.

The certification also encourages curiosity and a commitment to continuous learning. As Informatica tools evolve, certified specialists are encouraged to explore new features, adopt new methodologies, and keep up with industry developments. This commitment keeps their skills relevant as technology advances and lets them keep contributing effectively to organizational objectives. Working with new tools and approaches also sharpens creativity and foresight, preparing specialists to lead in changing enterprise environments.

Certification knowledge applies across diverse business domains. Certified specialists manage workflows that support analytics, operational reporting, and decision-support systems, ensuring that data is processed, transformed, and delivered efficiently. They are adept at handling heterogeneous sources, orchestrating complex transformations, and maintaining workflow continuity. These capabilities let organizations use information as a strategic asset, supporting timely decisions and strengthening their competitive position.

Advanced problem-solving is reinforced through exposure to real-world challenges, including high-volume data processing, error management, and performance optimization. Certified specialists learn to anticipate potential issues, take proactive measures, and maintain operational stability. This expertise keeps enterprise data pipelines robust and responsive, minimizing disruptions and supporting continuous business operations.