Certification: SAS Certified Data Integration Developer for SAS 9

Certification Full Name: SAS Certified Data Integration Developer for SAS 9

Certification Provider: SAS Institute

Exam Code: A00-260

Exam Name: SAS Data Integration Development for SAS 9

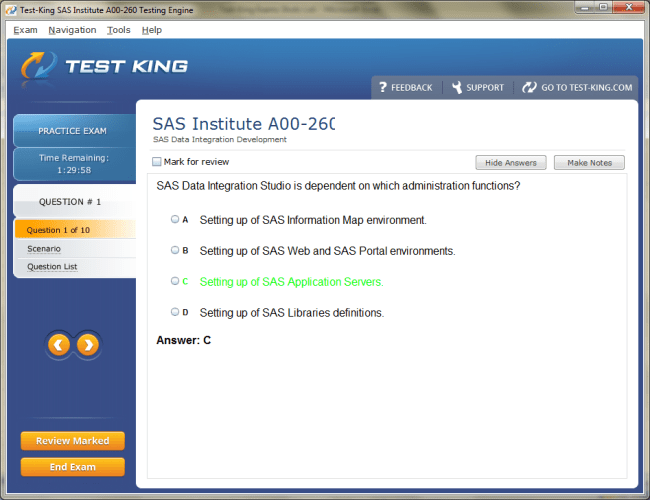

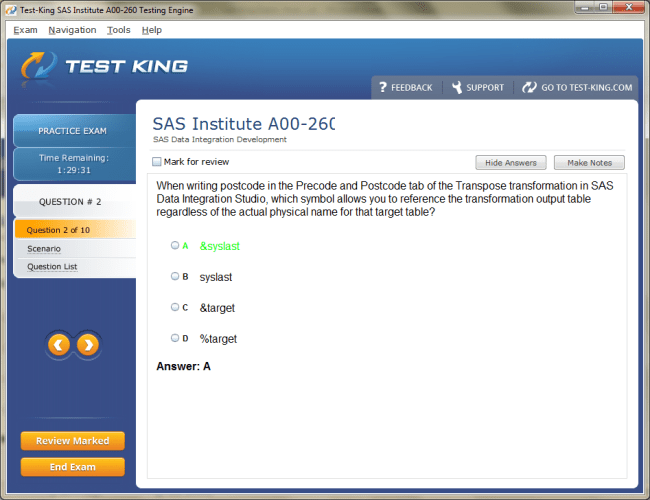

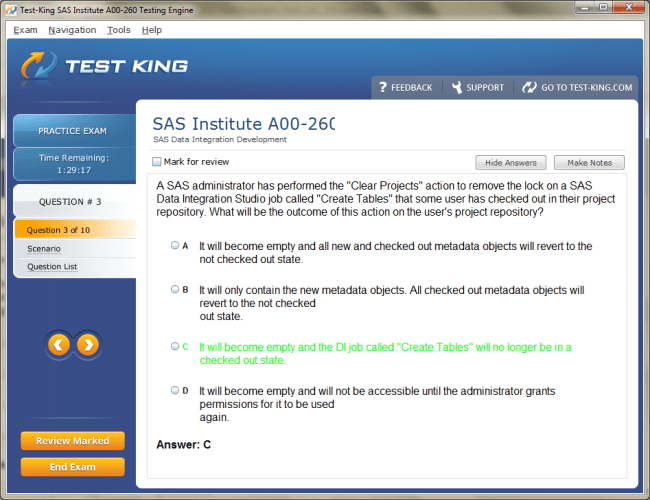

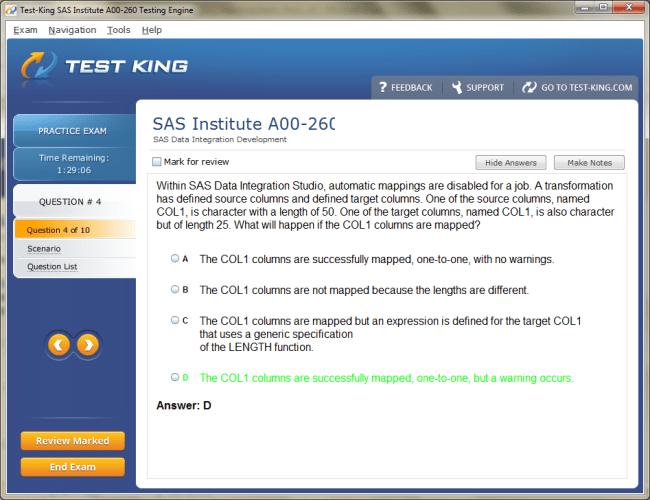

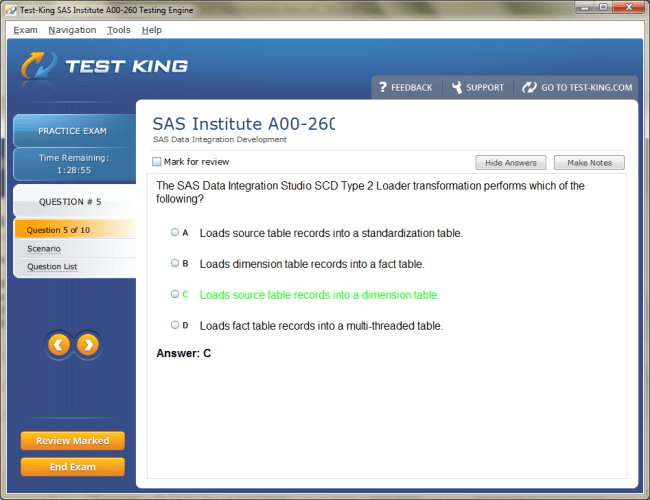

A00-260 Exam Product Screenshots

Top Skills You’ll Gain from the SAS Certified Data Integration Developer for SAS 9 Certification

In the contemporary realm of data management, the ability to consolidate, transform, and optimize information from multiple sources is more than a technical skill; it is a strategic capability that shapes organizational intelligence and decision-making. The SAS Certified Data Integration Developer for SAS 9 certification embodies this principle, giving professionals a thorough understanding of data integration methods along with the analytical rigor and practical expertise to apply them. Individuals who pursue this credential are not merely learning a software suite; they are cultivating a multifaceted skill set that positions them as indispensable architects of data ecosystems.

Understanding the SAS Certified Data Integration Developer for SAS 9

The certification immerses aspirants in the architecture and operational paradigms of SAS 9, encouraging a nuanced understanding of how data flows across heterogeneous systems. It emphasizes the orchestration of extract, transform, and load processes, underscoring the importance of efficiency, accuracy, and scalability. Candidates develop an ability to navigate complex data landscapes, harmonizing disparate data sources into coherent, reliable, and actionable formats. This is accomplished through a combination of conceptual mastery and hands-on practice, enabling learners to implement integration strategies that are robust, repeatable, and adaptable to diverse organizational environments.

A distinctive feature of this certification is the emphasis on meticulous attention to detail. Professionals learn to scrutinize data pipelines with precision, identifying subtle inconsistencies, anomalies, or redundancies that could compromise downstream analysis. Through this process, they cultivate a mindset of analytical vigilance, understanding that even minor deviations in data quality can have cascading effects on decision-making processes. This fine-grained scrutiny reinforces the importance of data governance, ensuring that information remains accurate, consistent, and reliable throughout its lifecycle.

The learning experience also fosters ingenuity in problem-solving and workflow optimization. SAS Certified Data Integration Developers are trained to anticipate potential bottlenecks, troubleshoot operational inefficiencies, and implement solutions that enhance throughput without sacrificing integrity. They gain the capacity to design processes that balance computational performance with maintainability, recognizing that sustainable integration strategies require both technical acumen and strategic foresight. This skill set extends beyond mere procedural knowledge; it cultivates the ability to think critically about how data flows influence business outcomes and to propose enhancements that align with organizational objectives.

Moreover, the certification equips professionals with an understanding of the broader ecosystem in which SAS operates. Modern enterprises increasingly rely on a multitude of databases, flat files, and multidimensional repositories. The ability to navigate these heterogeneous systems, to extract meaningful information, and to harmonize it across platforms is a distinguishing characteristic of a certified developer. This versatility is further enhanced by exposure to advanced transformation techniques, enabling practitioners to restructure, aggregate, or cleanse data according to sophisticated rules that preserve semantic integrity and analytical value.

Data quality assurance is another essential skill honed through this certification. Candidates learn to implement validation protocols, error-handling mechanisms, and consistency checks that safeguard the reliability of integrated datasets. This involves both proactive design of robust pipelines and reactive troubleshooting of unforeseen discrepancies. By mastering these techniques, professionals contribute directly to organizational confidence in analytical outputs, ensuring that decision-makers can rely on timely, accurate, and comprehensive information.

The training also emphasizes efficiency in execution. Through the creation of reusable data flows and automated processes, professionals reduce redundancy and accelerate project timelines. They acquire an appreciation for modularity and reusability in design, understanding that the long-term success of integration projects depends not only on immediate results but also on maintainable structures that can accommodate evolving requirements. This perspective nurtures foresight, as practitioners anticipate future expansions or modifications to data systems and plan processes that are both flexible and resilient.

Throughout the certification journey, professionals are exposed to a wide array of scenarios that mirror real-world challenges. They explore techniques for consolidating data from varied formats, reconciling conflicting records, and maintaining lineage and traceability. They engage with complex transformations that demand both logical reasoning and technical dexterity. These experiences cultivate confidence and autonomy, preparing candidates to take on responsibilities that require both operational expertise and strategic judgment. The skill set extends beyond coding or tool-specific knowledge; it encompasses the art of orchestrating data flows in a manner that is precise, coherent, and aligned with organizational goals.

Strategic thinking is further reinforced through the integration of planning, monitoring, and evaluation practices. Candidates learn to design processes that anticipate potential disruptions, optimize resource usage, and balance workload across systems. This includes evaluating dependencies, prioritizing critical paths, and ensuring that data movement aligns with overarching business timelines. By developing such competencies, certified professionals are able to bridge the gap between technical execution and strategic imperatives, translating complex workflows into tangible business value.

Another noteworthy aspect of this certification is its cultivation of adaptability and continuous learning. The data landscape is dynamic, with evolving technologies, emerging standards, and shifting organizational needs. SAS Certified Data Integration Developers acquire the ability to assimilate new methodologies, adapt to diverse environments, and apply established principles to novel contexts. This agility not only enhances individual capability but also contributes to organizational resilience, as professionals can respond effectively to change while maintaining the integrity of data systems.

In addition to technical proficiency, the certification emphasizes collaboration and communication skills. Professionals are often required to work with cross-functional teams, interpret requirements from stakeholders, and articulate complex concepts in accessible terms. This holistic approach ensures that certified developers are not only adept at manipulating data but also capable of conveying insights, facilitating informed decisions, and guiding organizational strategy through sound analysis.

The certification experience also nurtures an appreciation for the ethical and regulatory dimensions of data integration. Professionals learn to implement processes that respect privacy, adhere to compliance standards, and maintain transparency in data handling. This awareness reinforces the importance of responsible data stewardship, ensuring that the powerful capabilities conferred by the certification are exercised with integrity and conscientiousness.

Ultimately, the SAS Certified Data Integration Developer for SAS 9 certification empowers individuals to transcend the role of a mere technician, cultivating expertise that encompasses architecture, analysis, strategy, and governance. Professionals emerge equipped with an arsenal of competencies, including meticulous workflow design, advanced transformation techniques, data quality assurance, process optimization, cross-platform integration, problem-solving acumen, and strategic foresight. This breadth of skill not only enhances career prospects but also enables certified developers to contribute substantively to organizational intelligence, operational efficiency, and sustainable growth.

Mastering Data Integration and Transformation

In the ever-evolving realm of data management, the ability to assimilate, transform, and orchestrate information from multifarious sources is an indispensable skill for contemporary professionals. The SAS Certified Data Integration Developer for SAS 9 credential provides an immersive experience into the nuances of data integration and transformation, equipping individuals with both strategic foresight and operational dexterity. Through rigorous engagement with SAS tools, aspirants develop the ability to manipulate voluminous datasets, reconcile conflicting records, and construct coherent pipelines that preserve the fidelity of information across organizational landscapes.

At the core of data integration lies the meticulous consolidation of information from heterogeneous sources. Professionals learn to harmonize relational databases, flat files, and multidimensional repositories into coherent structures that are both analyzable and actionable. This entails understanding the idiosyncrasies of each data source, identifying redundancies, resolving inconsistencies, and ensuring that all transformations uphold semantic integrity. The certification encourages the development of an analytical mindset that balances precision with efficiency, fostering an awareness that even subtle discrepancies in data can have cascading effects on business intelligence and decision-making.

Transformation skills, another central pillar of this certification, extend beyond simple restructuring or formatting of data. Candidates acquire the capacity to apply complex business rules, perform aggregations, and execute conditional operations that refine and enrich raw datasets. This involves leveraging SAS 9 tools to implement transformations that are optimized for performance, reproducibility, and maintainability. Professionals are trained to anticipate the downstream implications of each modification, ensuring that changes contribute to analytical clarity and do not introduce unintended distortions.

A sophisticated understanding of extract, transform, and load processes is cultivated through immersive, practical exercises. Professionals learn to extract data efficiently from diverse sources, implement robust transformation logic, and load information into target repositories without compromising accuracy or timeliness. The certification emphasizes the orchestration of these processes in a manner that is both scalable and adaptable, preparing candidates to manage integration workflows in dynamic, enterprise-scale environments. They develop the ability to design pipelines that are resilient, traceable, and capable of handling incremental or batch updates with minimal supervision.
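To make the three stages concrete, here is a minimal sketch in plain Python using only the standard library (`csv` and `sqlite3`) rather than SAS. The source data, the `orders` table, and the functions `extract`, `transform`, and `load` are hypothetical illustrations, not part of the SAS 9 toolset. Each stage is a separate function, and the load runs inside a single transaction so that a failure leaves the target unchanged.

```python
import csv
import io
import sqlite3

# Hypothetical source extract; in practice this would be a file or a database table.
SOURCE_CSV = """order_id,amount,currency
1,10.50,usd
2,,usd
3,7.25,EUR
"""

def extract(text: str) -> list[dict]:
    """Extract: parse CSV text into a list of dict rows."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows: list[dict]) -> list[tuple]:
    """Transform: skip rows without an amount, cast types, normalize currency codes."""
    out = []
    for r in rows:
        if not r["amount"]:
            continue
        out.append((int(r["order_id"]), float(r["amount"]), r["currency"].upper()))
    return out

def load(conn: sqlite3.Connection, rows: list[tuple]) -> int:
    """Load: insert into the target table in one transaction; return the row count."""
    with conn:  # commits on success, rolls back if any statement raises
        conn.execute(
            "CREATE TABLE IF NOT EXISTS orders "
            "(order_id INTEGER PRIMARY KEY, amount REAL, currency TEXT)"
        )
        conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    return len(rows)

conn = sqlite3.connect(":memory:")
loaded = load(conn, transform(extract(SOURCE_CSV)))
print(loaded)  # 2
print(conn.execute("SELECT * FROM orders ORDER BY order_id").fetchall())
# [(1, 10.5, 'USD'), (3, 7.25, 'EUR')]
```

Keeping the stages as separate functions means each one can be tested, rerun, or swapped independently.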

Data lineage and traceability are also emphasized as crucial competencies. Professionals are trained to maintain comprehensive documentation and metadata, enabling transparent tracking of data as it traverses multiple systems. This fosters accountability and facilitates error detection, auditing, and compliance with organizational or regulatory standards. The meticulous attention to lineage reinforces the principle that data integration is not a purely mechanical endeavor but an intricate exercise in governance, precision, and foresight.
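Lineage tracking can be illustrated with a small, hypothetical in-memory log in Python. Each step records its source, its target, and its row counts, and `trace` walks backwards from a target table to its origins. The `LineageLog` class and the table names are invented for illustration; a production tool would typically keep this information in a metadata repository rather than a Python list.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

@dataclass
class LineageLog:
    """Hypothetical in-memory lineage store: one entry per executed step."""
    entries: list = field(default_factory=list)

    def record(self, step: str, source: str, target: str, rows_in: int, rows_out: int) -> None:
        """Append source-to-target metadata, including row counts for auditing."""
        self.entries.append({
            "step": step, "source": source, "target": target,
            "rows_in": rows_in, "rows_out": rows_out,
            "at": datetime.now(timezone.utc).isoformat(),
        })

    def trace(self, target: str) -> list:
        """Walk backwards from a target to its upstream sources, nearest first."""
        chain, seen, current = [], {target}, target
        while True:
            hit = next((e for e in reversed(self.entries) if e["target"] == current), None)
            if hit is None or hit["source"] in seen:  # reached an origin (or a cycle)
                return chain
            chain.append(hit["source"])
            seen.add(hit["source"])
            current = hit["source"]

log = LineageLog()
log.record("stage", "crm.customers", "stg_customers", 120, 120)
log.record("dedupe", "stg_customers", "dim_customer", 120, 117)
print(log.trace("dim_customer"))  # ['stg_customers', 'crm.customers']
```

The row counts make it visible where records were dropped (here, three rows during deduplication), which supports the auditing described above.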

Another dimension of skill development involves error handling and anomaly detection. Certified developers learn to anticipate potential disruptions in data pipelines, implement preemptive checks, and develop contingency measures that preserve workflow continuity. This encompasses identifying missing or inconsistent records, rectifying schema mismatches, and ensuring that transformations do not compromise analytical validity. Such competencies cultivate a proactive mindset, transforming professionals into vigilant custodians of data who safeguard both operational integrity and analytical accuracy.
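A common pattern for this kind of error handling is to route failing records to a reject set, tagged with the reasons they failed, so that a single bad record does not halt the whole load. The Python sketch below assumes hypothetical rules (a required `id`, a non-negative `qty`, and an allowed set of units); real rules would come from the business requirements.

```python
from typing import Callable, Optional

# Each hypothetical rule returns an error message, or None when the row passes.
Rule = Callable[[dict], Optional[str]]

RULES: list[Rule] = [
    lambda r: "missing id" if not r.get("id") else None,
    lambda r: "negative qty" if r.get("qty", 0) < 0 else None,
    lambda r: "unknown unit" if r.get("unit") not in {"EA", "KG"} else None,
]

def split_rejects(rows: list[dict], rules: list[Rule]) -> tuple[list[dict], list[dict]]:
    """Route failing rows to a reject list with their reasons instead of aborting the load."""
    good, rejects = [], []
    for row in rows:
        errors = [msg for rule in rules if (msg := rule(row)) is not None]
        if errors:
            rejects.append({**row, "errors": errors})
        else:
            good.append(row)
    return good, rejects

rows = [
    {"id": "A1", "qty": 3, "unit": "EA"},
    {"id": "", "qty": -1, "unit": "EA"},
    {"id": "A3", "qty": 2, "unit": "LB"},
]
good, rejects = split_rejects(rows, RULES)
print(len(good), [r["errors"] for r in rejects])
# 1 [['missing id', 'negative qty'], ['unknown unit']]
```

Collecting every failed rule per row, rather than stopping at the first, gives whoever fixes the source data the full picture in one pass.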

Efficiency and optimization are recurrent themes throughout the certification. Professionals acquire methods for streamlining data flows, eliminating redundancy, and enhancing computational performance. They explore modular design approaches that enable reusable components, fostering maintainability and adaptability. By applying these principles, certified developers create integration architectures that are not only effective in the present but also resilient to future expansions, evolving data structures, or technological changes.

The certification also instills versatility, encouraging professionals to apply integration and transformation techniques across diverse industries and use cases. Candidates gain exposure to scenarios ranging from financial data consolidation to healthcare records aggregation, understanding how foundational principles adapt to varied contexts. This breadth of experience nurtures an ability to approach new challenges with confidence, applying both technical knowledge and critical thinking to produce robust, insightful results.

Collaboration and communication skills are subtly reinforced in the process. Data integration is rarely a solitary endeavor; professionals often coordinate with database administrators, analysts, and business stakeholders. The certification promotes the ability to convey complex integration workflows in comprehensible terms, aligning technical implementations with strategic objectives. This capacity ensures that data pipelines are not only operationally sound but also contextually relevant, serving the informational needs of diverse stakeholders effectively.

Furthermore, candidates develop a nuanced appreciation for data quality, validation, and cleansing. They learn to identify and rectify anomalies, apply normalization techniques, and enforce consistency across datasets. These practices safeguard analytical reliability, allowing organizations to make decisions based on accurate, coherent, and trustworthy information. By mastering these capabilities, certified developers cultivate a reputation for precision, accountability, and technical excellence.

The hands-on nature of the certification also encourages ingenuity and adaptive thinking. Professionals explore advanced transformation techniques, experiment with optimization strategies, and confront complex integration challenges that mirror real-world scenarios. This experiential learning reinforces the concept that data integration is both an art and a science, requiring analytical rigor, creativity, and strategic judgment in equal measure. Candidates emerge capable of designing bespoke solutions that meet unique organizational needs while adhering to best practices in efficiency and reliability.

Through engagement with this curriculum, professionals also acquire skills in monitoring and auditing integration workflows. They learn to track performance metrics, identify bottlenecks, and implement corrective measures that ensure smooth operation over time. This aspect of training emphasizes the dynamic nature of data management, highlighting the importance of vigilance, adaptability, and continuous improvement. By mastering these practices, certified developers contribute directly to the operational resilience and strategic agility of their organizations.

The certification further deepens expertise in managing complex transformations, including conditional logic, multi-source aggregations, and hierarchical data structuring. Professionals develop the ability to construct workflows that accommodate nested relationships, evolving data schemas, and intricate interdependencies. These competencies are particularly valuable in large-scale enterprises, where integration processes must reconcile numerous sources while maintaining analytical coherence.

Finally, the SAS Certified Data Integration Developer for SAS 9 credential cultivates an ethos of lifelong learning and professional growth. As data ecosystems evolve, so too must the skills of the practitioner. Candidates are prepared to assimilate new tools, methodologies, and standards, ensuring that their capabilities remain relevant and impactful. This combination of technical mastery, strategic insight, and adaptive intelligence defines the transformative value of the certification, positioning individuals as proficient, versatile, and forward-thinking architects of data integration and transformation.

Mastering Data Management and Ensuring Quality

In contemporary organizations, the caliber of data management often delineates the boundary between operational mediocrity and strategic excellence. The SAS Certified Data Integration Developer for SAS 9 credential equips professionals with advanced expertise in managing complex datasets while ensuring the utmost quality, consistency, and reliability of information. This certification imparts a deep understanding of both the structural and procedural aspects of data, enabling practitioners to implement systems that are robust, traceable, and resilient in the face of ever-evolving organizational demands.

Central to this training is the cultivation of meticulous data governance practices. Professionals are taught to establish frameworks that maintain integrity across the entire data lifecycle, from ingestion to transformation, storage, and eventual analysis. They develop the ability to monitor datasets for anomalies, detect inconsistencies, and implement proactive corrections that prevent erroneous information from propagating through analytical systems. The rigorous focus on data stewardship ensures that organizations can rely on information that is both accurate and contextually coherent, allowing strategic decisions to be made with confidence.

Data cleansing emerges as a pivotal skill within this context. Candidates learn to identify and rectify inaccuracies, standardize formats, reconcile duplicate entries, and harmonize conflicting records. This process extends beyond superficial adjustments; it involves the application of logical rules, validation protocols, and sophisticated transformations that preserve the semantic essence of information while eliminating distortions. Through these practices, professionals cultivate a discerning eye for precision, understanding that the efficacy of analytical models is inextricably tied to the quality of the underlying data.
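The sketch below shows two of these cleansing steps in Python: standardizing formats so that equivalent values compare equal, then removing duplicates on the standardized key. The field names and the keep-the-first-record survivorship rule are assumptions for illustration. Production matching usually needs fuzzier rules than exact keys, and `str.title()` is only a crude way to normalize names.

```python
import re

def standardize(row: dict) -> dict:
    """Normalize formats so that equivalent values compare equal."""
    return {
        "name": " ".join(row["name"].split()).title(),  # collapse spaces, title-case
        "email": row["email"].strip().lower(),           # case-insensitive match key
        "phone": re.sub(r"\D", "", row["phone"]),        # keep digits only
    }

def deduplicate(rows: list[dict], key: str = "email") -> list[dict]:
    """Keep the first occurrence of each key after standardization."""
    seen, out = set(), []
    for row in map(standardize, rows):
        if row[key] not in seen:
            seen.add(row[key])
            out.append(row)
    return out

raw = [
    {"name": "ada  LOVELACE", "email": "Ada@Example.com ", "phone": "(555) 010-2000"},
    {"name": "Ada Lovelace", "email": "ada@example.com", "phone": "555.010.2000"},
    {"name": "alan turing", "email": "alan@example.com", "phone": "555-010-3000"},
]
cleaned = deduplicate(raw)
print([r["name"] for r in cleaned])  # ['Ada Lovelace', 'Alan Turing']
```

Standardizing before comparing is what lets the first two records, which differ only in formatting, be recognized as the same person.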

Validation and verification techniques are further emphasized as essential competencies. Certified developers acquire methods to cross-check datasets, confirm completeness, and enforce consistency across multiple repositories. They become adept at designing automated routines that flag deviations, track changes, and maintain historical records for auditing purposes. This approach not only enhances operational reliability but also reinforces compliance with regulatory standards, which increasingly demand transparency and traceability in data handling.
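One simple verification technique is to compare control totals (a row count and a summed amount) between a source and its loaded target. This Python sketch assumes a hypothetical `amount` field and uses `Decimal` so that the totals are compared without floating-point rounding noise.

```python
from decimal import Decimal

def control_totals(rows: list[dict], field: str) -> tuple[int, Decimal]:
    """Row count plus summed amount, computed identically for source and target."""
    return len(rows), sum((Decimal(str(r[field])) for r in rows), Decimal("0"))

def reconcile(source: list[dict], target: list[dict], field: str = "amount") -> list[str]:
    """Return human-readable discrepancies; an empty list means the totals agree."""
    src_n, src_sum = control_totals(source, field)
    tgt_n, tgt_sum = control_totals(target, field)
    issues = []
    if src_n != tgt_n:
        issues.append(f"row count mismatch: source={src_n}, target={tgt_n}")
    if src_sum != tgt_sum:
        issues.append(f"amount mismatch: source={src_sum}, target={tgt_sum}")
    return issues

source = [{"amount": "10.10"}, {"amount": "20.20"}]
print(reconcile(source, [{"amount": 10.10}, {"amount": 20.20}]))  # []
print(reconcile(source, [{"amount": 10.10}]))  # one row and its amount went missing
```

A check like this can run automatically after every load and feed the historical audit records mentioned above.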

Advanced data management also encompasses the orchestration of metadata and documentation practices. Professionals learn to maintain comprehensive records of data lineage, transformation logic, and workflow dependencies. This enables transparent tracking of information as it moves through complex pipelines, facilitating troubleshooting, auditing, and future enhancements. By mastering metadata management, certified developers ensure that datasets remain intelligible, reproducible, and analytically valuable even as organizational contexts evolve.

Optimization of data storage and retrieval processes constitutes another dimension of expertise. SAS Certified Data Integration Developers are trained to organize datasets efficiently, employ indexing strategies, and implement partitioning mechanisms that enhance performance. They gain insights into balancing computational speed with storage considerations, understanding that scalable data solutions must reconcile both technical efficiency and operational pragmatism. These skills enable organizations to handle increasingly voluminous and heterogeneous datasets without compromising accessibility or analytical utility.
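The effect of an index on retrieval can be seen with SQLite's `EXPLAIN QUERY PLAN`, run here from Python as a stand-in for whatever database engine a SAS job actually reads from. The `sales` table and the `idx_sales_region` index are hypothetical. Before the index exists, the planner scans the whole table; afterwards, it searches via the index. The exact wording of the plan text varies between SQLite versions.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (id INTEGER PRIMARY KEY, region TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO sales (region, amount) VALUES (?, ?)",
    [(region, float(i)) for i, region in enumerate(["north", "south", "east", "west"] * 250)],
)

def plan(sql: str) -> str:
    """Return SQLite's query-plan text; the last column of each row is the description."""
    return " | ".join(str(row[-1]) for row in conn.execute("EXPLAIN QUERY PLAN " + sql))

query = "SELECT SUM(amount) FROM sales WHERE region = 'east'"
before = plan(query)  # a full scan of sales
conn.execute("CREATE INDEX idx_sales_region ON sales (region)")
after = plan(query)   # a search using idx_sales_region
print(before)
print(after)
```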

Error detection and mitigation are intricately woven into the certification curriculum. Professionals learn to anticipate potential points of failure, design exception-handling routines, and implement safeguards that preserve workflow continuity. This includes identifying schema mismatches, incomplete records, and unexpected anomalies, as well as developing strategies to address these issues without compromising the integrity of the broader system. By mastering these competencies, practitioners cultivate resilience and foresight, ensuring that data pipelines remain robust under varying operational conditions.

The certification further emphasizes the integration of quality assurance practices into routine data management. Professionals are trained to implement standardized checks, validation rules, and monitoring dashboards that continuously assess data integrity. These mechanisms allow organizations to identify trends, detect irregularities early, and maintain consistent quality across datasets, reducing the risk of flawed analyses or erroneous insights. Such practices underscore the principle that high-quality data is not a byproduct but a deliberate and continuous process requiring vigilance, discipline, and expertise.

Collaboration and communication are subtly reinforced within this framework. Data management is rarely executed in isolation; professionals often coordinate with analysts, database administrators, and stakeholders to ensure alignment between technical implementation and business objectives. The certification encourages the development of clear, precise communication skills that allow complex quality assurance procedures to be explained and justified, fostering trust and cohesion across teams. This dimension transforms technical proficiency into strategic influence, as data becomes a shared asset whose reliability underpins organizational decision-making.

Scalability and adaptability are recurrent themes in advanced data management training. Certified developers acquire the ability to design systems that accommodate growing datasets, evolving schemas, and emerging data sources. They learn to implement modular architectures, reusable components, and adaptable workflows that sustain long-term efficiency and effectiveness. This foresight allows organizations to respond dynamically to shifting operational requirements while maintaining consistent quality and integrity across all data processes.

In addition to technical competencies, the certification emphasizes ethical and regulatory awareness. Professionals gain insights into responsible data handling, privacy preservation, and adherence to data protection regulations and industry-specific mandates. This awareness ensures that data management practices are not only operationally effective but also ethically and legally sound, reinforcing organizational credibility and societal trust.

The certification also fosters strategic insight into the role of data quality in decision-making. Professionals understand that analytical outcomes are only as reliable as the datasets that underpin them. By mastering validation, transformation, and governance, certified developers contribute directly to organizational intelligence, enabling leaders to make informed decisions based on robust, accurate, and comprehensive information. This strategic orientation elevates the practitioner from a technical executor to a valued advisor whose expertise informs both operational and strategic initiatives.

Automation is another critical component of advanced data management skills. Professionals learn to design workflows that minimize manual intervention, standardize repetitive tasks, and enhance consistency across processes. By implementing automated quality checks, error handling routines, and transformation sequences, certified developers create environments where efficiency and accuracy coexist harmoniously. This not only accelerates project timelines but also reduces the likelihood of human error, ensuring that data remains reliable and actionable.

The mastery of complex datasets, including nested structures, multi-source integrations, and hierarchical relationships, further differentiates certified professionals. They develop the ability to manage intricate interdependencies, reconcile divergent data points, and produce harmonized outputs suitable for downstream analytical applications. This capacity for handling complexity reflects the advanced nature of the certification, equipping practitioners with skills that are rare and highly sought after in contemporary data-driven enterprises.

Continuous monitoring and evaluation of data processes are emphasized to ensure enduring quality and integrity. Professionals learn to implement metrics, dashboards, and alerts that provide real-time visibility into workflow performance, data anomalies, and transformation efficiency. This enables proactive intervention, early detection of potential issues, and ongoing refinement of processes, cultivating an organizational culture of quality, accountability, and operational excellence.

Finally, the certification encourages an ethos of intellectual curiosity and perpetual growth. Professionals are prepared to adapt to emerging technologies, evolving methodologies, and shifting business contexts, ensuring that their capabilities remain both relevant and innovative. By combining technical mastery, strategic insight, and adaptive intelligence, SAS Certified Data Integration Developers for SAS 9 are uniquely positioned to transform organizational data landscapes, enhancing reliability, operational efficiency, and analytical sophistication.

Mastering ETL Design and Implementation

In contemporary data ecosystems, the capacity to design and implement extract, transform, and load processes is pivotal for operational efficiency and analytical precision. The SAS Certified Data Integration Developer for SAS 9 credential imparts in-depth knowledge of, and practical expertise in, orchestrating ETL workflows that integrate heterogeneous data sources into coherent, analyzable repositories. Professionals pursuing this credential cultivate the ability to construct pipelines that are not only robust and scalable but also aligned with strategic business objectives and operational exigencies.

At the core of ETL design is the extraction of data from diverse repositories. Certified developers acquire proficiency in identifying relevant sources, understanding structural nuances, and extracting information in a manner that preserves accuracy and completeness. This process involves intricate attention to schema variations, data types, and potential inconsistencies that may exist across platforms. Through hands-on exposure, practitioners learn to anticipate and mitigate challenges arising from heterogeneous environments, ensuring that extracted datasets are reliable, comprehensive, and analytically ready.

Transformation skills are further honed through immersive exercises that emphasize the conversion of raw data into meaningful, structured formats. Professionals master techniques for aggregating, filtering, merging, and reshaping information according to precise business logic. This includes implementing conditional operations, hierarchical mappings, and complex calculations that refine datasets for downstream consumption. By integrating transformation logic with meticulous attention to performance and maintainability, certified developers construct workflows that are both efficient and adaptable, capable of handling incremental loads as well as batch processing with equal efficacy.
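Incremental loading is often implemented with a high-water mark: remember the latest modification timestamp already processed, and extract only rows changed after it. The Python sketch below assumes each source row carries a hypothetical `updated_at` value. Passing `datetime.min` as the watermark turns the same function into a full batch extract. A strict `>` comparison can miss rows that share a timestamp but arrive late, so real implementations often add an overlap window or a sequence number.

```python
from datetime import datetime

def incremental_extract(rows: list[dict], watermark: datetime) -> tuple[list[dict], datetime]:
    """Return rows modified after the stored watermark, plus the new watermark."""
    changed = [r for r in rows if r["updated_at"] > watermark]
    new_mark = max((r["updated_at"] for r in changed), default=watermark)
    return changed, new_mark

source = [
    {"id": 1, "updated_at": datetime(2024, 1, 1)},
    {"id": 2, "updated_at": datetime(2024, 1, 5)},
    {"id": 3, "updated_at": datetime(2024, 1, 9)},
]
batch, mark = incremental_extract(source, datetime(2024, 1, 3))
print([r["id"] for r in batch], mark)  # [2, 3] 2024-01-09 00:00:00
batch, mark = incremental_extract(source, mark)
print(batch, mark)  # [] 2024-01-09 00:00:00
full, _ = incremental_extract(source, datetime.min)  # full batch load
```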

Loading processes represent the final, yet equally critical, component of ETL expertise. Professionals are trained to deliver transformed data into target repositories in a manner that ensures integrity, accessibility, and consistency. This involves designing procedures that minimize disruption to operational systems, preserve historical data, and maintain comprehensive audit trails. Certified developers gain experience in configuring load mechanisms that balance speed and reliability, recognizing that optimal ETL workflows require seamless coordination between extraction, transformation, and storage stages.
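Preserving history during a load is commonly done with a Type 2 slowly changing dimension: when a tracked attribute changes, the current row is closed with an end date and a new current row is added. The Python sketch below is a generic illustration of that technique, not the behavior of any particular SAS transformation. The `valid_from`/`valid_to` column names and the sample customer data are assumptions.

```python
from datetime import date

def scd2_load(dim: list[dict], incoming: list[dict], key: str,
              attrs: list[str], as_of: date) -> list[dict]:
    """Type 2 load: expire the current version when tracked attributes change, then add a new one.

    Mutates and returns `dim`; a row with valid_to=None is the current version.
    """
    current = {r[key]: r for r in dim if r["valid_to"] is None}
    for rec in incoming:
        old = current.get(rec[key])
        if old is not None and all(old[a] == rec[a] for a in attrs):
            continue  # unchanged: keep the existing current version
        if old is not None:
            old["valid_to"] = as_of  # close the old version but keep it for history
        new = {key: rec[key], **{a: rec[a] for a in attrs}, "valid_from": as_of, "valid_to": None}
        dim.append(new)
        current[rec[key]] = new
    return dim

dim: list[dict] = []
scd2_load(dim, [{"cust": "C1", "city": "Oslo"}], "cust", ["city"], date(2024, 1, 1))
scd2_load(dim, [{"cust": "C1", "city": "Bergen"}], "cust", ["city"], date(2024, 6, 1))
for row in dim:
    print(row)
# {'cust': 'C1', 'city': 'Oslo', 'valid_from': datetime.date(2024, 1, 1), 'valid_to': datetime.date(2024, 6, 1)}
# {'cust': 'C1', 'city': 'Bergen', 'valid_from': datetime.date(2024, 6, 1), 'valid_to': None}
```

Because the old row is closed rather than overwritten, a query for any past date can still find the value that was valid at the time.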

The SAS 9 environment provides a versatile framework for ETL development, enabling professionals to implement best practices that optimize performance and maintainability. Candidates acquire the ability to design reusable data flows, modular transformation components, and scalable pipelines that can accommodate evolving organizational requirements. This approach not only enhances efficiency but also fosters adaptability, allowing workflows to adjust dynamically to changes in data structures, business priorities, or regulatory standards.
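Modular, reusable components can be sketched as small transformation functions composed into a flow. In this hypothetical Python example, `trim_strings` and the `drop_missing` factory are independent steps, and `build_flow` chains them so the same flow can be reused across jobs; none of these names come from SAS.

```python
from typing import Callable

Row = dict
Transform = Callable[[list], list]

def trim_strings(rows: list[Row]) -> list[Row]:
    """Reusable step: strip surrounding whitespace from every string value."""
    return [{k: v.strip() if isinstance(v, str) else v for k, v in r.items()} for r in rows]

def drop_missing(column: str) -> Transform:
    """Hypothetical step factory: drop rows where `column` is None or empty."""
    def step(rows: list[Row]) -> list[Row]:
        return [r for r in rows if r.get(column) not in (None, "")]
    return step

def build_flow(*steps: Transform) -> Transform:
    """Compose independent steps into one flow, applied left to right."""
    def flow(rows: list[Row]) -> list[Row]:
        for step in steps:
            rows = step(rows)
        return rows
    return flow

# The same building blocks can be recombined into different flows.
customer_flow = build_flow(trim_strings, drop_missing("customer_id"))
rows = [{"customer_id": " C1 ", "name": " Ana "}, {"customer_id": "  ", "name": "Bo"}]
print(customer_flow(rows))  # [{'customer_id': 'C1', 'name': 'Ana'}]
```

Order matters here: trimming first turns the whitespace-only ID into an empty string, which `drop_missing` then removes.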

Error handling and workflow resilience are emphasized throughout the ETL design process. Certified developers learn to anticipate potential disruptions, implement validation routines, and create contingencies that maintain continuity of operations. This includes monitoring for missing or inconsistent records, reconciling schema mismatches, and ensuring that each stage of the pipeline preserves analytical fidelity. By mastering these practices, professionals develop robust ETL processes that detect and contain errors and sustain high performance even in complex or volatile data environments.

Data lineage and traceability are integral to ETL mastery. Professionals are taught to document the flow of information, track transformation logic, and maintain comprehensive metadata for auditing and operational transparency. This ensures that stakeholders can trace the origin, modification, and final destination of every dataset, reinforcing confidence in analytical outputs and enabling efficient troubleshooting. The emphasis on traceability also cultivates accountability and facilitates compliance with industry regulations or internal governance standards.

Optimization strategies constitute another critical area of proficiency. Certified developers learn to streamline ETL workflows by identifying bottlenecks, minimizing redundant operations, and enhancing computational efficiency. Techniques such as partitioning, parallel processing, and indexing are applied to accelerate data movement while preserving accuracy. Professionals develop an instinct for balancing performance with maintainability, understanding that long-term sustainability of ETL processes requires thoughtful design, iterative refinement, and strategic foresight.
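Partitioning and parallel processing can be illustrated by splitting rows into partitions, aggregating each partition concurrently, and merging the partial results; this works because partial sums can be combined in any order (up to floating-point rounding). The Python sketch uses `ThreadPoolExecutor` for simplicity. Because of Python's global interpreter lock, CPU-bound work like this gains little from threads, so a process pool or the database engine's own parallelism would be the realistic choice. The data and function names are hypothetical.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor

def partition(rows: list, n: int) -> list:
    """Split rows into n round-robin partitions."""
    return [rows[i::n] for i in range(n)]

def aggregate(part: list) -> Counter:
    """Partial aggregate: sum amounts per key within one partition."""
    totals = Counter()
    for key, amount in part:
        totals[key] += amount
    return totals

def parallel_sum(rows: list, n_workers: int = 4) -> dict:
    """Aggregate partitions concurrently, then merge the partial sums."""
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        partials = list(pool.map(aggregate, partition(rows, n_workers)))
    merged = Counter()
    for p in partials:
        merged.update(p)  # Counter.update adds values instead of replacing them
    return dict(merged)

rows = [("north", 10), ("south", 5), ("north", 7), ("east", 3), ("south", 1)]
print(parallel_sum(rows, n_workers=2))  # {'north': 17, 'south': 6, 'east': 3}
```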

Scalability is a recurrent theme in ETL training. Professionals gain expertise in designing workflows capable of accommodating expanding datasets, growing user demands, and increasingly complex analytical requirements. They learn to implement modular architectures, enabling workflows to be extended or modified without compromising existing operations. This adaptability ensures that ETL pipelines remain relevant and effective as organizational needs evolve, enhancing the long-term value of data infrastructure investments.

Advanced transformation scenarios further refine the skill set of certified developers. These scenarios include multi-source joins, hierarchical aggregations, conditional branching, and incremental updates. Professionals develop the capacity to manage dependencies, sequence operations strategically, and apply transformations that preserve both performance and analytical rigor. Exposure to such complex scenarios prepares practitioners to tackle real-world challenges, where data pipelines often involve heterogeneous systems, nested structures, and evolving requirements.
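A multi-source join followed by a hierarchical aggregation might look like the Python sketch below. Hypothetical sales records are joined to a store reference table, then totaled at the country level and again at the region level. Sales whose store is missing from the reference table are reported as unmatched rather than silently dropped.

```python
from collections import defaultdict

# Two hypothetical sources: transactional sales and a store reference table.
sales = [("S1", 100.0), ("S2", 40.0), ("S3", 60.0), ("S9", 5.0)]
stores = {"S1": ("EMEA", "Norway"), "S2": ("EMEA", "Spain"), "S3": ("APAC", "Japan")}

def rollup(sales, stores):
    """Join sales to stores, then aggregate at country and region levels."""
    by_country = defaultdict(float)
    by_region = defaultdict(float)
    unmatched = []
    for store_id, amount in sales:
        if store_id not in stores:
            unmatched.append(store_id)  # keep orphans visible instead of dropping them
            continue
        region, country = stores[store_id]
        by_country[(region, country)] += amount
        by_region[region] += amount
    return dict(by_country), dict(by_region), unmatched

by_country, by_region, unmatched = rollup(sales, stores)
print(by_region, unmatched)  # {'EMEA': 140.0, 'APAC': 60.0} ['S9']
```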

Collaboration and strategic alignment are emphasized as essential dimensions of ETL mastery. Data integration workflows must not only be technically proficient but also aligned with business objectives. Professionals are trained to communicate the rationale behind workflow designs, translate technical specifications into operational requirements, and engage with stakeholders to ensure that ETL implementations support organizational priorities. This ability to bridge technical expertise with strategic insight transforms certified developers into indispensable contributors to data-driven decision-making.

Automation is integrated throughout the ETL design process. Professionals learn to create repeatable, automated workflows that minimize manual intervention, enforce consistency, and enhance reliability. Automated logging, error notifications, and validation routines ensure that processes remain transparent, accountable, and maintainable over time. By incorporating automation thoughtfully, certified developers reduce operational risk, accelerate project delivery, and enhance the overall efficiency of data integration operations.
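Python's standard `logging` module is enough to sketch automated logging, validation, and error notification. Each step is a hypothetical `(name, transform, check)` triple. The `notify` callback is an assumption of this sketch: it stands in for whatever alerting channel (email, ticketing, a scheduler hook) a real deployment would use.

```python
import logging

logging.basicConfig(level=logging.INFO, format="%(levelname)s %(name)s: %(message)s")
logger = logging.getLogger("etl")


def run_pipeline(steps, data, notify):
    """Run (name, transform, check) steps in order.

    Each transform returns new data; each check returns True when the output
    is acceptable. On an exception or a failed check, `notify` is called with
    a short message and the run stops, returning None.
    """
    for name, transform, check in steps:
        try:
            data = transform(data)
        except Exception as exc:
            logger.error("step %s raised %r", name, exc)
            notify(f"{name} failed: {exc}")
            return None
        if not check(data):
            logger.error("step %s failed validation", name)
            notify(f"{name} produced invalid output")
            return None
        logger.info("step %s ok (%d rows)", name, len(data))
    return data


alerts = []  # stand-in for a real alerting channel
steps = [
    ("dedupe", lambda rows: sorted(set(rows)), lambda rows: len(rows) > 0),
    ("drop_negative", lambda rows: [r for r in rows if r >= 0],
     lambda rows: all(r >= 0 for r in rows)),
]
print(run_pipeline(steps, [3, 1, 3, -2], alerts.append))  # [1, 3]
```

The run stops at the first failure rather than continuing with suspect data. This keeps the log and the alert pointing at the step that actually went wrong.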

Monitoring and continuous improvement are also pivotal skills imparted through this certification. Professionals are trained to implement performance metrics, dashboards, and alerts that provide real-time visibility into ETL execution. They learn to analyze workflow efficiency, detect anomalies, and iterate on process design to optimize performance continually. This proactive approach reinforces the principle that ETL pipelines are living systems requiring ongoing refinement to sustain reliability and analytical value.
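The following minimal sketch shows execution monitoring. It assumes step durations are already being captured, for example with `time.perf_counter()` around each step. The hypothetical `RunMonitor` class keeps a per-step history and flags any run that is much slower than that step's median. The 1.5x tolerance and three-run warm-up are illustrative defaults, not recommended values.

```python
import statistics


class RunMonitor:
    """Keeps per-step durations and flags runs much slower than usual."""

    def __init__(self, tolerance=1.5, min_history=3):
        self.tolerance = tolerance      # allowed slowdown relative to the median
        self.min_history = min_history  # past runs required before judging
        self.history = {}               # step name -> list of durations (seconds)

    def observe(self, step, seconds):
        """Record a duration; return True if it is anomalous versus past runs."""
        past = self.history.setdefault(step, [])
        anomalous = (
            len(past) >= self.min_history
            and seconds > self.tolerance * statistics.median(past)
        )
        past.append(seconds)
        return anomalous


monitor = RunMonitor()
for seconds in (60, 62, 58, 61):
    monitor.observe("load_orders", seconds)  # builds the baseline
print(monitor.observe("load_orders", 140))   # True: 140 > 1.5 * median(60, 62, 58, 61)
```

The median is used instead of the mean so that one earlier slow run does not inflate the baseline and hide later regressions.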

In addition to technical competencies, certified developers cultivate a nuanced understanding of data quality and governance within ETL workflows. They recognize that the reliability of downstream analytics depends on meticulous management of source, transformed, and loaded data. By embedding validation, reconciliation, and quality assurance protocols directly into ETL processes, professionals ensure that integrated datasets meet rigorous standards of completeness, consistency, and accuracy. This holistic approach positions them as both technical experts and custodians of organizational information.
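Validation and reconciliation checks embedded in a load can be quite simple. One check compares row counts and a control total between source and target. Another lists required fields that arrived empty. The sketch below is plain Python with hypothetical field names. It shows the shape of such checks, not any SAS feature.

```python
def reconcile(source_rows, target_rows, amount_field):
    """Compare row counts and a control total between source and loaded target."""
    issues = []
    if len(source_rows) != len(target_rows):
        issues.append(f"row count: source={len(source_rows)} target={len(target_rows)}")
    # Round the control totals so floating-point noise is not reported as a mismatch.
    src_total = round(sum(r[amount_field] for r in source_rows), 2)
    tgt_total = round(sum(r[amount_field] for r in target_rows), 2)
    if src_total != tgt_total:
        issues.append(f"{amount_field} total: source={src_total} target={tgt_total}")
    return issues


def missing_required(rows, required):
    """Return (row_index, field) pairs where a required field is absent or None."""
    return [(i, f) for i, row in enumerate(rows) for f in required if row.get(f) is None]


source = [{"id": 1, "amount": 10.0}, {"id": 2, "amount": 5.5}]
target = [{"id": 1, "amount": 10.0}]
print(reconcile(source, target, "amount"))
# ['row count: source=2 target=1', 'amount total: source=15.5 target=10.0']
print(missing_required([{"id": 1, "name": None}, {"id": 2, "name": "x"}], ["id", "name"]))
# [(0, 'name')]
```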

Ethical considerations and regulatory compliance are subtly interwoven into ETL practices. Professionals learn to design workflows that safeguard sensitive information, adhere to data protection standards, and maintain auditability. This awareness reinforces responsible stewardship of data and ensures that integration processes not only achieve operational efficiency but also meet legal, ethical, and organizational expectations.

Finally, the certification fosters adaptive intelligence and lifelong learning. Professionals are prepared to assimilate new methodologies, emerging technologies, and evolving business requirements into ETL designs. This ability to adapt while maintaining high standards of accuracy, efficiency, and reliability distinguishes SAS Certified Data Integration Developers for SAS 9 as capable architects of modern data infrastructures. Their expertise in orchestrating ETL workflows translates into tangible business value, operational resilience, and sustained analytical excellence.

Enhancing Data Processes Through Problem-Solving, Automation, and Optimization

In contemporary data ecosystems, the ability to troubleshoot complex issues, implement automation, and optimize workflows defines the efficacy and resilience of organizational operations. The SAS Certified Data Integration Developer for SAS 9 credential equips professionals with these indispensable skills, enabling them to transform raw, heterogeneous datasets into streamlined, reliable, and actionable information. This training fosters analytical rigor, technical dexterity, and strategic foresight, preparing individuals to anticipate challenges, implement corrective measures, and design systems that operate at peak efficiency.

Troubleshooting is a fundamental competency cultivated through this certification. Professionals learn to identify anomalies, diagnose root causes, and resolve inconsistencies that can compromise the integrity of data pipelines. This involves a nuanced understanding of source systems, transformation logic, and load mechanisms, allowing practitioners to pinpoint issues with precision. By engaging in iterative problem-solving exercises, candidates develop an ability to interpret error messages, trace data lineage, and reconstruct workflows to restore operational continuity. This analytical vigilance ensures that disruptions are addressed swiftly, minimizing the impact on business intelligence and decision-making processes.
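Interpreting error messages often starts with scanning the job log. SAS logs prefix problems with `ERROR` and `WARNING` (informational lines use `NOTE`), sometimes followed by a message code such as `ERROR 22-322:`. The minimal Python sketch below extracts those lines together with their line numbers. The sample log text is illustrative. The regular expression is an assumption that covers only these common prefixes, not every possible message format.

```python
import re

# ERROR or WARNING at the start of a line, optionally followed by a message
# code such as "22-322", then a colon and the message text.
ISSUE = re.compile(r"^(ERROR|WARNING)(?:\s+\d+-\d+)?:\s*(.*)$")


def find_issues(log_text):
    """Return (line_number, level, message) for each ERROR/WARNING line."""
    issues = []
    for number, line in enumerate(log_text.splitlines(), start=1):
        match = ISSUE.match(line)
        if match:
            issues.append((number, match.group(1), match.group(2)))
    return issues


sample_log = """NOTE: Libref STAGE was successfully assigned.
ERROR: File STAGE.CUSTOMERS.DATA does not exist.
WARNING: The data set WORK.OUT may be incomplete.
ERROR 22-322: Syntax error, expecting one of the following: ;."""

for number, level, message in find_issues(sample_log):
    print(f"line {number}: {level}: {message}")
```

The first `ERROR` line is usually the one to investigate first, because later messages are often consequences of it.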

Automation is a complementary skill that amplifies the reliability and efficiency of data processes. Certified developers acquire the ability to design repeatable, self-sustaining workflows that minimize manual intervention. This includes automating extraction, transformation, and load sequences, implementing validation checks, and generating alerts for anomalous conditions. Through automation, professionals reduce the risk of human error, accelerate processing timelines, and ensure consistent adherence to quality standards. The certification emphasizes strategic implementation, encouraging candidates to balance automation with oversight, ensuring that workflows remain flexible and adaptable to evolving requirements.

Optimization is another critical facet of professional development within this context. Candidates learn to analyze workflow performance, identify bottlenecks, and implement solutions that enhance computational efficiency without compromising data integrity. Techniques such as parallel processing, resource balancing, and modular design are explored, enabling developers to construct pipelines that are scalable, maintainable, and performant. This focus on optimization reflects an understanding that efficient workflows not only conserve organizational resources but also enhance the timeliness and reliability of analytical outputs.

Error detection and preemptive mitigation are integrated throughout the curriculum. Professionals gain experience in designing systems that anticipate common pitfalls, apply automated correction routines, and maintain comprehensive logs for monitoring and auditing purposes. This proactive approach transforms data pipelines into resilient infrastructures capable of sustaining operations under variable loads and unforeseen challenges. By mastering these skills, certified developers cultivate foresight, operational acumen, and a systematic approach to problem-solving that extends beyond technical execution.

The certification emphasizes iterative improvement, encouraging professionals to continuously assess and refine processes. Candidates learn to implement performance metrics, monitor workflow efficiency, and evaluate the effectiveness of automation and optimization strategies. This continuous feedback loop ensures that ETL processes, data transformations, and integration pipelines evolve in response to changing organizational demands, data volumes, and analytical requirements. The iterative mindset nurtures adaptability, a critical attribute for professionals operating in dynamic, data-intensive environments.

Complex scenario analysis is another component of skill development. Certified developers encounter situations involving multi-source datasets, intricate transformation logic, and interdependent workflows. They learn to dissect these scenarios methodically, applying troubleshooting methodologies, automation frameworks, and optimization principles to restore or enhance functionality. This hands-on experience cultivates a sophisticated understanding of workflow interconnectivity, enabling professionals to anticipate downstream effects and implement changes without introducing unintended disruptions.
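Anticipating downstream effects amounts to treating jobs as a dependency graph. The sketch below assumes Python 3.9 or later for the standard-library `graphlib` module. It derives a valid run order and lists every job affected when one upstream job changes. The job names and the dependency map are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical job graph: each job maps to the set of jobs it depends on.
deps = {
    "stg_customers": {"raw_customers"},
    "stg_orders": {"raw_orders"},
    "fact_orders": {"stg_customers", "stg_orders"},
    "sales_report": {"fact_orders"},
}


def run_order(deps):
    """A sequence in which every job runs after all of its dependencies."""
    return list(TopologicalSorter(deps).static_order())


def downstream(deps, changed):
    """Every job that depends on `changed`, directly or transitively."""
    affected, frontier = set(), [changed]
    while frontier:
        current = frontier.pop()
        for job, needs in deps.items():
            if current in needs and job not in affected:
                affected.add(job)
                frontier.append(job)
    return affected


print(run_order(deps))
print(sorted(downstream(deps, "stg_orders")))  # ['fact_orders', 'sales_report']
```

`static_order()` raises `graphlib.CycleError` if the dependencies contain a cycle. That makes it a useful early check before a job flow is scheduled.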

Data quality is intricately linked to troubleshooting and optimization. Professionals learn to enforce validation protocols, reconcile inconsistent records, and monitor for anomalies that may compromise analytical reliability. The ability to maintain high-quality datasets throughout automated and optimized pipelines reinforces the principle that reliability, efficiency, and accuracy are mutually reinforcing objectives. Certified developers are trained to view quality assurance not as an isolated task but as an integral aspect of all data processes, ensuring that operational improvements do not come at the expense of fidelity.

The SAS 9 environment provides a versatile platform for applying these competencies. Professionals gain exposure to tools and functionalities that enable sophisticated monitoring, automation, and optimization. They learn to configure workflows that adapt dynamically to incoming data, handle incremental updates, and maintain robust audit trails. By leveraging the capabilities of the platform, certified developers create resilient, high-performing systems that are capable of supporting complex organizational needs and dynamic analytical environments.

Collaboration is subtly reinforced throughout this training. Troubleshooting, automation, and optimization often require coordination with database administrators, analysts, and stakeholders. Professionals learn to communicate technical issues clearly, explain corrective measures, and articulate the rationale behind automation and optimization strategies. This collaborative proficiency ensures that workflow improvements are not only technically sound but also strategically aligned with organizational priorities, enhancing both operational efficiency and business value.

Proactive risk management is another dimension emphasized in the certification. Professionals acquire the ability to anticipate potential disruptions, design contingencies, and implement safeguards that preserve workflow continuity. This includes addressing schema mismatches, monitoring for missing or inconsistent records, and applying systematic correction routines. By embedding risk mitigation directly into automated and optimized processes, certified developers build reliability and resilience into their pipelines, so that common faults can be detected, and often corrected, without manual intervention.
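Schema-mismatch detection can be sketched as a comparison between the column definitions a job expects and those an incoming source actually provides. The example uses SAS's two variable types (`num` and `char`) as type labels. The column names and the `schema_diff` function are hypothetical. A real job would read the actual definitions from metadata or from the source table rather than hard-coding them.

```python
def schema_diff(expected, actual):
    """Compare {column: type} mappings and report what changed."""
    shared = set(expected) & set(actual)
    return {
        "missing": sorted(set(expected) - set(actual)),     # expected but absent
        "unexpected": sorted(set(actual) - set(expected)),  # present but not expected
        "type_changes": sorted(c for c in shared if expected[c] != actual[c]),
    }


expected = {"customer_id": "num", "name": "char", "amount": "num"}
incoming = {"customer_id": "num", "name": "num", "region": "char"}
print(schema_diff(expected, incoming))
# {'missing': ['amount'], 'unexpected': ['region'], 'type_changes': ['name']}
```

Running a check like this before the load lets a job stop with a clear message instead of failing partway through or silently loading mistyped data.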

Efficiency is further enhanced through strategic resource allocation and performance tuning. Candidates learn to analyze the computational and temporal costs of various workflow configurations, implement load balancing strategies, and streamline transformation logic to maximize throughput. This analytical approach ensures that data processes remain efficient even under increasing data volumes or complex operational demands. The ability to balance resource utilization with performance outcomes is a hallmark of advanced expertise in SAS-based data integration.

The certification also emphasizes the interplay between automation and human oversight. Professionals are trained to implement automated routines while maintaining visibility into workflow execution. This balance allows for rapid processing without sacrificing control, enabling practitioners to intervene when anomalies are detected, refine processes, and adapt workflows in real time. The integration of automation with intelligent oversight ensures that ETL pipelines remain reliable, accurate, and responsive to evolving business requirements.

Scenario-based exercises reinforce these competencies. Professionals engage with challenges that involve high-volume datasets, complex transformations, and intricate dependencies across multiple repositories. They learn to troubleshoot, automate, and optimize under realistic conditions, applying learned principles to restore functionality, enhance efficiency, and maintain quality. These immersive experiences cultivate practical acumen, strategic insight, and confidence in managing complex data environments.

Ethical and regulatory considerations are subtly embedded within these practices. Professionals learn to automate processes and optimize workflows in a manner that preserves privacy, maintains compliance, and ensures transparency. This awareness reinforces responsible data stewardship while enhancing operational efficiency, positioning certified developers as conscientious custodians of organizational information.

Finally, the certification fosters an enduring mindset of adaptive intelligence and continuous improvement. Professionals emerge with the ability to assimilate emerging technologies, evolving methodologies, and new operational requirements into their workflows. This adaptability ensures that troubleshooting, automation, and optimization skills remain relevant, allowing SAS Certified Data Integration Developers for SAS 9 to continuously enhance organizational data processes, improve efficiency, and support high-quality analytical outcomes in dynamic environments.

Expanding Career Horizons Through Certification

In the contemporary data-driven landscape, organizations increasingly rely on professionals who can consolidate, transform, and optimize information with precision and foresight. The SAS Certified Data Integration Developer for SAS 9 credential empowers individuals with the skills to navigate complex data environments, design robust workflows, and ensure the quality, consistency, and reliability of integrated datasets. Beyond technical proficiency, this certification cultivates strategic insight, operational agility, and analytical judgment, positioning certified developers to make impactful contributions to organizational success.

Professionals who attain this certification gain access to diverse career trajectories across multiple industries. Financial institutions, healthcare organizations, retail enterprises, and manufacturing conglomerates alike seek experts capable of integrating vast datasets from heterogeneous sources into coherent, actionable formats. Candidates acquire skills that enable them to bridge gaps between raw data and informed decision-making, making them valuable assets in roles that span data engineering, business intelligence, analytics, and operational management. The certification signifies not only mastery of SAS 9 tools but also the ability to translate technical knowledge into strategic value for enterprises.

The credential fosters expertise in designing and executing complex extract, transform, and load processes. Professionals learn to harmonize data from relational databases, flat files, cloud repositories, and multidimensional systems, ensuring seamless integration across organizational platforms. This capacity allows certified developers to address the intricate challenges of contemporary data ecosystems, where multiple sources, variable formats, and evolving schemas coexist. By mastering these integration strategies, candidates enhance both operational efficiency and analytical rigor, enabling organizations to rely on datasets that are accurate, consistent, and timely.

A key outcome of this training is the development of advanced problem-solving and optimization skills. Professionals acquire the ability to troubleshoot complex data workflows, detect anomalies, and implement corrective measures while maintaining workflow continuity. They learn to optimize transformation logic, automate repetitive processes, and design pipelines that scale with growing data volumes and evolving business requirements. These competencies foster resilience and agility, equipping practitioners to anticipate challenges and adapt workflows proactively, minimizing disruptions and maintaining high standards of quality.

Data quality assurance is another critical dimension emphasized in the certification. Candidates develop proficiency in validating, cleansing, and reconciling data to ensure integrity and consistency. They learn to establish protocols that monitor data lineage, enforce validation rules, and maintain traceability throughout the lifecycle of a dataset. These practices not only enhance reliability but also reinforce governance, compliance, and accountability, ensuring that organizational decision-making is informed by trustworthy information.

Industry applications of these skills are wide-ranging and profound. In finance, certified developers contribute to risk assessment, fraud detection, and regulatory reporting by integrating and standardizing complex transactional datasets. In healthcare, they enable patient data consolidation, outcomes analysis, and predictive modeling, ensuring that clinical decisions are supported by accurate and comprehensive information. Retail and e-commerce enterprises benefit from enhanced customer insights, inventory management, and demand forecasting, derived from harmonized sales, marketing, and logistics data. Manufacturing organizations leverage integrated datasets to monitor production efficiency, optimize supply chains, and improve quality control processes. Across these sectors, the ability to translate raw data into actionable insights differentiates certified professionals as strategic contributors to organizational success.

The strategic value of the certification extends beyond operational efficiency. Certified developers acquire a holistic understanding of how integrated data supports decision-making, enabling organizations to align analytics with broader business objectives. Professionals learn to communicate complex workflows to stakeholders, translate technical processes into strategic insights, and advocate for data-driven initiatives that enhance competitiveness. By combining technical mastery with strategic perspective, certified developers elevate their influence, guiding organizational priorities and fostering informed, data-backed strategies.

Automation and workflow optimization further enhance the value of certification. Professionals are trained to design repeatable, automated pipelines that minimize human error and accelerate processing timelines. They gain expertise in modular workflow design, parallel processing, and resource balancing, enabling efficient management of large-scale datasets. These capabilities contribute to organizational agility, allowing enterprises to respond quickly to changing market conditions, operational demands, and analytical needs. Certified developers become pivotal in implementing scalable, resilient data architectures that sustain long-term growth and performance.

Collaboration is reinforced throughout the certification experience. Data integration rarely occurs in isolation; professionals often coordinate with analysts, business managers, database administrators, and IT teams. The credential emphasizes the ability to communicate technical concepts clearly, foster alignment between operational execution and strategic objectives, and contribute to cohesive, high-performing teams. This collaborative proficiency ensures that data initiatives are not only technically sound but also contextually relevant, creating value across organizational layers.

Ethical stewardship of data is another critical dimension embedded in certification training. Professionals learn to handle sensitive information responsibly, adhere to compliance requirements, and implement governance frameworks that safeguard privacy and transparency. This awareness reinforces the importance of responsible data management, ensuring that integrated datasets uphold both operational standards and societal expectations. Certified developers emerge as conscientious custodians of organizational information, balancing efficiency, accuracy, and ethical responsibility.

The certification also instills adaptability and continuous learning. As data technologies, analytical methods, and business requirements evolve, professionals are prepared to assimilate new tools, refine workflows, and maintain best practices in integration and transformation. This dynamic capability ensures that certified developers remain relevant, innovative, and effective, providing long-term value to organizations that rely on accurate, integrated data for strategic decision-making.

Career growth for certified developers is often accelerated by the combination of technical proficiency, analytical capability, and strategic insight cultivated through SAS 9 certification. Professionals are well-positioned for roles such as data integration specialist, ETL developer, business intelligence analyst, data engineer, and enterprise data architect. These roles often command competitive salaries and also offer opportunities to influence organizational strategy, drive innovation, and shape operational excellence. The credential signals both expertise and commitment, enhancing employability and professional credibility in a competitive marketplace.

The breadth of skills acquired through this certification ensures applicability across emerging technologies and evolving business paradigms. Certified professionals can adapt integration strategies for cloud computing environments, distributed data architectures, and big data ecosystems. They gain proficiency in managing complex transformations, optimizing workflow performance, and ensuring data quality across increasingly sophisticated platforms. This versatility amplifies career opportunities and positions certified developers as key contributors in both current and future technological landscapes.

Strategic value extends to the organizational impact of certified developers. By integrating disparate datasets, optimizing workflows, and ensuring data reliability, these professionals enhance decision-making, operational efficiency, and analytical insight. They contribute to data-driven cultures where evidence-based strategies inform management practices, operational improvements, and innovation initiatives. The certification equips professionals to serve as bridges between technical execution and strategic vision, reinforcing the alignment between data infrastructure and organizational objectives.

Furthermore, the certification nurtures an analytical mindset, enabling professionals to approach challenges with systematic reasoning and creative problem-solving. Candidates learn to dissect complex workflows, identify optimization opportunities, and implement solutions that balance efficiency, accuracy, and sustainability. This combination of analytical rigor and practical expertise empowers certified developers to navigate intricate data environments, resolve operational challenges, and enhance the overall intelligence of organizations.

As organizations continue to embrace digital transformation, demand for skilled data integration professionals continues to grow. The SAS Certified Data Integration Developer for SAS 9 credential equips individuals with specialized, sought-after skills, helping them remain competitive, adaptable, and influential. Their ability to manage, transform, and optimize complex datasets positions them as critical assets in data-intensive industries, capable of delivering operational efficiency, strategic insight, and tangible business value.

Ultimately, the certification fosters a holistic professional profile that blends technical mastery, analytical sophistication, strategic perspective, and ethical responsibility. Certified developers emerge equipped to design, implement, and optimize data workflows that support informed decision-making, drive organizational performance, and sustain competitive advantage in a rapidly evolving landscape. The combination of technical prowess, operational insight, and strategic foresight positions these professionals as indispensable contributors to organizational intelligence and long-term success.

The strategic and professional value of the SAS Certified Data Integration Developer for SAS 9 credential is substantial. It signifies expertise in orchestrating data processes, ensuring data quality, implementing automation, and optimizing complex workflows. It conveys credibility to employers, clients, and colleagues, reflecting a mastery of both the technical and strategic dimensions of data integration. By achieving this certification, professionals not only expand their career prospects but also contribute meaningfully to the efficiency, insight, and innovation capacity of their organizations.

Certified developers possess the agility to adapt to emerging tools, methodologies, and operational demands, ensuring their skills remain relevant in dynamic environments. They are equipped to lead initiatives, mentor peers, and influence data strategy, creating ripple effects of value that extend across teams and organizational hierarchies. Their combination of technical, analytical, and strategic capabilities positions them as leaders in the field of data integration, capable of translating complex workflows into actionable insights, efficient processes, and long-term organizational growth.

The intersection of technical expertise, problem-solving acumen, and strategic vision cultivated through SAS 9 certification transforms professionals into architects of integrated data ecosystems. Their ability to harmonize diverse datasets, optimize workflows, ensure quality, and implement automation enables organizations to harness the full potential of information assets. By integrating these capabilities, certified developers enhance analytical accuracy, operational efficiency, and organizational decision-making, establishing themselves as indispensable contributors to data-driven success.

The credential’s emphasis on lifelong learning, adaptability, and ethical stewardship further solidifies its value. Professionals are prepared to evolve alongside technological advancements, regulatory changes, and shifting business imperatives, ensuring sustained relevance and impact. Their mastery of SAS tools, coupled with strategic and analytical insight, positions them as catalysts for organizational intelligence, operational excellence, and long-term competitive advantage.

The SAS Certified Data Integration Developer for SAS 9 credential thus represents more than a technical certification; it embodies a comprehensive professional transformation. Individuals emerge capable of designing, implementing, and optimizing complex data workflows, ensuring quality and reliability, automating repetitive tasks, and contributing strategic insights that enhance organizational performance. Through these capabilities, certified developers drive efficiency, foster informed decision-making, and create measurable business value, solidifying their role as indispensable assets in the modern data-driven enterprise.

Conclusion

The SAS Certified Data Integration Developer for SAS 9 certification offers substantial professional growth, industry relevance, and strategic impact. It equips professionals with the advanced technical skills, analytical rigor, and strategic insight needed to design, implement, and optimize complex data integration workflows. Certified developers excel in troubleshooting, automation, optimization, and quality assurance, ensuring reliable and actionable datasets across diverse industries. Beyond technical mastery, the credential fosters ethical responsibility, adaptability, and lifelong learning, enabling professionals to navigate evolving technologies and organizational needs. By achieving this certification, individuals enhance their career prospects, drive operational efficiency, and contribute meaningfully to organizational intelligence, positioning themselves as indispensable architects of modern data ecosystems.

Frequently Asked Questions

How can I get the products after purchase?

All products are available for download immediately from your Member's Area. Once you have made the payment, you will be transferred to the Member's Area, where you can log in and download the products you have purchased to your computer.

How long can I use my product? Will it be valid forever?

Test-King products are valid for 90 days from the date of purchase. During those 90 days, any updates to the products, including but not limited to new questions and changes made by our editing team, will be downloaded automatically to your computer, so you always have the latest exam prep materials.

Can I renew my product when it has expired?

Yes. When the 90-day validity period of your product is over, you can renew your expired products at a 30% discount. This can be done in your Member's Area.

Please note that you will not be able to use the product after it has expired if you don't renew it.

How often are the questions updated?

We always try to provide the latest pool of questions. Updates to the questions depend on changes that the various vendors make to their actual question pools. As soon as we learn of a change in an exam's question pool, we do our best to update the products as quickly as possible.

On how many computers can I download Test-King software?

You can download Test-King products on a maximum of two (2) computers or devices. If you need to use the software on more than two machines, you can purchase this option separately. Please email support@test-king.com if you need to use more than five (5) computers.

What is a PDF Version?

The PDF Version is a PDF document of the Questions & Answers product. The file uses the standard .pdf format, which can be read by any PDF reader application, such as Adobe Acrobat Reader, Foxit Reader, OpenOffice, Google Docs, and many others.

Can I purchase PDF Version without the Testing Engine?

The PDF Version cannot be purchased separately. It is only available as an add-on to the main Questions & Answers Testing Engine product.

What operating systems are supported by your Testing Engine software?

Our testing engine runs on Windows. Android and iOS versions are currently under development.